Making Climate Models Look Good

Clive Best dove into climate model temperature projections and discovered how the data can be manipulated to make model projections look closer to measurements than they really are. His first post was “A comparison of CMIP5 Climate Models with HadCRUT4.6” (January 21, 2019). Excerpts in italics with my bolds.

Overview: Figure 1 shows a comparison of the latest HadCRUT4.6 temperatures with CMIP5 models for the Representative Concentration Pathways (RCPs). The temperature data lie significantly below all RCPs, which themselves only diverge after ~2025.

Modern climate models originate from the General Circulation Models used for weather forecasting. These simulate the 3D hydrodynamic flow of the atmosphere and ocean as the Earth rotates daily on its tilted axis and orbits the Sun annually. The meridional flow of energy from the tropics to the poles generates convective cells, prevailing winds, ocean currents and weather systems. Energy must be balanced at the top of the atmosphere (TOA) between incoming solar energy and outgoing infrared energy. This depends on changes in solar heating, water vapour, clouds, CO2, ozone, etc. This energy balance determines the surface temperature.
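The top-of-atmosphere balance described above can be illustrated with the textbook zero-dimensional energy balance model, sketched below in Python. This is a deliberate simplification and not how a GCM works: it assumes a solar constant of 1361 W/m² and a planetary albedo of 0.3, and it lumps the whole greenhouse effect into a single hypothetical "effective emissivity" parameter. It only shows why small shifts in the energy budget translate into degrees of surface temperature.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def equilibrium_temperature(solar_constant=1361.0, albedo=0.3, emissivity=1.0):
    """Zero-dimensional balance: absorbed solar flux = emitted infrared flux.

    Absorbed flux is S * (1 - albedo) / 4 (a disc intercepts sunlight, a sphere emits);
    emitted flux is emissivity * sigma * T^4. The 'emissivity' here is an assumed,
    crude stand-in for the greenhouse effect, not a quantity taken from any model.
    """
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / (emissivity * SIGMA)) ** 0.25


def effective_emissivity(surface_temp, solar_constant=1361.0, albedo=0.3):
    """Invert the balance: the effective emissivity that yields a given temperature (K)."""
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return absorbed / (SIGMA * surface_temp ** 4)


print(round(equilibrium_temperature(), 1))                 # no greenhouse effect
print(round(equilibrium_temperature(emissivity=0.61), 1))  # crude greenhouse effect
print(round(effective_emissivity(286.0), 3), round(effective_emissivity(290.0), 3))
```

With an emissivity of 1 the balance gives roughly 255 K, and an effective emissivity of about 0.61 gives roughly 288 K. Solving the other way, surface temperatures of 286 K and 290 K correspond to effective emissivities of roughly 0.63 and 0.59, so a change of a few percent in this one lumped parameter moves the answer by 4 degrees.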

Weather forecasting models use live data assimilation to fix the state of the atmosphere at a given time and then extrapolate forward one or more days, up to a maximum of a week or so. Climate models, however, run autonomously from some initial state, stepping far into the future on the assumption that they correctly simulate a climate changing due to CO2 levels, incident solar energy, aerosols, volcanoes, etc. These models predict past and future surface temperatures, regional climates, rainfall, ice cover, etc. So how well are they doing?

Fig 2. Global Surface temperatures from 12 different CMIP5 models run with RCP8.5

The disagreement on the global average surface temperature is huge: a spread of 4C. This implies that there must still be a problem with achieving overall energy balance at the TOA. Wikipedia tells us that the average temperature should be about 288K, or 15C. Despite this discrepancy in reproducing the absolute surface temperature, the model warming trends for RCP8.5 are similar.
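Comparing warming trends rather than absolute levels is what lets models that disagree by 4C look similar. A minimal ordinary least-squares trend, sketched here in Python with hypothetical straight-line "model runs" (not CMIP5 output), shows that a constant offset in absolute temperature has no effect on the fitted slope.

```python
def ols_trend(years, temps):
    """Ordinary least-squares slope of temps against years (degrees per year)."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(temps) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, temps))
    sxx = sum((x - x_mean) ** 2 for x in years)
    return sxy / sxx


years = list(range(1980, 2020))
# Hypothetical runs: identical 0.025 C/yr warming, absolute levels 4 C apart
warm_model = [16.0 + 0.025 * (y - 1980) for y in years]
cold_model = [12.0 + 0.025 * (y - 1980) for y in years]

for name, run in [("warm", warm_model), ("cold", cold_model)]:
    print(name, round(10 * ols_trend(years, run), 3), "C/decade")
```

Both runs give the same 0.25 C/decade trend, because subtracting the means in the slope formula removes any constant offset. Real runs would differ in both offset and trend; this only isolates the offset.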

Likewise, weather station measurements of temperature have changed with time and place, so they too do not yield a consistent absolute temperature average. The ‘solution’ to this problem is to use temperature ‘anomalies’ instead, relative to the monthly normals over a fixed baseline period. I always use the same baseline as CRU, 1961-1990. Global warming is then measured by the change in such global average temperature anomalies. The implicit assumption is that nearby weather stations and/or ocean measurements warm or cool coherently, so that changes in temperature relative to the baseline can all be spatially averaged together. The usual example is two nearby stations at different altitudes, which will have different temperatures but produce similar ‘anomalies’. A similar procedure is used on the model results to produce temperature anomalies. So how do they compare to the data?
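The anomaly procedure can be sketched in a few lines of Python. This assumes a simple monthly series keyed by (year, month) and computes each calendar month's normal over the 1961-1990 baseline; the two stations are hypothetical synthetic data, with station B set 6.5C colder throughout (roughly the standard lapse rate for 1 km of extra altitude).

```python
import math
from statistics import mean


def monthly_anomalies(series, base_start, base_end):
    """Convert monthly temperatures to anomalies against a baseline climatology.

    series maps (year, month) -> temperature in deg C. For each calendar month the
    baseline 'normal' is the mean of that month over base_start..base_end inclusive.
    """
    normals = {
        m: mean(t for (y, mo), t in series.items() if mo == m and base_start <= y <= base_end)
        for m in range(1, 13)
    }
    return {(y, m): t - normals[m] for (y, m), t in series.items()}


# Hypothetical station A: seasonal cycle plus a 0.02 C/yr trend (synthetic, not real data)
station_a = {
    (y, m): 10.0 + 8.0 * math.sin(2 * math.pi * (m - 4) / 12) + 0.02 * (y - 1961)
    for y in range(1961, 2021)
    for m in range(1, 13)
}
# Hypothetical station B: same weather, but higher up, so 6.5 C colder throughout
station_b = {k: t - 6.5 for k, t in station_a.items()}

anom_a = monthly_anomalies(station_a, 1961, 1990)
anom_b = monthly_anomalies(station_b, 1961, 1990)
print(round(anom_a[(2020, 7)], 3), round(anom_b[(2020, 7)], 3))
```

Although the absolute temperatures differ by 6.5C, both stations give the same July 2020 anomaly (about 0.89C), which is why anomalies from different stations, or from models with different absolute temperatures, can be averaged together.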

Fig 4. Model comparisons to data 1950-2050

Figure 4 shows a close-up from 1950 to 2050. There is a large spread in model trends even within each RCP ensemble. The data fall below the bulk of model runs after 2005, except briefly during the recent El Niño peak in 2016. Figure 4 also shows that the data are now lower than the mean of every RCP; furthermore, we won’t be able to distinguish between RCPs until after ~2030.

Zeke Hausfather’s Tricks to Make the Models Look Good

Clive’s second post is “Zeke’s Wonder Plot” (January 25, 2019). Excerpts in italics with my bolds.

Zeke Hausfather, who works for Carbon Brief and Berkeley Earth, has produced a plot which shows almost perfect agreement between CMIP5 model projections and global temperature data. This is based on RCP4.5 models and a baseline of 1981-2010. First, here is his original plot.

I have reproduced his plot and essentially agree that it is correct. However, I also found some interesting quirks.

The apples-to-apples comparison (model SSTs blended with model land 2m temperatures) reduces the model mean by about 0.06C. Zeke has also smoothed the temperature data using a 12-month running average. This has the effect of exaggerating peak values compared with using annual averages.
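Why a 12-month running average can exaggerate a peak is easy to check numerically. In this Python sketch the monthly anomalies are hypothetical: a flat 0.5C with a six-month El Niño-like spike to 0.9C straddling the turn of a year. Calendar-year means split the spike between two years, while some 12-month window contains all of it. A trailing window is assumed; a centred window produces the same set of values, only shifted in time.

```python
def trailing_running_mean(values, window=12):
    """Trailing running mean; the first value covers months 0..window-1."""
    return [sum(values[i - window + 1:i + 1]) / window for i in range(window - 1, len(values))]


def calendar_year_means(values):
    """Mean of each complete 12-month block, assuming values[0] is a January."""
    return [sum(values[i:i + 12]) / 12 for i in range(0, len(values) - 11, 12)]


# Hypothetical monthly anomalies for 2014-2017 (48 months): flat 0.5 C, with a
# spike to 0.9 C from October 2015 to March 2016 (month indices 21-26).
monthly = [0.9 if 21 <= i <= 26 else 0.5 for i in range(48)]

print(round(max(calendar_year_means(monthly)), 3))    # spike split across 2015 and 2016
print(round(max(trailing_running_mean(monthly)), 3))  # one window holds the whole spike
```

Here the highest calendar-year mean is 0.6C but the running-mean peak is 0.7C. In general the running mean's maximum can never be lower than the highest annual mean, because every January-December block is itself one of the 12-month windows.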

Effect of changing normalisation period. Cowtan & Way uses kriging to interpolate HadCRUT4.6 coverage into the Arctic and elsewhere.

Shown above is the result for a normalisation period of 1961-1990. First, look how the lowest two model projections now drop further down, while the data now seemingly lie below both the blended (thick black) and the original CMIP average (thin black). The HadCRUT4 value for 2016 is now below the blended value.
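Changing the normalisation period subtracts a different constant from each series. A minimal Python sketch, using hypothetical straight-line series rather than the real CMIP5 and HadCRUT4.6 data, shows the arithmetic: if a model warms faster than the observations, its excess in a late year shrinks when the baseline is moved later, because the gap is proportional to the time elapsed since the middle of the baseline period.

```python
from statistics import mean


def rebaseline(series, start, end):
    """Anomalies of an annual series (dict year -> deg C) relative to its mean over start..end."""
    base = mean(t for y, t in series.items() if start <= y <= end)
    return {y: t - base for y, t in series.items()}


def model_minus_obs(model, obs, year, start, end):
    """Model-minus-observation gap in one year after putting both on the same baseline."""
    return rebaseline(model, start, end)[year] - rebaseline(obs, start, end)[year]


years = range(1950, 2019)
# Hypothetical linear series (illustrative only): the 'model' runs 1 C warm in
# absolute terms and warms at 0.025 C/yr; the 'obs' warm at 0.015 C/yr.
model = {y: 15.0 + 0.025 * (y - 1950) for y in years}
obs = {y: 14.0 + 0.015 * (y - 1950) for y in years}

for start, end in [(1961, 1990), (1981, 2010)]:
    print(start, end, round(model_minus_obs(model, obs, 2018, start, end), 3))
```

The 2018 gap is 0.425C on the 1961-1990 baseline but 0.225C on 1981-2010: the trend difference (0.01C/yr) times the years from the baseline mid-point (1975.5 or 1995.5) to 2018. The 1C absolute offset between the series drops out in both cases.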

This improved model agreement has nothing to do with the data itself but instead is due to a reduction in warming predicted by the models. So what exactly is meant by ‘blending’?

Measurements of global average temperature anomalies use weather stations on land and sea surface temperatures (SST) over oceans. The land measurements are “surface air temperatures” (SAT), defined as the temperature 2m above ground level. The CMIP5 simulations, however, used SAT everywhere. The blended model projections use simulated SAT over land and TOS (the model’s sea surface temperature) over oceans. This reduces all model predictions slightly, thereby marginally improving agreement with data. See also Climate-lab-book.

The detailed blending calculations were done by Kevin Cowtan, using a land mask and an ice mask to define where TOS and SAT should be used in forming the global average. I downloaded his Python scripts and checked the whole algorithm, and it looks good to me. His results are based on the RCP8.5 ensemble.
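The idea of blending can be sketched as an area-weighted global mean in which each grid cell uses air temperature over land and sea ice and sea surface temperature over open water. This is a hypothetical simplification written for illustration, not Kevin Cowtan’s actual scripts: it assumes a regular latitude-longitude grid (cell area proportional to the cosine of latitude), fractional land and sea-ice masks, and fields that are already anomalies. The tiny 2x2 grid and its values are invented.

```python
import math


def blended_global_mean(lats, tas_anom, tos_anom, land_frac, ice_frac):
    """Area-weighted global mean of a blended anomaly field.

    lats: cell-centre latitudes (degrees), one per grid row.
    Other arguments: 2D lists indexed [row][column], fractions in 0..1.
    Air temperature is used over land and sea ice, SST over open water.
    """
    num = den = 0.0
    for i, lat in enumerate(lats):
        w = math.cos(math.radians(lat))  # cell area ~ cos(latitude) on a regular grid
        for j in range(len(tas_anom[i])):
            # Fraction of the cell where air temperature applies: land plus ice-covered sea
            air = land_frac[i][j] + (1.0 - land_frac[i][j]) * ice_frac[i][j]
            num += w * (air * tas_anom[i][j] + (1.0 - air) * tos_anom[i][j])
            den += w
    return num / den


lats = [-45.0, 45.0]
tas_anom = [[1.0, 1.0], [1.0, 1.0]]  # hypothetical air-temperature anomalies
tos_anom = [[0.8, 0.8], [0.8, 0.8]]  # hypothetical SST anomalies, warming less than air
land = [[0.0, 1.0], [0.0, 1.0]]      # first column ocean, second column land
no_ice = [[0.0, 0.0], [0.0, 0.0]]
some_ice = [[1.0, 0.0], [0.0, 0.0]]  # southern ocean cell fully ice-covered

print(round(blended_global_mean(lats, tas_anom, tos_anom, land, no_ice), 3))
print(round(blended_global_mean(lats, tas_anom, tos_anom, land, some_ice), 3))
```

An SAT-everywhere average of this field would be 1.0; blending gives 0.9, or 0.95 when one ocean cell is ice-covered. Because SSTs warm less than the air above them, blending lowers the model mean, which is the direction of the adjustment described above.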

The solid blue curve is the CMIP5 RCP4.5 ensemble average after blending. The dashed curve is the original.

Again the models mostly lie above the data after 1999.

This post is intended to demonstrate just how careful you must be when interpreting plots that seemingly demonstrate either full agreement of climate models with data, or else total disagreement.

In summary, Zeke Hausfather, writing for Carbon Brief, was able to show almost perfect agreement between data and models by 1) a clever choice of baseline, 2) a choice of RCP for the blended models, and 3) using a 12-month running average. His plot is 100% correct. However, exactly the same data plotted with a different baseline, and using annual values (exactly like those in the models) instead of 12-month running averages, show that the models still lie consistently above the data. I know which one I think best represents reality.

Moral to the Story:

There are lots of ways to make computer models look good. Try not to be distracted.

Reblogged this on Climate Collections.

As you say, Ron, ‘There are lots of ways to make computer models look good,’ and climate science explores most of them. But if your models *won’t* agree with observations (ref. Professor Richard Lindzen), or *can’t* agree with observations (ref. Professor Judith Curry et al., not big Al), then no matter how beautiful the model, it’s wro-ong.

https://beththeserf.wordpress.com/2018/12/23/56th-edition-serf-under_ground-journal/

Or you can fix it like GISS does. Every year their graph of temperature observations morphs closer and closer to the models’ average estimates.

I do not see anything wrong with running averages or with the choice of baseline. The question is why Zeke’s graph omits the period before 1970 (where the agreement with measured data must have deteriorated; that is my guess, I did not calculate it). It would be a real triumph if the result agreed with measured data over the whole range of the simulation. But it would be shameful if the agreement were only transferred from one part of the graph to another and the rest of the graph dropped.

udoli, note that most of Zeke’s result comes from using two different baselines for anomalies. HadCRUT uses 1961-1990, while he shows the CMIP5 models on 1981-2010. The latter baseline is higher, so the anomalies are reduced. The models estimate GMT in kelvin, so it is simple to put them on the same baseline as the observations, which Clive has done. A true comparison requires normalizing to the same standard. Putting all the data on the same baseline (either one) shows what Clive shows. Zeke’s trick is clever, eh?

On your other point, remember that CMIP5 models hindcast from 2005 backwards and forecast from 2006 to 2100. Since most models are tuned to the rising temperatures from 1976 to 1998, they fail to replicate the decrease from 1946 to 1976.

You are basically right. Nevertheless, my point was that changing the baseline only shifts all the simulated data by a constant. When the individual models differ from each other by whole degrees, a shift by a constant is meaningless in my eyes: almost the same would happen if some of the model authors had not produced any calculation at all. The whole technique rests on the idea that the absolute value of the temperature matters little; what matters is the derivative, which is gradually added up (integrated) to yield the result. I have no problem with a constant in this difficult situation _IF_ it does not hurt the agreement with measured data in the left part of the graph, which was UNFORTUNATELY omitted from Zeke’s graph.

In principle, the graph of any simulation should have three parts: the leftmost part, from which the calculation takes its boundary conditions; the middle part, where the simulation proves the validity of the algorithm by replicating real-world data that were not used previously; and the rightmost part, where the forecast is made. It seems to me that Zeke takes the second part as the baseline and leaves out the left part of the graph. I cannot shake the suspicion of a philosophical mistake.

udoli, I take your point. Zeke’s graph starts in 1970 because prior to that observed temperatures were falling, not rising, and divergence from the models would be obvious. In fact they were falling and stopped only in 1977, a fact hidden by the averaging. As I said above, Zeke’s graph misleads in all time frames by not putting model estimates on the same baseline as observations. The issue is not comparison between models, but how models compare to observations.

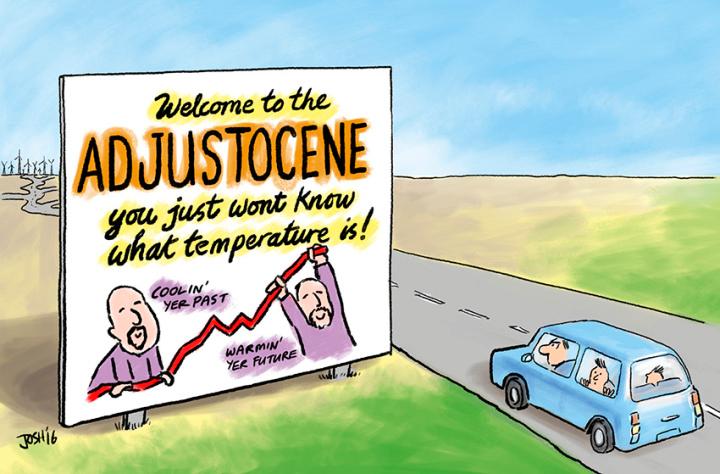

When observations and models don’t match, add-ustments are required; ’tis the cli-science method.

You got it!

Tony Heller 28/01/19.

https://realclimatescience.com/2019/01/overwhelming-evidence-of-collusion/

What leads generally good people to believe so strongly in the climate consensus that they turn off their critical thinking skills?

hunter, my guess: The instinct of the herd is to fear the future and blame someone.

Reblogged this on WeatherAction News and commented:

When you’ve so much invested in an outcome and the FUD sales puffy is falling you have to make your data look good – no matter how far the truth is stretched.

No, Ron, you got it! Lol.
