Magnetic Pole Swapping and Cooling

The Earth’s North magnetic pole has been wandering, as seen in this animation from the National Centers for Environmental Information, which tracks its position at 10-year intervals from 1970 to 2020.

This post discusses solar and geologic magnetic pole swapping (not with each other, of course) and the implications for humans. First the Earth, and later the sun.

What On Earth?

Newsweek chose to report yesterday on Earth’s meandering north pole, as shown in the cool graphic above. That article (here) aims at sensational possible calamities, including high-energy radiation, space particles, ozone depletion and electrical blackouts. A more sober assessment is provided by The Conversation article “Why the Earth’s magnetic poles could be about to swap places – and how it would affect us” by Phil Livermore and Jon Mound of U. Leeds. Excerpts below with my bolds.

The Earth’s magnetic field surrounds our planet like an invisible force field – protecting life from harmful solar radiation by deflecting charged particles away. Far from being constant, this field is continuously changing. Indeed, our planet’s history includes at least several hundred global magnetic reversals, where north and south magnetic poles swap places. So when’s the next one happening and how will it affect life on Earth?

During a reversal the magnetic field won’t be zero, but will assume a weaker and more complex form. It may fall to 10% of the present-day strength and have magnetic poles at the equator or even the simultaneous existence of multiple “north” and “south” magnetic poles.

Geomagnetic reversals occur a few times every million years on average. However, the interval between reversals is very irregular and can range up to tens of millions of years.

There can also be temporary and incomplete reversals, known as events and excursions, in which the magnetic poles move away from the geographic poles – perhaps even crossing the equator – before returning back to their original locations. The last full reversal, the Brunhes-Matuyama, occurred around 780,000 years ago. A temporary reversal, the Laschamp event, occurred around 41,000 years ago. It lasted less than 1,000 years with the actual change of polarity lasting around 250 years.

In 2003, the so-called Halloween storm caused local electricity-grid blackouts in Sweden, required the rerouting of flights to avoid communication blackout and radiation risk, and disrupted satellites and communication systems. But this storm was minor in comparison with other storms of the recent past, such as the 1859 Carrington event, which caused aurorae as far south as the Caribbean.

The simple fact that we are “overdue” for a full reversal, and the fact that the Earth’s field is currently decreasing at a rate of 5% per century, have led to suggestions that the field may reverse within the next 2,000 years. But pinning down an exact date – at least for now – will be difficult.
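For a rough sense of scale, here is a back-of-envelope sketch (my own illustration, not something from Livermore and Mound) of how long the field would take to fall to the 10% level mentioned above if the quoted 5% per century decline simply carried on. It assumes two hypothetical decay models, a straight-line drop and a compounding drop, and the constant names are mine. Real core dynamics are nowhere near this simple, so treat the output as arithmetic, not a forecast.

```python
import math

# Hypothetical illustration only: assumes the ~5%-per-century decline quoted
# above continues unchanged, which the source does not claim. The 10% target
# borrows the statement that field strength "may fall to 10%" during a reversal.
DECLINE_PER_CENTURY = 0.05  # fraction lost per century
TARGET_FRACTION = 0.10      # fraction of present-day strength


def years_linear(decline=DECLINE_PER_CENTURY, target=TARGET_FRACTION):
    """Years to reach `target` if the field loses a fixed 5% of TODAY's value each century."""
    centuries = (1.0 - target) / decline
    return centuries * 100


def years_compound(decline=DECLINE_PER_CENTURY, target=TARGET_FRACTION):
    """Years to reach `target` if each century removes 5% of the REMAINING field."""
    centuries = math.log(target) / math.log(1.0 - decline)
    return centuries * 100


if __name__ == "__main__":
    print(f"straight-line decline: {years_linear():.0f} years")
    print(f"compounding decline:   {years_compound():.0f} years")
```

Under these assumptions the straight-line case gives about 1,800 years and the compounding case about 4,500 years, which shows how strongly any such extrapolation depends on the model chosen.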

Since 2014, Swarm, a trio of satellites from the European Space Agency, has allowed researchers to study changes building at the Earth’s core, where the magnetic field is generated.

Historically, Earth’s North and South magnetic poles have flipped every 200,000 or 300,000 years; right now, however, they haven’t flipped successfully for about 780,000 years. But the planet’s magnetic field is at long last showing signs of shifting.

The Earth’s magnetic field is generated within the liquid core of our planet, by the slow churning of molten iron. Like the atmosphere and oceans, the way in which it moves is governed by the laws of physics. We should therefore be able to predict the “weather of the core” by tracking this movement, just like we can predict real weather by looking at the atmosphere and ocean. A reversal can then be likened to a particular type of storm in the core, where the dynamics – and magnetic field – go haywire (at least for a short while), before settling down again.

The difficulties of predicting the weather beyond a few days are widely known, despite us living within and directly observing the atmosphere. Yet predicting the behaviour of the Earth’s core is a far more difficult prospect, principally because it is buried beneath 3,000km of rock such that our observations are scant and indirect. However, we are not completely blind: we know the major composition of the material inside the core and that it is liquid. A global network of ground-based observatories and orbiting satellites also measure how the magnetic field is changing, which gives us insight into how the liquid core is moving.

Solar Pole Swapping Puts Earth to Shame

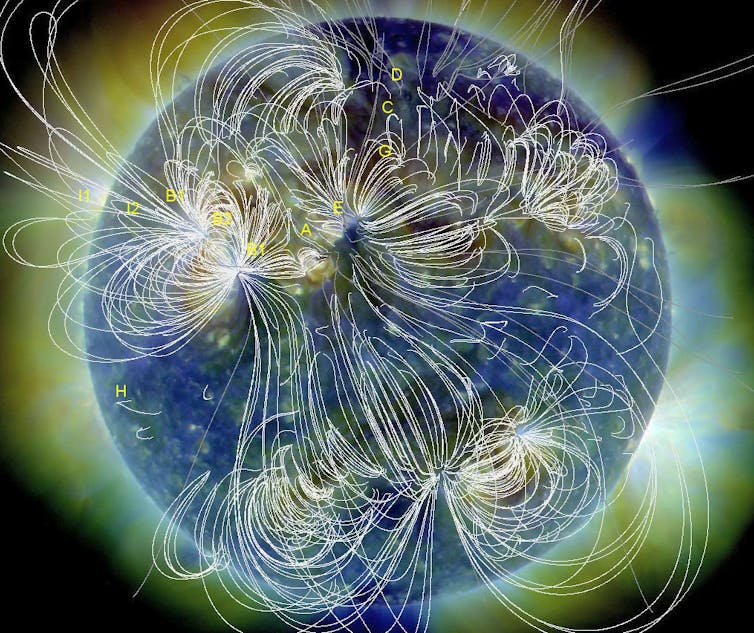

White lines show the magnetic field emanating from the sun’s surface. NASA

Background:

The sun as a whole also has a “global” magnetic field, oriented more or less north-south. So we can think of the sun as a large N-S magnet, like our Earth, but with smaller variously (but not randomly) oriented and continually evolving mini-magnets distributed over its photosphere (visible surface) and throughout its corona (extended atmosphere).

However, unlike our Earth, the sun’s large scale magnetic field flips over on a regular basis, roughly every 11 years. (Actually, Earth’s flips too, very irregularly. The last time was 780,000 years ago. But that’s another story.) Solar magnetic reversals occur close to solar maximum, when the number of sunspots is near its peak, though it is often a gradual process, taking up to 18 months.

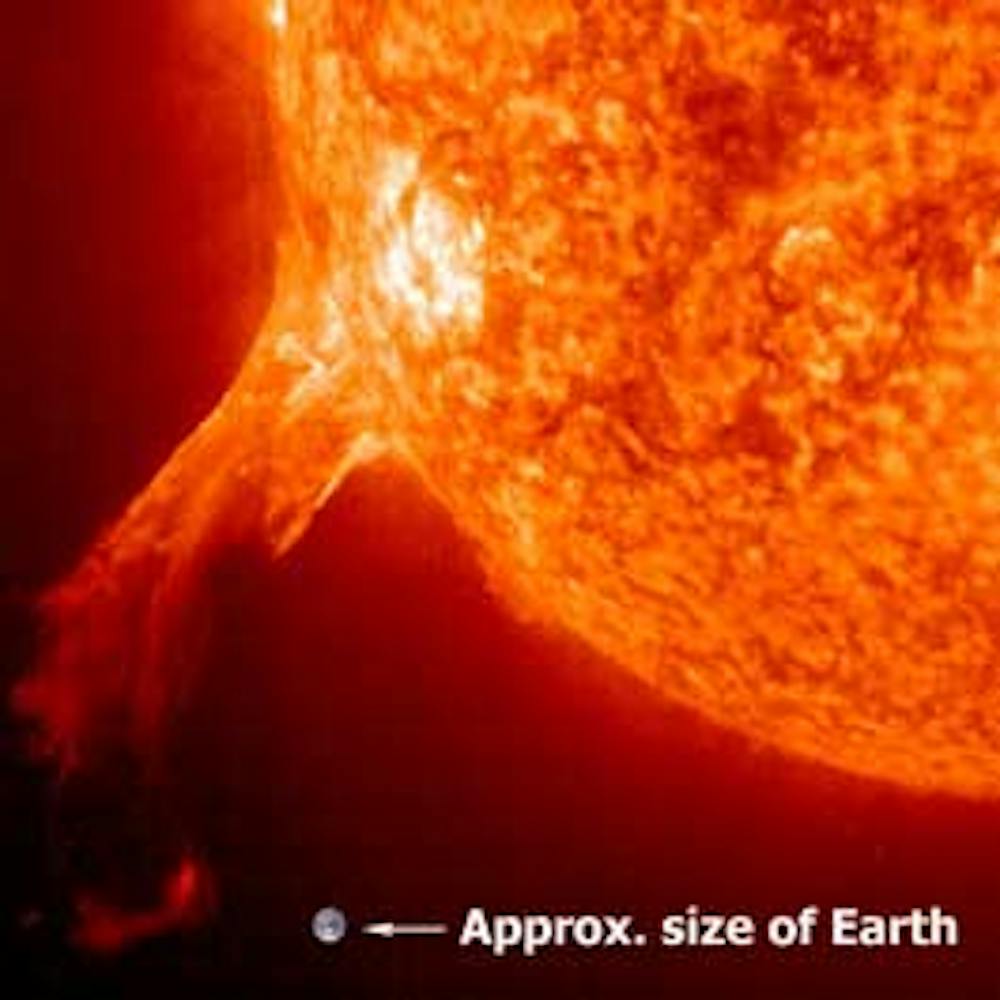

A solar flare (the white patch on the sun), and an erupting prominence reaching into space, are features of our active sun, and place the size of Earth in context. NASA

Paul Cally, solar physicist, Monash University (here)

About the Current Quiet Sun

Euan Mearns considers the implications at Energy Matters in “The Death of Sunspot Cycle 24, Huge Snow and Record Cold.” Excerpts below with my bolds.

8-meter snow depth in Chamonix, in the shadow of Mont Blanc in the French Alps, January 2018.

It looks like the snow in this drift is ~8m deep. And this is in the valley, not in the high basins where the snow fields that feed the glaciers lie. Now it’s obviously far too early to begin to draw any conclusions. But IF we get a run of 3 or 4 winters that dump this much snow, it is not inconceivable for me to imagine Alpine glaciers once again beginning to advance. I’m totally unsure how long it takes for pressure in the glacier source to feed through to an advance of the snout.

So what is going on? We’ve been told by climate scientists that snow would become a thing of the past. We’ve also been told that global warming might lead to more snow and less snow. And we’ve been told that warming might even lead to cooling. The competing theory to the CO2 greenhouse is that the Sun has a prominent role in modulating Earth’s climate that was so eloquently described by Phil Chapman in his post earlier this week. This theory simply observes a strong connection between a weak solar wind (that is expressed by low sunspot numbers) and cold, snowy winters in the N hemisphere. Uniquely, most of those who argue for a strong solar influence also acknowledge the overprint of anthropogenic CO2. The IPCC effectively sets the Sun to zero. The Sun is entering a grand solar minimum already christened the Eddy Minimum by the solar physics community.

Figure 2. It is crucial to look closely at the baseline, which in 2009 actually touched zero for months on end. This is not normal for the low point of the cycle. Figure 3 shows how feeble cycle 24 was compared with recent cycles. And it looks like it will have a duration of ~10 years (2009-2019), which is at the low end of the normal range of 9 to 14 years, with a mean of 11 years. The chart, adapted from SIDC, is dated 1 January 2018.

Mearns provides this summary of his article “Cosmic Rays, Magnetic Fields and Climate Change”:

Cosmic rays are deflected by BOTH the Sun’s and Earth’s magnetic fields and there may also be variations in the incident cosmic ray background. Cosmogenic isotope variations, therefore, do not only record variations in solar activity.

This has two significant implications for me: 1) when I have looked into cosmogenic isotopes in the past, I have been perplexed by the fact that in parts you see a wonderful coherence with “climate” (T≠climate) while elsewhere the relationship breaks down, and 2) my recent focus has been on variations in spectrum from the Sun (which may still be important), but to the extent that the Laschamp event (Earth’s magnetic field) may also be implicated in climate change, the emphasis needs to shift to cosmic rays themselves, i.e. what Svensmark has been saying for years.

For readers not familiar with Earth’s magnetic field: it periodically flips, but on a time-scale of millions of years. The N pole moves to the S pole, and in the process the magnetic field strength collapses, as evidenced by “Figure 7” in Phil’s post. The last time this happened was during the Laschamp event ~41,000 years ago. There was a full but short-lived reversal, and the Earth’s magnetic field did collapse.

Now here’s the main point. We know that the glacial cycles beat to a 41,000-year rhythm, the period of the obliquity (tilt) of Earth’s axis. The magnetic field originates in Earth’s liquid, mainly iron, core. This raises the question: can changes in obliquity affect the geodynamo? You have to read what Phil has written closely:

Since we absolutely know (don’t we?) that the interglacial to glacial transitions of the current ice age are caused by Milankovitch forcing, the usual interpretation is that there must be some unknown mechanism by which changes in the orbit of the Earth and/or the tilt of the polar axis affect the geodynamo, triggering the excursions.

For decades to centuries, Earth’s N magnetic pole was pretty well fixed to a point in northern Canada. It has not been much in the news, but it has recently begun to migrate, quite rapidly.

For a more complete description of solar effects on Earth’s climate, see The cosmoclimatology theory.

Earth’s magnetic field, in blue, shields the planet from the solar wind. NASA
