The people’s instincts are right, though they have been kept in the dark about this “pandemic” that isn’t. Responsible citizens are starting to act out their outrage at being victimized by a medical-industrial complex (to update Eisenhower’s warning of decades ago). The truth is, governments are not justified in taking away inalienable rights to life, liberty and the pursuit of happiness. There are several layers of disinformation involved in scaring the public. This post digs into the CV tests, and why the results don’t mean what the media and officials claim.

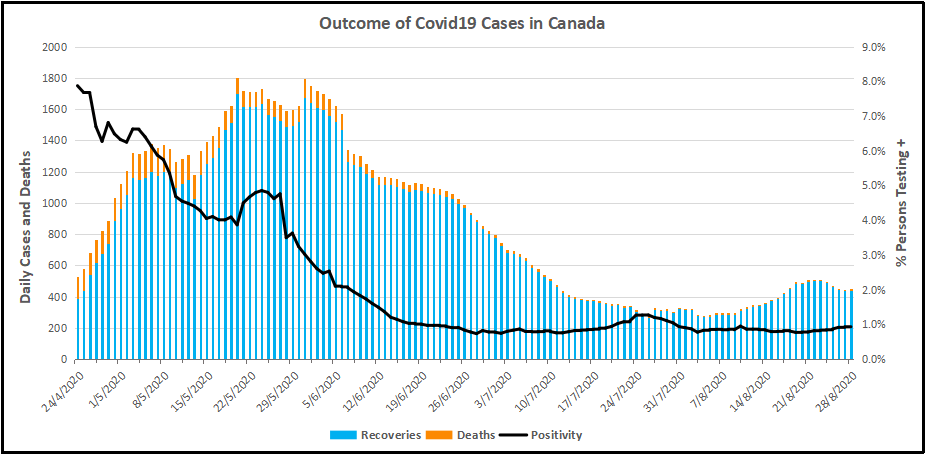

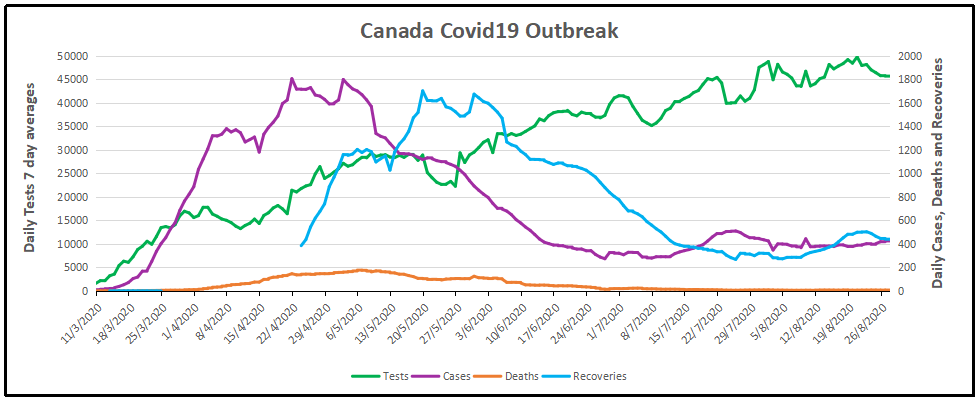

For months now, I have been tracking the progress of the CV outbreak in Canada. A previous post, excerpted further below, goes into the details of extracting data on tests, persons testing positive (termed “cases” without regard for illness symptoms) and deaths after testing positive. Currently, the contagion looks like this.

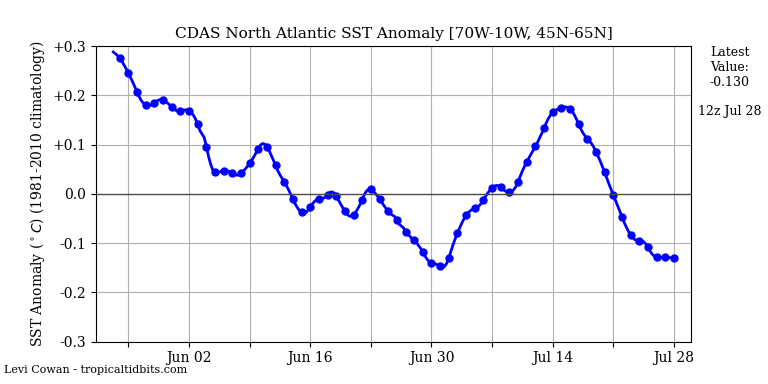

The graph shows that deaths are less than 5 a day, compared to a daily death rate of 906 in Canada from all causes. Also significant is the positivity ratio: the % of persons testing positive out of all persons tested each day. That % has been fairly steady for months now: 1% positive means 99% of people are not infected. And this is despite more than doubling the rate of testing.

But what does testing positive actually mean? Herein lies more truth that has been hidden from the public for the sake of an agenda to control free movement and activity. Background context comes from Could Rapid Coronavirus Testing Help Life Return To Normal?, an interview at On Point with Dr. Michael Mina. Excerpts in italics with my bolds. H/T Kip Hansen

A sign displays a new rapid coronavirus test on the new Abbott ID Now machine at a ProHEALTH center in Brooklyn on August 27, 2020 in New York City. (Spencer Platt/Getty Images)

Dr. Michael Mina:

COVID tests can actually be put onto a piece of paper, very much like a pregnancy test. In fact, it’s almost exactly like a pregnancy test. But instead of looking for the hormones that tell if somebody is pregnant, it looks for the virus proteins that are part of the SARS-CoV-2 virus. And it would be very simple: You’d either swab the front of your nose or you’d take some saliva from under your tongue, for example, and put it onto one of these paper strips, essentially. And if you see a line, it means you’re positive. And if you see no line, it means you are negative, at least for having a high viral load that could be transmissible to other people.

An antigen is one of the proteins in the virus. And so unlike the PCR test, which is what most people who have received a test today have generally received. Those types of tests look for the genome of the virus, the RNA, and you could think of RNA the same way that humans have DNA. This virus has RNA. But instead of looking for RNA like the PCR test, these antigen tests look for pieces of the protein. It would be like if I wanted a test to tell me, you know, that somebody was an individual, it would actually look for features like their eyes or their nose. And in this case, it is looking for different parts of the virus. In general, the spike protein or the nucleocapsid, these are two parts of the virus.

The reason that these antigen tests are going to be a little bit less sensitive to detect the virus molecules is because there’s no step that we call an amplification step. One of the things that makes the PCR test that looks for the virus RNA so powerful is that it can take just one molecule, which the sensor on the machine might not be able to detect readily, but then it amplifies that molecule millions and millions of times so that the sensor can see it. These antigen tests, because they’re so simple and so easy to use and just happen on a piece of paper, they don’t have that amplification step right now. And so they require a larger amount of virus in order to be able to detect it. And that’s why I like to think of these types of tests having their primary advantage to detect people with enough virus that they might be transmitting or transmissible to other people.

The PCR test provides a simple yes/no answer to the question of whether a patient is infected.

Source: Covid Confusion On PCR Testing: Maybe Most Of Those Positives Are Negatives.

Similar PCR tests for other viruses nearly always offer some measure of the amount of virus. But yes/no isn’t good enough, Mina added. It’s the amount of virus that should dictate the infected patient’s next steps. “It’s really irresponsible, I think, to [ignore this],” Dr. Mina said, of how contagious an infected patient may be.

“We’ve been using one type of data for everything,” Mina said. “For [diagnosing patients], for public health, and for policy decision-making.”

The PCR test amplifies genetic matter from the virus in cycles; the fewer cycles required, the greater the amount of virus, or viral load, in the sample. The greater the viral load, the more likely the patient is to be contagious.

This number of amplification cycles needed to find the virus, called the cycle threshold, is never included in the results sent to doctors and coronavirus patients, although if it was, it could give them an idea of how infectious the patients are.

One solution would be to adjust the cycle threshold used now to decide that a patient is infected. Most tests set the limit at 40, a few at 37. This means that you are positive for the coronavirus if the test process required up to 40 cycles, or 37, to detect the virus.

Any test with a cycle threshold above 35 is too sensitive, Juliet Morrison, a virologist at the University of California, Riverside told the New York Times. “I’m shocked that people would think that 40 could represent a positive,” she said.

A more reasonable cutoff would be 30 to 35, she added. Dr. Mina said he would set the figure at 30, or even less.

Another solution, researchers agree, is even more widespread use of Rapid Diagnostic Tests (RDTs), which are much less sensitive and more likely to identify only patients with high levels of virus who are a transmission risk.

Comment: In other words, when they analyzed the tests that also reported cycle threshold (CT), they found that 85 to 90 percent were above 30. According to Dr. Mina, a CT of 37 is about 100 times too sensitive (7 cycles too many, 2^7 = 128) and a CT of 40 is about 1,000 times too sensitive (10 cycles too many, 2^10 = 1024). Based on their sample of tests that also reported CT, as few as 10 percent of people with positive PCR tests actually have an active COVID-19 infection, which is a lot less than reported.
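The powers of two in the comment above can be checked with a few lines of Python. This is only a sketch: it assumes each PCR cycle exactly doubles the target material (an idealization), and it takes Dr. Mina's suggested cutoff of 30 as the reference point. The function name `excess_amplification` is my own label, not anything from the interview.

```python
def excess_amplification(ct_cutoff: int, reference_ct: int = 30) -> int:
    """Fold increase in amplification from running cycles beyond the reference.

    Assumes ideal doubling per cycle; real reactions are somewhat less efficient.
    """
    if ct_cutoff < reference_ct:
        raise ValueError("cutoff must not be below the reference cycle count")
    return 2 ** (ct_cutoff - reference_ct)


# Cutoffs mentioned in the text: 37 and 40, compared with a reference of 30.
for cutoff in (37, 40):
    print(cutoff, excess_amplification(cutoff))
```

The "100 times" and "1,000 times" figures in the comment are these values rounded down to the nearest power of ten.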

Here is a graph showing how this applies to Canada.

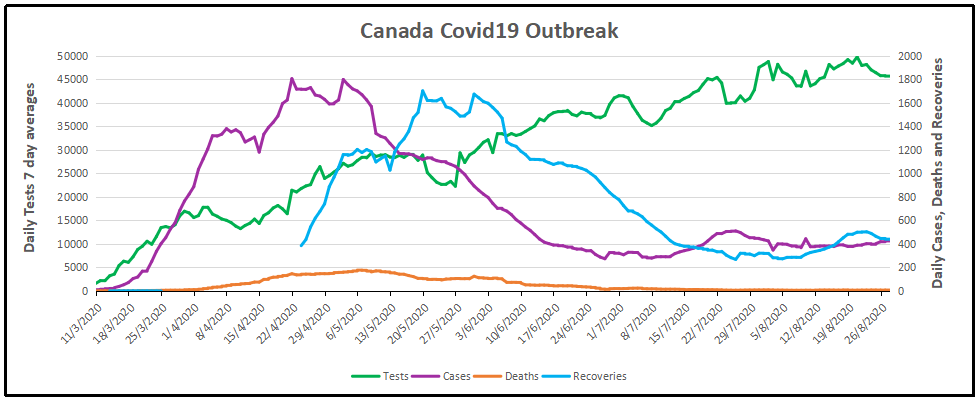

It is evident that increased testing has resulted in more positives, while the positivity rate is unchanged. Doubling the tests has doubled the positives, up from 300 a day to nearly 600 a day presently. Note these are PCR results. And the discussion above suggests that the number of persons with an active infectious viral load is likely 10% of those reported positive: in other words, up from 30 a day to 60 a day. And in the graph below, the total of actual cases in Canada is likely on the order of 13,000 over the last 7 months, an average of 62 cases a day.

WuFlu Exposes a Fundamental Flaw in US Health System

Dr. Mina goes on to explain what went wrong in US response to WuFlu:

In the U.S., we have a major focus on clinical medicine, and we have undervalued and underfunded the whole concept of public health for a very long time. We saw an example of this for, for example, when we tried to get the state laboratories across the country to be able to perform the PCR tests back in March, February and March, we very quickly realized that our public health infrastructure in this country just wasn’t up to the task. We had very few labs that were really able to do enough testing to just meet the clinical demands. And so such a reduced focus on public health for so long has led to an ecosystem where our regulatory agencies, this being primarily the FDA, has a mandate to approve clinical medical diagnostic tests. But there’s actually no regulatory pathway that is available or exists (and in many ways, we don’t even have a language for it) for a test whose primary purpose is one of public health and not personal medical health.

That’s really caused a problem. And a lot of times, it’s interesting if you think about the United States, every single test that we get, with the exception maybe of a pregnancy test, has to go through a physician. And so that’s a symptom of a country that has focused, and a society really, that has focused so heavily on the medical industrial complex. And I’m part of that as a physician. But I also am part of the public health complex as an epidemiologist. And I see that sometimes these are at odds with each other, medicine and public health. And this is an example where because all of our regulatory infrastructure is so focused on medical devices… If you’re a public health person, you can actually have a huge amount of leeway in how your tests are working and still be able to get epidemics under control. And so there’s a real tension here between the regulations that would be required for these types of tests versus a medical diagnostic test.

Footnote: I don’t think the Chinese leaders were focusing on the systemic weakness Dr. Mina mentions. But you do have to bow to the inscrutable cleverness of the Chinese Communists releasing WuFlu as a means to set internal turmoil within democratic capitalist societies. On one side are profit-seeking Big Pharma, aided and abetted by Big Media using fear to attract audiences for advertising revenues. The panicked public demands protection which clueless government provides by shutting down the service and manufacturing industries, as well as throwing money around and taking on enormous debt. The world just became China’s oyster.

Background from Previous Post: Covid Burnout in Canada August 28

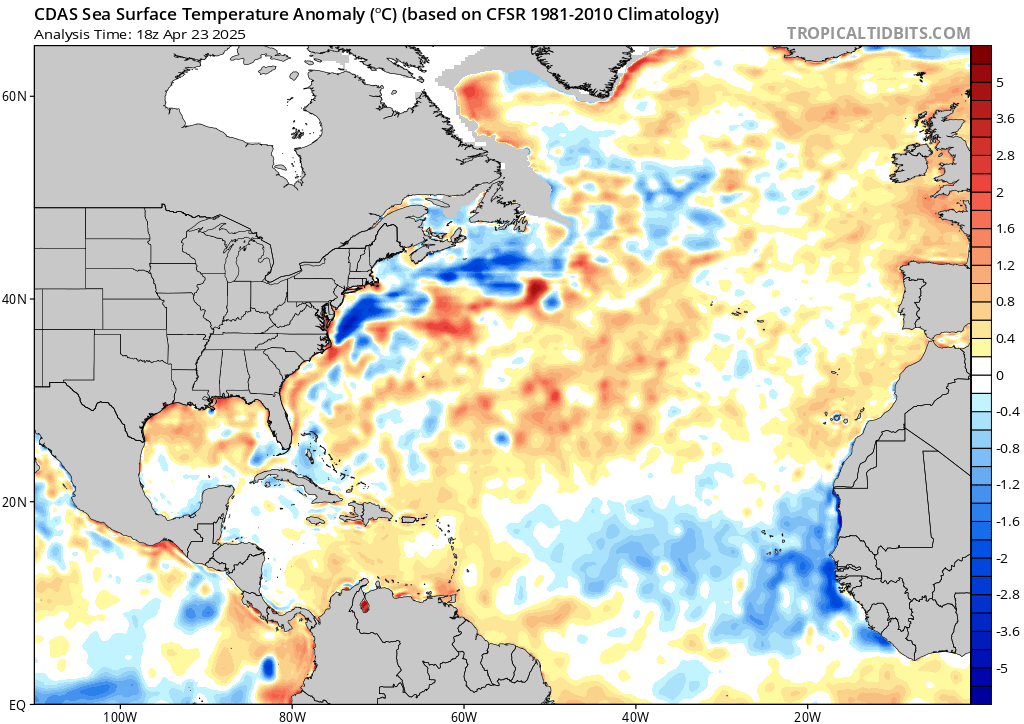

The map shows that in Canada 9108 deaths have been attributed to Covid19, meaning people who died having tested positive for SARS CV2 virus. This number accumulated over a period of 210 days starting January 31. The daily death rate reached a peak of 177 on May 6, 2020, and is down to 6 as of yesterday. More details on this below, but first the summary picture. (Note: 2019 is the latest demographic report)

| | Canada Pop | Ann Deaths | Daily Deaths | Risk per Person |
|---|---|---|---|---|
| 2019 | 37589262 | 330786 | 906 | 0.8800% |
| Covid 2020 | 37589262 | 9108 | 43 | 0.0242% |

Over the epidemic months, the average Covid daily death rate amounted to about 5% of the All Causes death rate. During this time a Canadian had an average risk of roughly 1 in 4,100 (0.0242%) of dying with SARS CV2 versus a 1 in 114 chance of dying in a year regardless of that infection. As shown later below, the risk varied greatly with age, much lower for younger, healthier people.
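For readers who want to check the summary table, here is a minimal sketch of the arithmetic in Python. The inputs are the figures quoted above (population, 2019 annual deaths, 9108 Covid deaths over 210 days). The helper name `summarize` is just an illustrative label, and the use of 365 days for the 2019 row is my assumption.

```python
POPULATION = 37_589_262
ANNUAL_DEATHS_2019 = 330_786
COVID_DEATHS = 9_108
EPIDEMIC_DAYS = 210  # January 31 through August 28, 2020


def summarize(deaths: int, population: int, days: int) -> dict:
    """Return deaths per day, risk per person, and the '1 in N' equivalent."""
    risk = deaths / population
    return {
        "daily_deaths": deaths / days,
        "risk_percent": 100 * risk,
        "one_in": 1 / risk,
    }


all_causes = summarize(ANNUAL_DEATHS_2019, POPULATION, 365)
covid = summarize(COVID_DEATHS, POPULATION, EPIDEMIC_DAYS)

# Share of the all-causes daily death rate attributed to Covid.
covid_share = covid["daily_deaths"] / all_causes["daily_deaths"]

print(all_causes)
print(covid)
print(f"Covid share of daily deaths: {covid_share:.1%}")
```

With these inputs the Covid row works out to roughly 1 in 4,100 and the all-causes row to about 1 in 114.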

Background Updated from Previous Post

In reporting on Covid19 pandemic, governments have provided information intended to frighten the public into compliance with orders constraining freedom of movement and activity. For example, the above map of the Canadian experience is all cumulative, and the curve will continue upward as long as cases can be found and deaths attributed. As shown below, we can work around this myopia by calculating the daily differentials, and then averaging newly reported cases and deaths by seven days to smooth out lumps in the data processing by institutions.
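The workaround just described (daily differentials, then a seven-day average) can be sketched in plain Python. This is an illustration rather than the exact spreadsheet procedure used for the graphs. The function names and the sample series are made up for the example.

```python
def daily_new(cumulative: list[float]) -> list[float]:
    """Convert a cumulative series into day-over-day increases."""
    return [today - yesterday for yesterday, today in zip(cumulative, cumulative[1:])]


def trailing_mean(values: list[float], window: int = 7) -> list[float]:
    """Average each value with the (window - 1) values before it.

    The first result corresponds to the window ending on index window - 1.
    """
    return [
        sum(values[end - window + 1 : end + 1]) / window
        for end in range(window - 1, len(values))
    ]


# Made-up cumulative counts, purely to show the mechanics.
cumulative_cases = [0, 1, 3, 6, 10, 15, 21, 28, 36]
new_cases = daily_new(cumulative_cases)
smoothed = trailing_mean(new_cases)
print(new_cases)
print(smoothed)
```

A trailing window is used here so that each smoothed value is the average of the seven days ending on the stated date, matching how the graphs are described.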

A second major deficiency is lack of reporting of recoveries, including people infected and not requiring hospitalization or, in many cases, without professional diagnosis or treatment. The only recoveries presently to be found are limited statistics on patients released from hospital. The only way to get at the scale of recoveries is to subtract deaths from cases, considering survivors to be in recovery or cured. Comparing such numbers involves the delay between infection, symptoms and death. Herein lies another issue of terminology: a positive test for the SARS CV2 virus is reported as a case of the disease COVID19. In fact, an unknown number of people have been infected without symptoms, and many with very mild discomfort.

August 7 in the UK it was reported (here) that around 10% of coronavirus deaths recorded in England – almost 4,200 – could be wiped from official records due to an error in counting. Last month, Health Secretary Matt Hancock ordered a review into the way the daily death count was calculated in England citing a possible ‘statistical flaw’. Academics found that Public Health England’s statistics included everyone who had died after testing positive – even if the death occurred naturally or in a freak accident, and after the person had recovered from the virus. Numbers will now be reconfigured, counting deaths if a person died within 28 days of testing positive much like Scotland and Northern Ireland…

Professor Heneghan, director of the Centre for Evidence-Based Medicine at Oxford University, who first noticed the error, told the Sun:

‘It is a sensible decision. There is no point attributing deaths to Covid-19 28 days after infection…

For this discussion let’s assume that anyone reported as dying from COVID19 tested positive for the virus at some point prior. From the reasoning above let us assume that 28 days after testing positive for the virus, survivors can be considered recoveries.

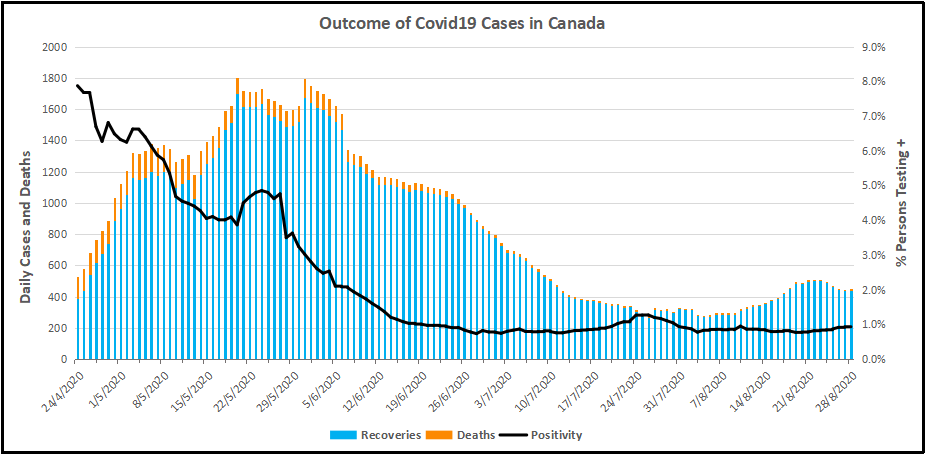

Recoveries are calculated as cases minus deaths with a lag of 28 days. Daily cases and deaths are averages of the seven days ending on the stated date. Recoveries are # of cases from 28 days earlier minus # of daily deaths on the stated date. Since both testing and reports of Covid deaths were sketchy in the beginning, this graph begins with daily deaths as of April 24, 2020 compared to cases reported on March 27, 2020.
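The lagged recovery estimate can be written out as a short Python sketch. It assumes two equal-length lists of seven-day averages indexed by day. The function name and the sample numbers are hypothetical, chosen only to show the alignment.

```python
def estimated_recoveries(
    avg_cases: list[float], avg_deaths: list[float], lag_days: int = 28
) -> list[float]:
    """Cases from lag_days earlier minus deaths on the stated date.

    Element i of the result belongs to day (i + lag_days) of the inputs.
    """
    if len(avg_cases) != len(avg_deaths):
        raise ValueError("series must cover the same days")
    # zip stops at the shorter sequence, pairing cases[i] with deaths[i + lag_days].
    return [cases - deaths for cases, deaths in zip(avg_cases, avg_deaths[lag_days:])]


# Hypothetical flat series: 100 cases a day and 5 deaths a day for 30 days.
cases = [100.0] * 30
deaths = [5.0] * 30
print(estimated_recoveries(cases, deaths))
```

Only days with a case count 28 days earlier produce an estimate, so the output is shorter than the inputs by the lag.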

The line shows the Positivity metric for Canada starting at nearly 8% for new cases April 24, 2020. That is, for the 7 day period ending April 24, there were a daily average of 21,772 tests and 1715 new cases reported. Since then the rate of new cases has dropped down, now holding steady at ~1% since mid-June. Yesterday, the daily average number of tests was 45,897 with 427 new cases. So despite more than doubling the testing, the positivity rate is not climbing. Another view of the data is shown below.
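The positivity figures above follow directly from the quoted daily averages. Here is a small check in Python, where the function name is simply my label for the ratio.

```python
def positivity(new_cases: float, tests: float) -> float:
    """Percent of tests that came back positive."""
    if tests <= 0:
        raise ValueError("tests must be positive")
    return 100.0 * new_cases / tests


# Seven-day daily averages quoted in the text.
april_24 = positivity(1715, 21_772)
latest = positivity(427, 45_897)
testing_growth = 45_897 / 21_772

print(f"April 24: {april_24:.1f}%  latest: {latest:.1f}%  tests x{testing_growth:.2f}")
```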

The scale of testing has increased and now averages over 45,000 a day, while positive tests (cases) are hovering at 1% positivity. The shape of the recovery curve resembles the case curve lagged by 28 days, since death rates are a small portion of cases. The recovery rate has grown from 83% to 99%, holding steady over the last 2 weeks, so that recoveries exceed new positives. This approximation surely understates the number of those infected with SARS CV2 who are healthy afterwards, since antibody studies show infection rates multiples higher than confirmed positive tests (8 times higher in Canada). In absolute terms, cases are now down to 427 a day and deaths 6 a day, while estimates of recoveries are 437 a day.

The key numbers:

99% of those tested are not infected with SARS CV2.

99% of those who are infected recover without dying.

Summary of Canada Covid Epidemic

It took a lot of work, but I was able to produce something akin to the Dutch advice to their citizens.

The media and governmental reports focus on total accumulated numbers which are big enough to scare people to do as they are told. In the absence of contextual comparisons, citizens have difficulty answering the main (perhaps only) question on their minds: What are my chances of catching Covid19 and dying from it?

A previous post reported that the Netherlands parliament was provided with the type of guidance everyone wants to see.

For Canadians, the most similar analysis is this one from the Daily Epidemiology Update:

The table presents only those cases with a full clinical documentation, which included some 2194 deaths compared to the 5842 total reported. The numbers show that under 60 years old, few adults and almost no children have anything to fear.

Update May 20, 2020

It is really quite difficult to find cases and deaths broken down by age groups. For Canadian national statistics, I resorted to a report from Ontario to get the age distributions, since that province provides 69% of the cases outside of Quebec and 87% of the deaths. Applying those proportions across Canada results in this table. For Canada as a whole nation:

| Age | Risk of Test + | Risk of Death | Population per 1 CV death |
|---|---|---|---|
| <20 | 0.05% | None | NA |
| 20-39 | 0.20% | 0.000% | 431817 |
| 40-59 | 0.25% | 0.002% | 42273 |
| 60-79 | 0.20% | 0.020% | 4984 |
| 80+ | 0.76% | 0.251% | 398 |

In the worst case, if you are a Canadian aged more than 80 years, you have a 1 in 400 chance of dying from Covid19. If you are 60 to 80 years old, your odds are 1 in 5000. Younger than that, it’s only slightly higher than winning (or in this case, losing) the lottery.

As noted above, Quebec provides the bulk of cases and deaths in Canada, and also reports age distribution more precisely. The numbers in the table below show risks for Quebecers.
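The approach behind the national table (spread a national total across age groups using one province's age proportions, then express deaths as "population per 1 death") can be sketched as follows. All numbers in the example are made-up placeholders, not the Ontario or Canadian figures, and the function names are hypothetical.

```python
def allocate_by_age(national_total: float, age_shares: dict[str, float]) -> dict[str, float]:
    """Split a national total across age groups in proportion to the given shares."""
    total_share = sum(age_shares.values())
    return {
        age: national_total * share / total_share
        for age, share in age_shares.items()
    }


def population_per_death(population: float, deaths: float):
    """Return N such that the risk is 1 in N, or None when there are no deaths."""
    return None if deaths == 0 else population / deaths


# Placeholder shares and totals, purely to show the mechanics.
shares = {"<60": 1, "60+": 3}
deaths_by_age = allocate_by_age(400, shares)
print(deaths_by_age)
print(population_per_death(1000, deaths_by_age["<60"]))
```

Returning `None` for a group with no deaths mirrors the "NA" entries in the table, since a "1 in N" figure is undefined there.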

| Age | Risk of Test + | Risk of Death | Population per 1 CV death |
|---|---|---|---|
| 0-9 yrs | 0.13% | 0 | NA |
| 10-19 yrs | 0.21% | 0 | NA |
| 20-29 yrs | 0.50% | 0.000% | 289,647 |
| 30-39 | 0.51% | 0.001% | 152,009 |
| 40-49 years | 0.63% | 0.001% | 73,342 |
| 50-59 years | 0.53% | 0.005% | 21,087 |
| 60-69 years | 0.37% | 0.021% | 4,778 |
| 70-79 years | 0.52% | 0.094% | 1,069 |
| 80-89 | 1.78% | 0.469% | 213 |
| 90 + | 5.19% | 1.608% | 62 |

While some of the risk factors are higher in the viral hotspot of Quebec, it is still the case that under 80 years of age, your risk of dying from Covid 19 is lower than 1 in 1000, and much lower the younger you are.

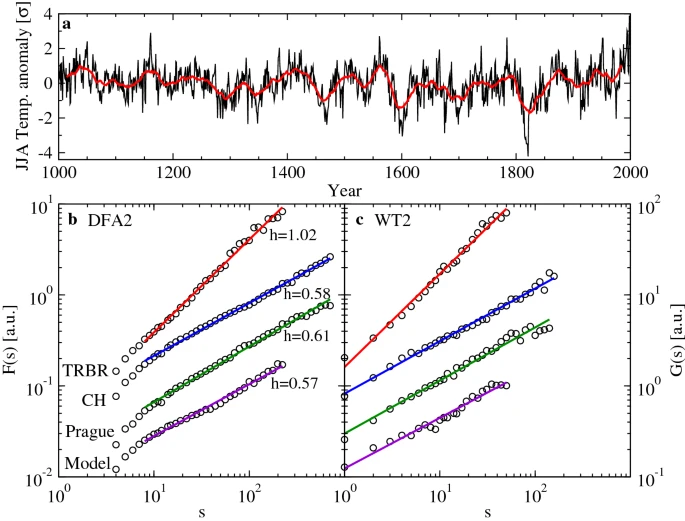

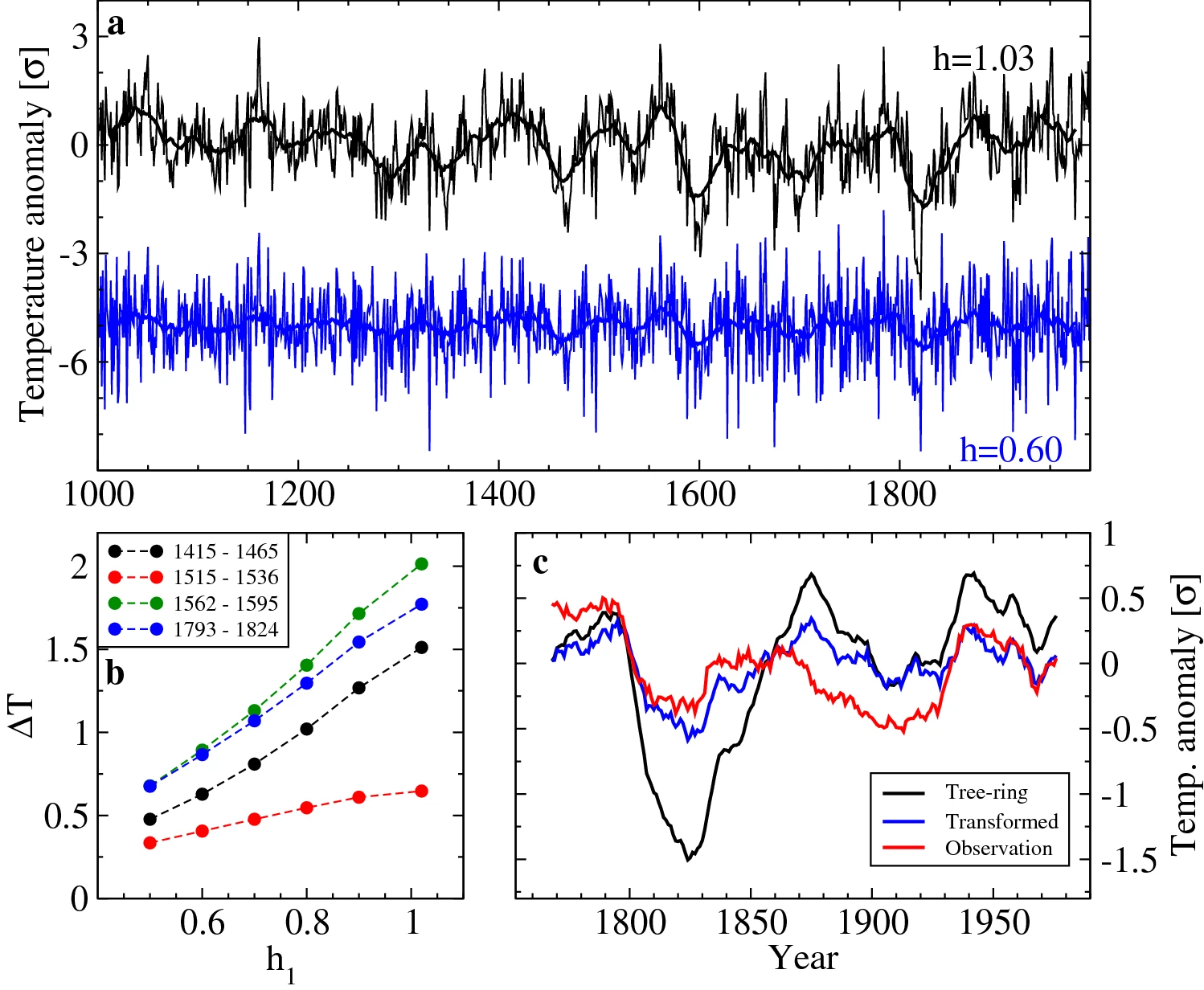

The paper is Setting the tree-ring record straight by Josef Ludescher, Armin Bunde, Ulf Büntgen & Hans Joachim Schellnhuber. The title is extremely informative, since the trick is to flatten the tree-ring proxies, removing any warm periods to compare with the present. Excerpts below with my bolds.