A thorough examination of the trials and tribulations of the mRNA shots, promoted to and sometimes forced upon people, comes from a dream team of experts in the field. According to Liberty Counsel, the authors include “biologist and nutritional epidemiologist M. Nathaniel Mead; research scientist Stephanie Seneff, Ph.D.; biostatistician and epidemiologist Russ Wolfinger, Ph.D.; immunologist and biochemist Dr. Jessica Rose; biostatistician and epidemiologist Kris Denhaerynck, Ph.D.; Vaccine Safety Research Foundation Executive Director Steve Kirsch; and cardiologist, internist, and epidemiologist Dr. Peter McCullough.”

The peer-reviewed paper, published in Cureus, is COVID-19 mRNA Vaccines: Lessons Learned from the Registrational Trials and Global Vaccination Campaign. Excerpts are in italics, with my bolds and added images.

Abstract

Our understanding of COVID-19 vaccinations and their impact on health and mortality has evolved substantially since the first vaccine rollouts. Published reports from the original randomized phase 3 trials concluded that the COVID-19 mRNA vaccines could greatly reduce COVID-19 symptoms. In the interim, problems with the methods, execution, and reporting of these pivotal trials have emerged. Re-analysis of the Pfizer trial data identified statistically significant increases in serious adverse events (SAEs) in the vaccine group. Numerous SAEs were identified following the Emergency Use Authorization (EUA), including death, cancer, cardiac events, and various autoimmune, hematological, reproductive, and neurological disorders.

Furthermore, these products never underwent adequate safety and toxicological testing in accordance with previously established scientific standards. Among the other major topics addressed in this narrative review are:

♦ the published analyses of serious harms to humans;

♦ quality control issues and process-related impurities;

♦ mechanisms underlying adverse events (AEs);

♦ the immunologic basis for vaccine inefficacy; and

♦ concerning mortality trends based on the registrational trial data.

The risk-benefit imbalance substantiated by the evidence to date contraindicates further booster injections and suggests that, at a minimum, the mRNA injections should be removed from the childhood immunization program until proper safety and toxicological studies are conducted. Federal agency approval of the COVID-19 mRNA vaccines on a blanket-coverage population-wide basis had no support from an honest assessment of all relevant registrational data and commensurate consideration of risks versus benefits.

Given the extensive, well-documented SAEs and unacceptably high harm-to-reward ratio, we urge governments to endorse a global moratorium on the modified mRNA products until all relevant questions pertaining to causality, residual DNA, and aberrant protein production are answered.

Background

Political and financial incentives may have played a key role in undermining the scientific evaluation process leading up to the EUA. Lalani and colleagues documented the major investments made by the US government well before authorization [18]. Even prior to the pandemic, the US National Institutes of Health had invested $116 million (35%) in mRNA vaccine technology, the Biomedical Advanced Research and Development Authority (BARDA) $148 million (44%), and the Department of Defense (DOD) $72 million (21%), for a combined $336 million. BARDA and the DOD also collaborated closely in the co-development of Moderna’s mRNA vaccine, dedicating over $18 billion, which included guaranteed vaccine purchases [18]. This entailed pre-purchasing hundreds of millions of mRNA vaccine doses, alongside direct financial support for the clinical trials and the expansion of Moderna’s manufacturing capabilities. The public funding provided for developing these products through Operation Warp Speed surpassed investments in any prior public initiative [19]. Once the pandemic began, US public funds were allocated as follows: $29.2 billion (92%) to the purchase of COVID-19 mRNA products, $2.2 billion (7%) to supporting clinical trials, and $108 million (less than 1%) to manufacturing and basic research [18]. This profuse spending of taxpayer dollars continued throughout the pandemic: BARDA spent another $40 billion in 2021 alone [20].

Using US taxpayer money to purchase so many doses in advance would suggest that, prior to the EUA process, US federal agencies were strongly biased toward successful outcomes for the registrational trials. Moreover, it is reasonable to surmise that such extensive vested interests could have influenced the decision to prematurely halt the registrational trials. Unblinding essentially nullified the “placebo-controlled” element of the trials, eliminating the control group and thus undermining the ability to objectively assess the mRNA vaccines’ safety profile and potential SAEs. Thus, while the accelerated authorization showcased the government’s commitment to providing these novel products, it also raised concerns among many experts regarding risk-benefit issues and effectively eliminated the opportunity to learn about the potential long-range harms of the mRNA inoculations. The political pressures to rapidly deliver a solution may have compromised the thoroughness and integrity of the scientific evaluation process while downplaying and obfuscating scientific concerns about the potential risks associated with mRNA technology.

Concerns about inadequate safety testing extend beyond the usual regulatory approval standards and practices. Although we employ the terms “vaccine” and “vaccination” throughout this paper, the COVID-19 mRNA products are also accurately termed gene therapy products (GTPs) because, in essence, this was a case of GTP technology being applied to vaccination [21]. European regulations mandate the inclusion of an antigen in vaccines, but these immunogenic proteins are not intrinsic to the mRNA vaccines [22]. The GTP vaccine platform has been studied for over 30 years as an experimental cancer treatment, with the terms gene therapy and mRNA vaccination often used interchangeably [23]. This is due to the mRNA products’ specific mode of action: synthetic mRNA strands, encapsulated within a protective lipid nanoparticle (LNP) vehicle, are translated within the cells into a specific protein that subsequently stimulates the immune system against a specific disease. Another accurate label would be prodrugs because these products stimulate the recipient’s body to manufacture the target protein [24]. As there were no specific regulations at the time of the rapid approval process, regulatory agencies quickly “adapted” the products, generalized the definition of “vaccine” to accommodate them, and then granted them EUA, the first time such products had ever been authorized against a viral disease. However, the rationale for regulating these products as vaccines and excluding them from regulatory oversight as GTPs lacks both scientific and ethical justification [21]. (Note: Throughout this review, the terms vaccines and vaccinations will be used interchangeably with injections, inoculations, biologicals, or simply, products.)

Due to the GTPs’ reclassification as vaccines, none of their components have been thoroughly evaluated for safety. The main concern, in a nutshell, is that the COVID-19 mRNA products may transform body cells into viral protein factories that have no off-switch (i.e., no built-in mechanism to stop or regulate such production), with the spike protein (S-protein) being generated for prolonged periods, causing chronic, systemic inflammation and immune dysfunction [25,26]. This S-protein is the common denominator between the coronavirus and the vaccine, which helps to explain the frequent overlap in AEs generated by both the infection and the inoculation [25]. The vaccine-induced S-protein is more immunogenic than its viral counterpart; and yet, the increased antibody production is also associated with more severe immunopathology and other adverse effects [27]. The Pfizer and Moderna mRNA products contain mRNA with two modified codons (introducing two proline substitutions) that yield a version of the S-protein stabilized in its prefusion state [28]. The mRNA is also nucleoside-modified (uridine is replaced with N1-methylpseudouridine), a technology intended to extend the synthetic mRNA’s persistence in the body. When the S-protein enters the bloodstream and disseminates systemically, it may become a contributing factor to diverse AEs in susceptible individuals [25].

Revisiting the registrational trials

Although randomized controlled trials (RCTs) are viewed as the gold standard for testing the safety and efficacy of medical products (because they minimize bias), trials of limited scope can readily obscure the true safety and efficacy issues with respect to different segments of the population. In this case, the trials excluded key sub-groups, notably children, pregnant women, frail elderly persons, and immuno-compromised individuals, as well as those with cancer, autoimmune disease, and other chronic inflammatory conditions [45]. Whereas the founding trials did not recruit individuals with these comorbidities, many recipients in the rollouts had such underlying conditions. Rather than assess these well-known safety and comorbid risk concerns, the focus was narrowly placed on the potential for inflammatory lung injury as had been seen in COVID-19 patients and, many years earlier, in immunized animal models infected with SARS-CoV [46]. We are now beginning to recognize the folly of this narrow safety focus, as millions of severe and life-threatening events associated with the COVID-19 vaccines continue to be documented in the medical literature [47-51].

What did the pivotal trials reveal about all-cause mortality (ACM)? After carefully analyzing ACM in the Pfizer and Moderna trials, Benn and colleagues found 61 deaths in total (31 in the vaccine arms, 30 in the placebo arms) and a mortality relative risk (RR) of 1.03 (95%CI 0.63-1.71) for vaccine versus placebo [52]. These findings can be interpreted as “no significant difference”, that is, no gold-standard evidence that these mRNA vaccines reduce mortality. The lack of a significant difference in deaths between the study arms is noteworthy. The true mortality impact remains unknown in this context, and this fact alone is relevant: it would be preferable to take a vaccine with good trial evidence of reduced mortality than one whose trial evidence does not convincingly show improved survival [53]. Similarly, a subsequent analysis of the Pfizer trial data concluded that mortality rates were comparable between vaccinated and placebo groups during the initial 20-week period of the randomized trial [54]. The fact that the mRNA vaccinations did not reduce overall mortality implies that, if the injections were indeed averting deaths specifically attributable to COVID-19, any such reduction might be offset by an increase in mortality from other causes, such as SAEs.
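As a minimal sketch of how a mortality relative risk (RR) and its 95% confidence interval follow from death counts like the 31 vs. 30 reported by Benn and colleagues, the snippet below applies the standard log-scale (Katz) method. It assumes equally sized vaccine and placebo arms; the arm size of 37,000 is a hypothetical round number, not the trials' actual enrollment, and Benn et al. may have pooled the two trials differently.

```python
import math


def risk_ratio_ci(deaths_a: int, n_a: int, deaths_b: int, n_b: int, z: float = 1.96):
    """Risk ratio of group A vs. group B with a Wald CI on the log scale (Katz method)."""
    rr = (deaths_a / n_a) / (deaths_b / n_b)
    # Standard error of ln(RR) for two independent binomial proportions
    se = math.sqrt(1 / deaths_a - 1 / n_a + 1 / deaths_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper


# Hypothetical equal arm sizes (assumption); deaths from the text: 31 vaccine, 30 placebo
ARM_SIZE = 37_000
rr, lower, upper = risk_ratio_ci(31, ARM_SIZE, 30, ARM_SIZE)
print(f"RR = {rr:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

With so few deaths, the arm size barely affects the interval, so this approximation lands on the reported 1.03 (0.63-1.71). Because the interval includes 1, the difference between arms is not statistically significant.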

For the Pfizer and Moderna registrational trials, Benn et al. also reported a non-significant 45% increase in cardiovascular deaths (RR=1.45; 95%CI 0.67-3.13) in the vaccine arms of the trials [52]. This outcome was consistent with numerous reports of COVID-19 vaccine-related cardiovascular pathology among both young and old segments of the population [57-63]. None of the mortality estimates from the trials are statistically significant. Nevertheless, the upward trends for both ACM and cardiovascular deaths are concerning. If the Pfizer trial had not been prematurely discontinued, and assuming death rates remained the same in both arms as observed in the first six months, the ACM difference would reach the standard threshold for statistical significance (p < 0.05) at approximately 2.8 years (34 months). The p-value is 0.065 at 2.5 years and 0.053 at 2.75 years (see Appendix 1). These calculations were independently confirmed by Masterjohn [64].

Absolute risk and the “number needed to vaccinate (NNV)”

It is imperative to carefully weigh all potential risks associated with the COVID-19 mRNA products. Should substantial harms be linked to their use, the perceived “reward” conveyed by the NNV would need to be re-appraised. For example, assuming an NNV of 119 and an infection fatality rate (IFR) of 0.23% (both conservative estimates), approximately 52,000 vaccinations (119 ÷ 0.0023) would be needed to prevent one COVID-19-related death. Thus, for the BNT162b2 injection, a generous estimate would be two lives saved from COVID-19 for every 100,000 courses of the biological. Given the evidence of trial misconduct and data integrity problems (see next section), we conjecture that this estimate is an “upper bound” and that the true benefit is likely to be much lower. Regarding potential harms, assuming 30% false-positive reports and a moderate under-reporting factor of 21, we calculate a risk of 27 deaths per 100,000 doses of BNT162b2. Thus, applying these reasonable, conservative assumptions, the estimated harms of the COVID-19 mRNA vaccines greatly outweigh the rewards: for every life saved, the modified mRNA injections caused nearly 14 deaths (for details, see Appendix 2).
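The benefit arithmetic in this paragraph can be reproduced in a few lines. This is a sketch that takes the stated NNV of 119, IFR of 0.23%, and harm estimate of 27 deaths per 100,000 at face value and, as the paragraph does, treats the harm rate (per 100,000 doses) and the benefit rate (per 100,000 courses) as directly comparable. Reading the NNV as "vaccinations per COVID-19 case prevented" is an assumption, and the false-positive and under-reporting adjustments behind the 27-deaths figure are not reproduced here.

```python
NNV = 119            # assumed: vaccinations needed to prevent one COVID-19 case
IFR = 0.0023         # infection fatality rate of 0.23% (from the text)
HARM_PER_100K = 27   # estimated deaths per 100,000 doses (from the text)

# Vaccinations needed to prevent one COVID-19 death
nnv_per_death = NNV / IFR
# Deaths prevented per 100,000 vaccinations
lives_saved_per_100k = 100_000 / nnv_per_death
# Ratio of estimated deaths caused to deaths prevented
harm_to_benefit = HARM_PER_100K / lives_saved_per_100k

print(f"{nnv_per_death:,.0f} vaccinations per death prevented")
print(f"{lives_saved_per_100k:.1f} lives saved per 100,000")
print(f"harm-to-benefit ratio: {harm_to_benefit:.1f}")
```

The three printed values correspond to the paragraph's "approximately 52,000", "two lives saved", and "nearly 14" figures.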

Underreporting of harms and data integrity issues

When Pfizer’s Six-Month Interim Report of Adverse Events (C4591001) revealed a total death count of 38 [35], the number seemed unexpectedly low for a clinical trial involving 44,060 participants amid a pandemic. To investigate, Michels and colleagues estimated the anticipated deaths based on US mortality rates in 2020, presuming comparability across participating countries [54]. With 132 of the trial sites and 80% of subjects in the US, they estimated that 222 deaths should have occurred between July 27, 2020, and March 13, 2021, making the observed 38 deaths only 17% of the projected number. Most trial sites had fewer deaths than anticipated, possibly because of a considerable percentage of subjects “Lost to Follow-up” (4.2% of randomized subjects), including 395 unique subjects within the study period. While some sites recorded negligible losses, others lost up to 5% of their subjects [54]. These losses likely contributed to the seemingly low overall death count and should have prompted greater efforts to locate these individuals. Losing track of nearly 400 study participants during the follow-up period could have substantially compromised the validity and generalizability of the results. Missing data can produce biased estimates and invalid conclusions; here, they could distort estimates of vaccine efficacy and lead to underestimation of SAEs (including deaths), thus misrepresenting the safety profile of the mRNA products. In short, Pfizer’s failure to minimize participant attrition seriously undermined the accuracy and reliability of the six-month study’s conclusions.

These concerns are further compounded by revelations concerning substandard research practices and inadequate data management in the pivotal trials. A whistleblower report by a former employee of the contract research organization responsible for enrolling patients in Pfizer’s pivotal trial raises significant questions regarding data integrity and the safety of trial participants [85]. Among the trial conduct issues documented were failure to report protocol deviations, improper storage of vaccines, mislabeling of laboratory specimens, and lack of timely follow-up for patients experiencing AEs, possibly leading to underreporting. In terms of regulatory oversight, the FDA inspected only nine out of the 153 study sites involved in the Pfizer trial [86].

Finally, participants were unblinded early in the trial, potentially on a wide scale across different study sites. Participants were not presented with clear information regarding potential AEs in either the trial protocols or the consent forms [87]. Some parts of the consent form were misleading and merely intended to elicit participation that might not otherwise have occurred if the volunteers had been made aware that what was promised in theory or “on paper” was unlikely to happen in reality [87]. As a result, participants were not granted truly informed consent; the potential injuries and AEs most likely to be caused by the vaccinations were never openly stated.

This lack of informed consent carried over into the real-world setting following the EUA. For example, not publicly disclosing the Pfizer trial’s exclusion of pregnant women is arguably among the CDC’s most egregious oversights when asserting the safety of COVID-19 vaccine administration during pregnancy [1]. The Nuremberg Code established patients’ rights to voluntary informed consent in the aftermath of World War II [88]. US courts consistently support informed consent as a fundamental right for patients’ autonomy [89]. Informed consent procedures must provide clear distinctions between risks that are frequently observed, risks that occur rarely, and the more obvious risk of lack of effectiveness or waning immunity, which is separate from the risk of SAEs. Whether in a clinical trial or free-living real-world setting, informed consent is essential to providing a clear understanding of the potential risks associated with receiving a genetic vaccine. Throughout the pandemic, healthcare workers were duty-bound to provide clear risk-benefit information to patients. In practice, however, informed consent was non-existent, as information sheets were blank [90], and vaccinees were never informed of potential risks beforehand.

Shifting narratives, illusions of protection

The best evidence that the COVID-19 mRNA vaccines failed to confer protection against COVID-19 comes from two large cohort studies of employees within the Cleveland Clinic Health System (CCHS) after the bivalent mRNA boosters became available [99,100]. In the first study (n=51,017), COVID-19 occurred in 4,424 employees (8.7%) during the 26-week observation period [99]. Against infection by the three prevailing Omicron subvariants, vaccine effectiveness was 29%, 20%, and a non-significant 4%, respectively [99]. No protection was provided when the XBB lineages were dominant. Notably, the risk of “breakthrough” infection was significantly higher among those who received the earlier vaccine, and a higher frequency of vaccinations resulted in a greater risk of COVID-19 [100]. In a second CCHS cohort study (n=48,344), adults who were “not up-to-date” by the CDC definition had a 23% lower incidence of COVID-19 than those who were “up-to-date” with their vaccinations [100]. These findings are further reinforced by multiple real-world studies showing rapidly waning protection against Omicron infection after the boosters [101]. Vaccine effectiveness against laboratory-confirmed Omicron infection and symptomatic disease wanes rapidly within three months of the primary vaccination cycle and booster dose [97].

In a recent study of nearly five million adults, vaccinees who had a SARS-CoV-2 infection within 21 days post injection showed an eight-fold increased risk of ischemic stroke (OR=8.00, 95%CI 4.18-15.31) and a five-fold increased risk of hemorrhagic stroke (OR=5.23, 95%CI 1.11-24.64) compared with vaccinees without concurrent infection [121]. The risk was highest for those receiving the mRNA-1273 injections. Thus, SARS-CoV-2 infection close to the time of vaccination was strongly associated with early incidence of ischemic and hemorrhagic strokes [121]. Again, with a hybrid immunity approach, the potential harms may greatly outweigh the rewards.

Natural immunity carries none of these risks and is more than sufficient against the mild virulence of Omicron subvariants. Much evidence now indicates that natural immunity confers robust, durable, and high-level protection against severe COVID-19 illness [122-126]. A large United Kingdom (UK) study of over 30,000 healthcare workers showed that a prior history of SARS-CoV-2 infection was associated with an 84% reduced risk of reinfection, with a median protective period of seven months [125]. In a large observational study in Israel, previously infected individuals who remained unvaccinated were 6-13 times less likely to contract the virus than those who were vaccinated [122]. Among 32,000 individuals within the same healthcare system, vaccinated individuals had a 27-fold higher risk of developing symptomatic COVID-19 and an eight-fold higher risk of hospitalization compared to their unvaccinated, previously infected counterparts [122].

After recovery from COVID-19, the body harbors long-lived memory immune cells, indicating an enduring capacity to respond to new infections that potentially lasts many years [127]. Mounting evidence suggests that the antibody maturation and T-cell memory induced by repeated natural infection with Omicron can help mitigate future infections [128,129]. In a recent cohort study, children who had experienced prior infection showed long-lasting protection against reinfection with SARS-CoV-2 for at least 18 months [130]. Children aged five to 11 years demonstrated no decline in protection during the entire study, while those aged 12-18 experienced a mild yet measurable decline over time [130]. For these younger generations in particular, natural immunity is more than sufficient and of course vastly safer than the mRNA inoculations.

Analyses of serious harms to humans

For both the Pfizer and Moderna trials combined, there were about 125 SAEs per 100,000 vaccine recipients, which translates into one SAE for every 800 vaccinees [50]. Because the trials excluded the frailest individuals, one would expect to see even higher proportions of SAEs in the population-wide rollouts. Remarkably, the Pfizer trial exhibited a 36% higher risk of SAEs in the vaccine group compared to the placebo group, with a risk difference of 18.0 (95%CI 1.2-34.9) per 10,000 vaccinated and a risk ratio of 1.36 (95%CI 1.02-1.83). These findings stand in sharp contrast with the FDA’s initial claim that SAEs reported by the two pivotal trials were “balanced between treatment groups” [15,50]. The discrepancy may be partly explained by the FDA’s focus on the number of individual participants with SAEs, even though many of those individuals were experiencing multiple SAEs. Instead of counting individuals, Fraiman et al. focused on total SAEs to take the multiple, concurrent events into account [50]. When the SAEs were viewed collectively, the risks in the vaccine group were substantially elevated beyond those previously determined by the FDA.
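The conversions used in this paragraph (a rate per 100,000 into "one in N", and a risk ratio into a percentage increase) are simple arithmetic. A minimal sketch, using only figures quoted in the text; the helper names `one_in_n` and `percent_increase` are hypothetical, chosen for illustration:

```python
def one_in_n(rate_per_100k: float) -> float:
    """Convert an event rate per 100,000 people into 'one event per N people'."""
    return 100_000 / rate_per_100k


def percent_increase(risk_ratio: float) -> float:
    """Express a risk ratio as a percentage increase over the comparison group."""
    return (risk_ratio - 1) * 100


print(one_in_n(125))                  # about 125 SAEs per 100,000 recipients
print(round(percent_increase(1.36)))  # Pfizer SAE risk ratio from the text
```

The same `percent_increase` conversion turns the cardiovascular-death RR of 1.45 into the "45% increase" cited earlier.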

Analyses of two large drug safety reporting systems in the US and Europe revealed over 7.8 million AEs reported by approximately 1.6 million individuals following COVID-19 vaccination [47]. When compared to individuals aged 18-64 years, the older age groups exhibited a higher frequency of death, hospitalizations, and life-threatening reactions, with RR estimates ranging from 1.49 (99%CI 1.44-1.55) to 8.61 (99%CI 8.02-9.23). Signals were identified for myocardial infarction, pulmonary embolism, cardio-respiratory arrest, cerebral infarction, and cerebral hemorrhage associated with both mRNA vaccines. These signals, along with ischemic strokes, were confirmed by a large disproportionality analysis [48]. In an independent risk-benefit analysis, BNT162b2 produced 25 times more SAEs than the number of severe COVID-19 cases prevented [51].

Finally, autopsy studies have provided additional evidence of serious harms. In a comprehensive systematic review with full independent adjudication, 74% of autopsy cases (240 of 325) were judged to have been caused by the COVID-19 mRNA products [139]. The mean time from injection to death was 14.3 days, and in the vast majority of deaths the cardiovascular system was the single organ system whose injury proved fatal.

These findings help explain the wide range of well-documented COVID-19 vaccine-induced toxicities that impact the nervous, gastrointestinal, hepatic, renal, hematological, immune, and reproductive systems [25,144,145]. Post-mortem examinations are critical for identifying potential SAEs of the mRNA inoculations. However, as clinics and hospital administrations have a large vested interest in the COVID-19 vaccines’ distribution, the common administrative practice of discouraging autopsies and postponing autopsy reports only serves to undermine comprehensive risk assessment, perpetuate public misconceptions regarding safety, and weaken public health policymaking [145].

Conclusions

Based on the research presented in this narrative review, the global COVID-19 vaccination campaign should be regarded as a grave medical error. Medical errors represent a substantial threat to personal and public safety and have long constituted a leading cause of death [288-290]. Misguided political and regulatory decisions were made at the highest levels and may have been heavily influenced by financial incentives. Government agencies should have considered all reasonable treatment alternatives and resisted pressure from the medical-pharmaceutical industry rather than allowing population-wide distribution of experimental genetic vaccines.

Had the FDA recognized the nearly four-fold increase in cardiac SAEs (including deaths) subsequently identified in the Pfizer trial’s vaccine group [54], it is doubtful that the EUA would have been granted in December 2020. An in-depth investigation of the COVID-19 vaccine’s long-term safety profile is now urgently needed. Despite the many striking revelations discussed in this review, most developed countries continue to advocate the ongoing adoption of COVID-19 mRNA boosters for the entire eligible population. US federal agencies still emphasize the vaccines’ safety and their role in reducing severe illness and deaths caused by the coronavirus, despite the absence of any randomized, double-blind, placebo-controlled trials to support such claims. This reflects a bewildering disconnect between evidence-based scientific thinking and public health policy.

Careful, objective evaluation of COVID-19 mRNA product safety is crucial for upholding ethical standards and evidence-informed decision-making. Our narrative review concerning the registrational trials and the EUA’s aftermath offers evidence-informed insights into how these genetic vaccines were able to enter the market. In the context of the two pivotal trials, safety was never assessed in a manner commensurate with previously established scientific standards either for vaccines or for GTPs, the more accurate classification of these products. Many key trial findings were either misreported or omitted entirely from published reports. The usual safety testing protocols and toxicology requirements were bypassed by the FDA and vaccine manufacturers, and the premature termination of both trials precluded any unbiased assessment of potential SAEs due to an insufficient timeframe for proper trial evaluation.

It was only after the EUA that the serious biological consequences of rushing the trials became evident, with numerous cardiovascular, neurological, reproductive, hematological, malignant, and autoimmune SAEs identified and published in the peer-reviewed medical literature. Moreover, the COVID-19 mRNA vaccines produced via Process 1 and evaluated in the trials were not the same products eventually distributed worldwide; all of the COVID-19 mRNA products released to the public were produced via Process 2 and have been shown to have varying degrees of DNA contamination. The failure of regulatory authorities to disclose process-related impurities (e.g., SV40 sequences) until now has further increased concerns regarding safety and quality control oversight of mRNA vaccine manufacturing processes.

Since early 2021, excess deaths, cardiac events, strokes, and other SAEs have often been wrongly ascribed to COVID-19 rather than to the COVID-19 mRNA vaccinations. Misattribution of SAEs to COVID-19 may often be due to the amplification of adverse effects when mRNA injections are followed by SARS-CoV-2 subvariant infection. Injuries from the mRNA products overlap with both post-acute COVID-19 syndrome (PACS) and severe acute COVID-19 illness, often obscuring the vaccines’ etiologic contributions. Multiple booster injections appear to cause immune dysfunction, thereby paradoxically contributing to heightened susceptibility to COVID-19 infections with successive doses. For the vast majority of adults under the age of 50, the perceived benefits of the mRNA boosters are profoundly outweighed by their potential disabling and life-threatening harms. Potential harms to older adults appear to be excessive as well.

Given the well-documented SAEs and unacceptable harm-to-reward ratio, we urge governments to endorse and enforce a global moratorium on these modified mRNA products until all relevant questions pertaining to causality, residual DNA, and aberrant protein production are answered.

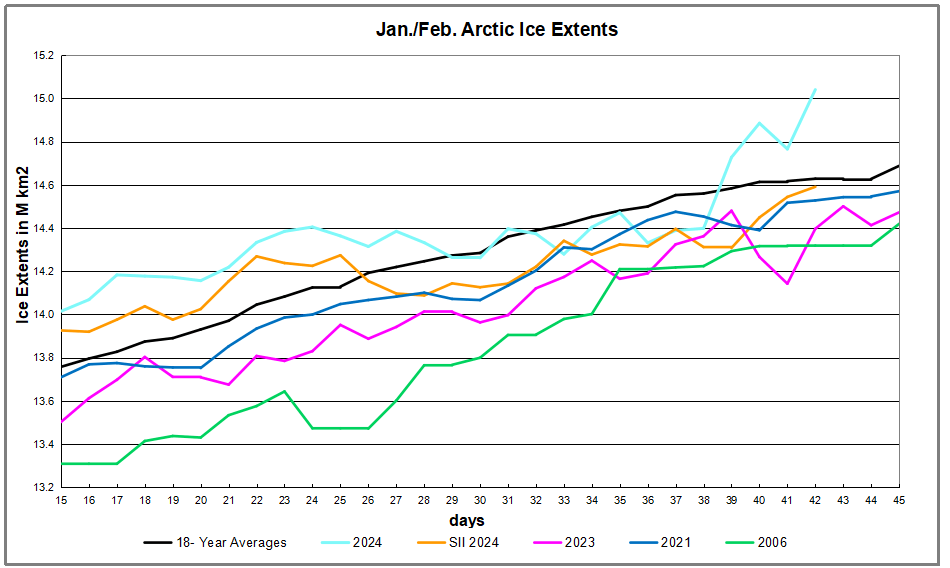

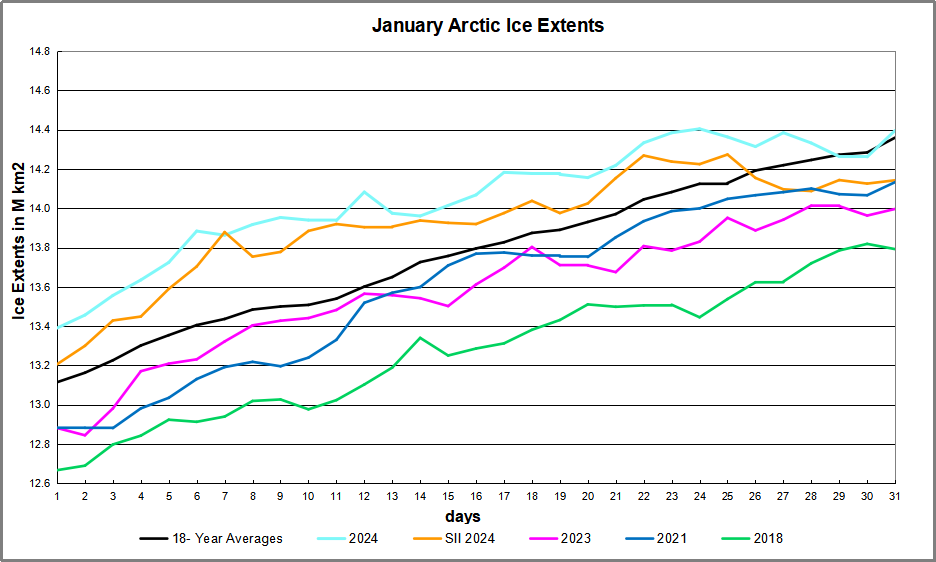

For ice extent in the Arctic, the bar is set at 15M km2. The highest daily average in the last 18 years occurs on day 61 at 15.08M before descending. Most years are able to clear 15M, but in recent years (2017, 2018, 2019 and 2021) ice extents failed to clear the bar at 15M km2. Now on February 11, 2024 (day 42) Arctic ice extent has already leaped over that bar 20 days early.

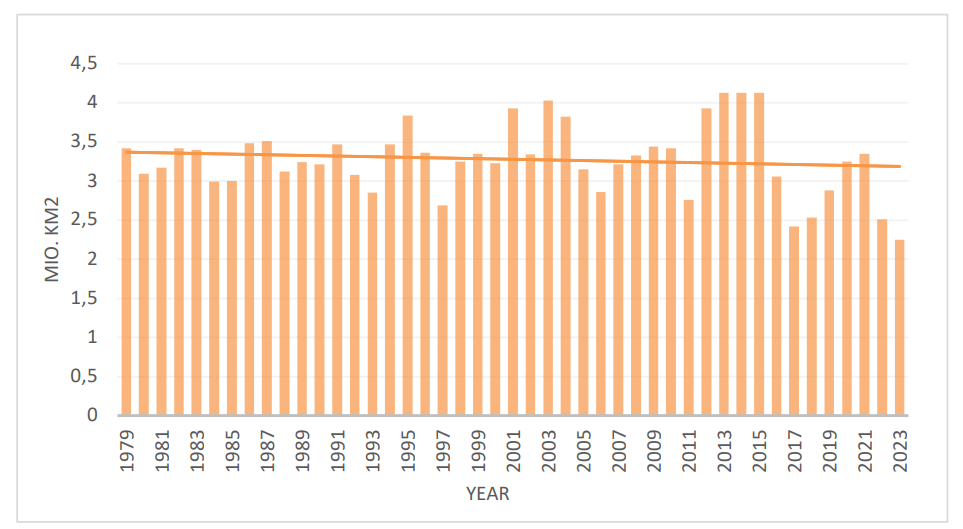

Regarding the extent of the summer (February) sea ice in the Antarctic, the downward trend during the years 1979-2021 was very small, but in 2022 and 2023 a considerable decline was observed, and a decline was also clearly observed for the whole period of 2007-2023. This was in contrast to what happened in the Arctic. The pattern of the annual levels was not the same for the Arctic and Antarctic, indicating different drivers in the North and the South.
