Roots of Climate Change Distortions

Roger Pielke Jr. explains at his blog Why Climate Misinformation Persists. Excerpts in italics with my bolds and added images. H/T John Ray

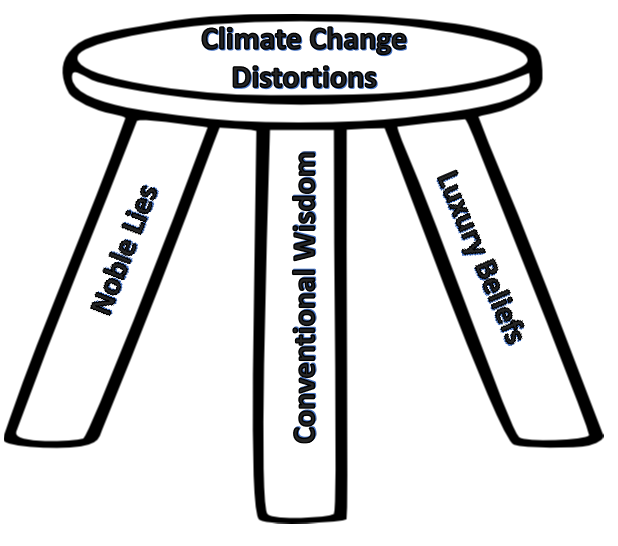

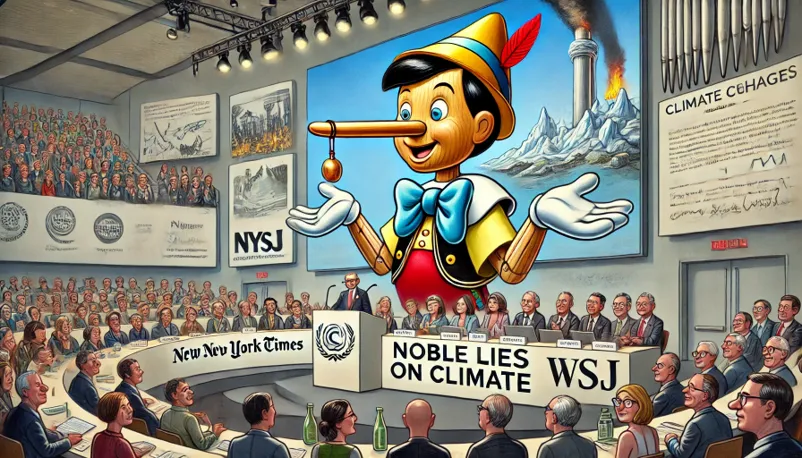

Noble Lies, Conventional Wisdom, and Luxury Beliefs

In 2001, I participated in a roundtable discussion hosted at the headquarters of the National Academy of Sciences (NAS) with a group of U.S. Senators, the Secretary of the Treasury, and about a half-dozen other researchers. The event was organized by Idaho Senator Larry Craig (R-ID) following the release of a short NAS report on climate to help the then-new administration of George W. Bush get up to speed on climate change.

At the time I was a 32-year-old, fresh-faced researcher about to leave the National Center for Atmospheric Research for a faculty position across town at the University of Colorado. I had never testified before Congress or really had any high-level policy engagement.

When the roundtable was announced, I experienced something completely new in my professional career — several of my much more senior colleagues contacted me to lobby me to downplay or even to misrepresent my research on the roles of climate and society in the economic impacts of extreme weather. I had become fairly well known in the atmospheric sciences research community back then for our work showing that increasing U.S. hurricane damage could be explained entirely by more people and more wealth.

One colleague explained to me that my research, even though scientifically accurate, might distract from efforts to advocate for emissions reductions:

“I think we have a professional (or moral?) obligation to be very careful what we say and how we say it when the stakes are so high.”

At the time, I wrote that the message I heard was that the “ends justify means or, in other words, doing the ‘right thing’ for the wrong reasons is OK,” even if that meant downplaying or even misrepresenting my own research.

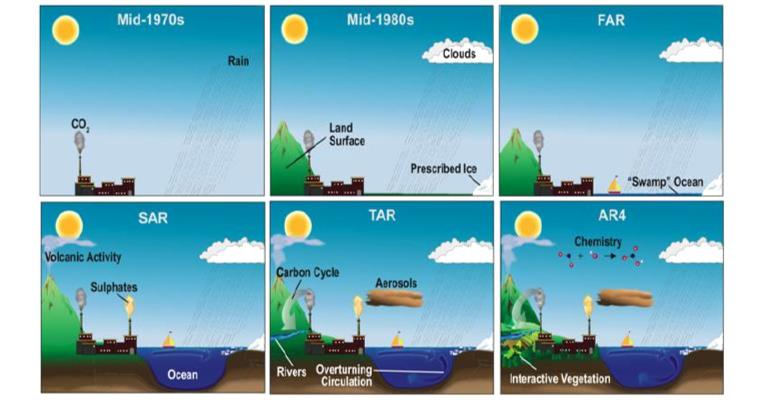

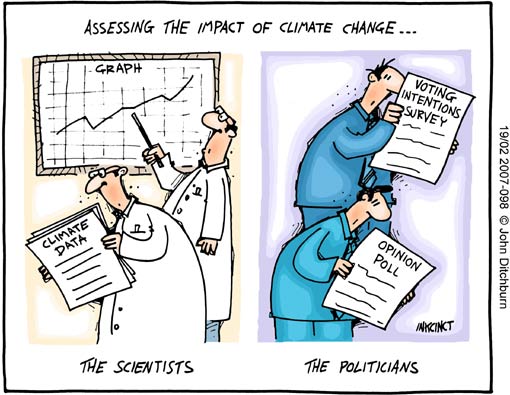

I have thought about that experience over the past few weeks as I have received many comments on the first four installments of the THB series Climate Fueled Extreme Weather (Part 1, Part 2, Part 3, Part 4). One of the most common questions I’ve received asks why it is that the scientific assessments of the Intergovernmental Panel on Climate Change (IPCC) are so different from what is reported in the media, proclaimed in policy, and promoted by the most public-facing climate experts. And, why can’t that gap be closed?

Over the past 23 years, I have wondered a lot myself about this question — not just how misinformation arises in policy discourse (we know a lot about that), but why it is that the expert climate community has been unable or unwilling to correct rampant misinformation about extreme weather, with some even promoting that misinformation.

Obviously, I don’t have good answers, but I will propose three inter-related explanations that help me to make sense of these dynamics — the noble lie, conventional wisdom, and luxury beliefs.

The Noble Lie

The most important explanation is that many in the climate community — like my senior colleague back in 2001 — appear to believe that achieving emissions reductions is so very important that its attainment trumps scientific integrity. The ends justify the means. They also believe that by hyping extreme weather, they will make emissions reductions more likely (I disagree, but that is a subject for another post).

I explained this as a “fear factor” in The Climate Fix:

Typically, the battle over climate-change science focused on convincing (or, rather, defeating) those skeptical has meant advocacy focused on increasing alarm. As one Australian academic put it at a conference at Oxford University in the fall of 2009: “The situation is so serious that, although people are afraid, they are not fearful enough given the science. Personally I cannot see any alternative to ramping up the fear factor.” Similarly, when asked how to motivate action on climate change, economist Thomas Schelling replied, “It’s a tough sell. And probably you have to find ways to exaggerate the threat. . . [P]art of me sympathizes with the case for disingenuousness. . . I sometimes wish that we could have, over the next five or ten years, a lot of horrid things happening—you know, like tornadoes in the Midwest and so forth—that would get people very concerned about climate change. But I don’t think that’s going to happen.” From the opening ceremony of the Copenhagen climate negotiations to Al Gore’s documentary, An Inconvenient Truth, to public comments from leading climate scientists, to the echo-chambers of the blogosphere, fear and alarm have been central to advocacy for action on climate change.

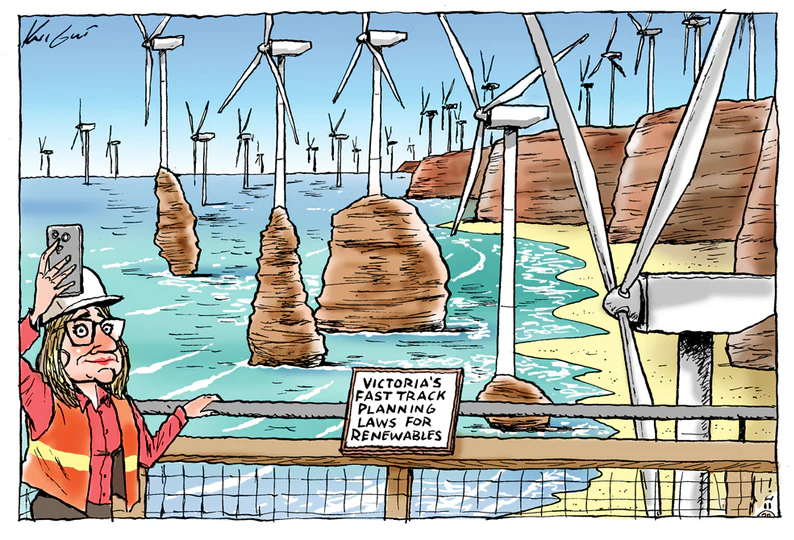

It’s just a short path from climate fueled extreme weather to the noble lie at the heart of fear-based campaigns.

Conventional Wisdom

The phrase was popularized by John Kenneth Galbraith in his 1958 book, The Affluent Society, where he explained:

It will be convenient to have a name for the ideas which are esteemed at any time for their acceptability, and it should be a term that emphasizes this predictability. I shall refer to these ideas henceforth as the conventional wisdom.

The key point here is “acceptability” regardless of an idea’s conformance with truth. Conventional wisdom is that which everyone knows to be true, whether actually true or not. Examples of beliefs that at one time or another were (or are) conventional wisdom include: Covid-19 did not come from a lab, Sudafed helps with hay fever, spinach is high in iron, and climate change is fueling extreme weather.

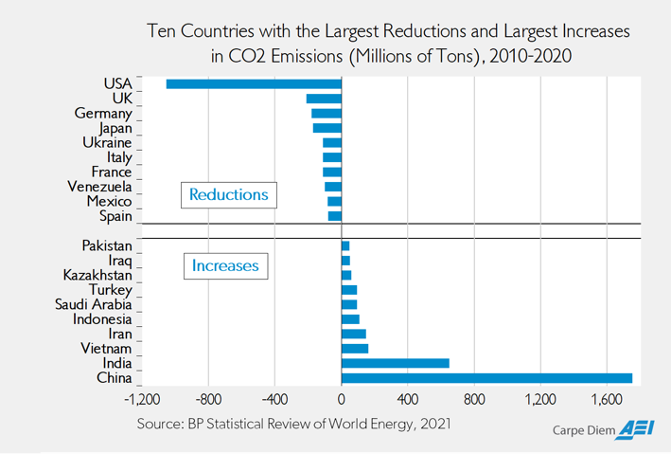

As the noble lie of climate fueled extreme weather has taken hold as conventional wisdom, few have been willing to offer correctives, though there are important exceptions out in plain sight, like the IPCC Working Group 1 and the NOAA GFDL Hurricanes and Global Warming page.

Actively challenging conventional wisdom has professional and social costs. For instance, those who suggested that Covid-19 may have had a research-related origin were labeled conspiracy theorists and racists, those who suggested that President Biden was too old to serve another term were called Trump enablers, and those who accurately represent the science of climate and extreme weather are tarred as climate deniers and worse.

A few years ago I wrote an op-ed for a U.S. national newspaper and included a line about how hurricane landfalls had not increased in the U.S. in frequency or in their intensity since at least 1900. The editor removed the sentence and told me that while the statement was correct, most readers wouldn’t believe it and that would compromise the whole piece.

When noble lies become conventional wisdom, they become harder to correct.

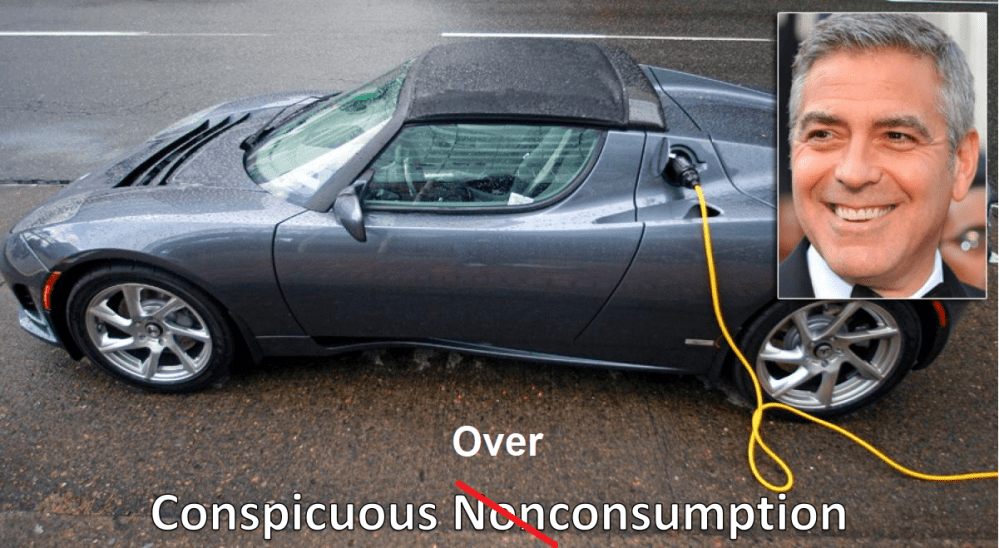

Luxury Beliefs

Yascha Mounk offers a useful definition:

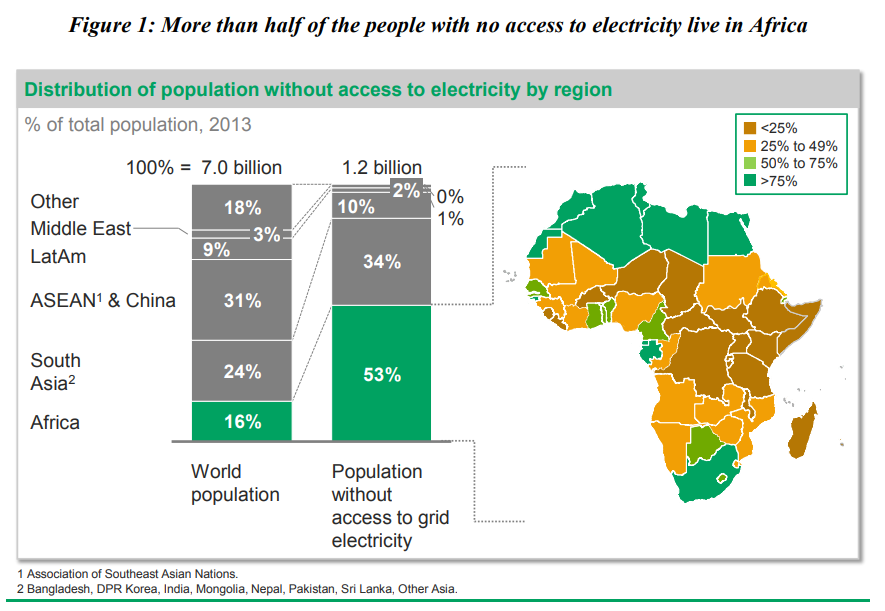

Luxury beliefs are ideas professed by people who would be much less likely to hold them if they were not insulated from, and had therefore failed seriously to consider, their negative effects.

After all, what is the real harm if people believe in climate fueled extreme weather? If people believe that the extreme weather outside their window or on their feeds makes aggressive climate policies more compelling, then that’s a good thing, right?

Surely there can only be positive outcomes that result from promoting misunderstandings of climate and extreme weather at odds with evidence and scientific assessments?

Well, no.

Last year I attended a forum at Lloyd’s of London held under the Chatham House Rule. At that event, later reported by the Financial Times, the company made a surprising admission contrary to the conventional wisdom (emphasis added):

Lloyd’s of London has warned insurers that the full impact of climate change has yet to translate into claims data despite annual natural catastrophe losses borne by the sector topping $100bn.

Insurance prices are surging as companies look to repair their margins after years of significant losses from severe weather to insured properties, exacerbated by inflation in rebuild costs. A warming planet has been identified by insurance experts and campaigners alike as a key factor.

But at a private event last month, one executive at the corporation that oversees the market told underwriters that it has not yet seen clear evidence that a warming climate is a major driver in claims costs.

It turns out that misunderstandings of the science of climate and extreme weather actually lead to unnecessary costs for people whose jobs involve making decisions about climate and extreme weather. Those unnecessary costs often trickle down to ordinary people. Kudos to Lloyd’s for calling things straight, even if only in a private forum; that is, of course, their professional responsibility.

Conclusion

In a classic paper in 2012, the late Steve Rayner explained that some forms of social ignorance are created and maintained on purpose:

To make sense of the complexity of the world so that they can act, individuals and institutions need to develop simplified, self-consistent versions of that world. The process of doing so means that much of what is known about the world needs to be excluded from those versions, and in particular that knowledge which is in tension or outright contradiction with those versions must be expunged. This is ‘uncomfortable knowledge’.

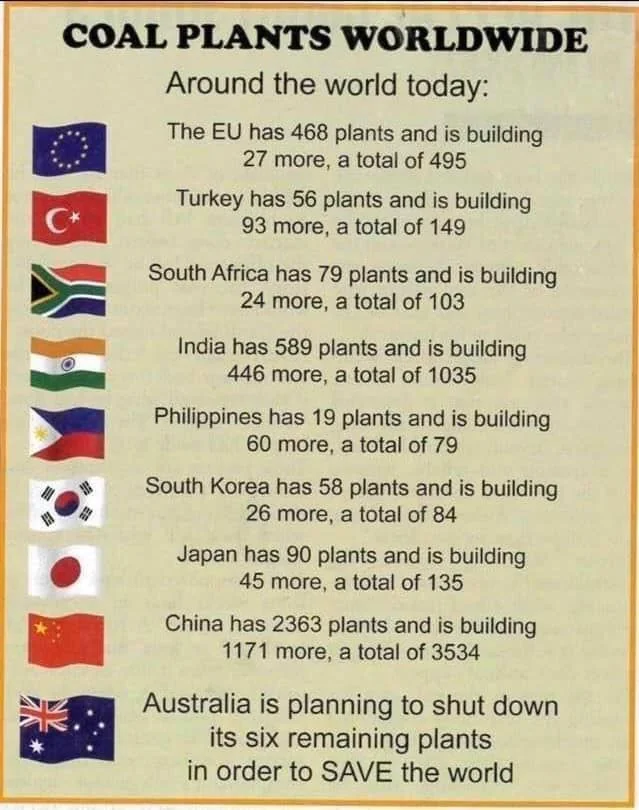

Climate fueled extreme weather is perhaps the canonical example of dysfunctional socially constructed ignorance. It may be uncorrectable: a falsehood that persists permanently.

Here at THB, I am an optimist that science and policy are self-correcting, even if that takes a while. That means that the series on climate fueled extreme weather will keep going. Have a great weekend!