Tomas Fürst explains the dangers of believing that models are reality in his Brownstone article Mathematical Models Are Weapons of Mass Destruction. Excerpts in italics with my bolds and added images.

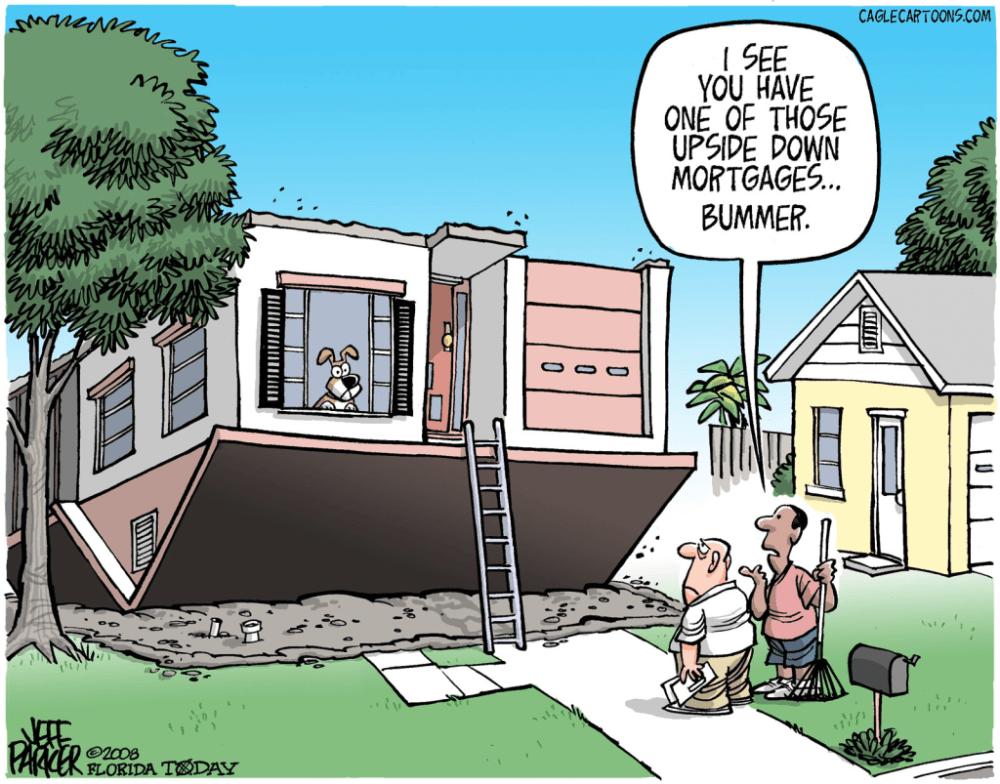

Great Wealth Destroyed in Mortgage Crisis by Trusting a Financial Model

In 2007, the total value of an exotic form of financial insurance called Credit Default Swaps (CDS) reached $67 trillion. This number exceeded the global GDP in that year by about fifteen percent. In other words, participants in the financial markets had placed bets worth more than everything produced in the world that year.

What were the guys on Wall Street betting on? On whether certain boxes of financial pyrotechnics called Collateralized Debt Obligations (CDOs) were going to explode. Betting an amount larger than the world's entire output requires a significant degree of certainty on the part of the insurance provider.

What was this certainty supported by?

A magic formula called the Gaussian Copula Model. The CDO boxes contained the mortgages of millions of Americans, and the funny-named model estimated the joint probability that holders of any two randomly selected mortgages would both default on the mortgage.

The key ingredient in this magic formula was the gamma coefficient, which used historical data to estimate the correlation between mortgage default rates in different parts of the United States. This correlation was quite small for most of the 20th century because there was little reason why mortgages in Florida should be somehow connected to mortgages in California or Washington.
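To make the "magic formula" a little less magical, here is a minimal Python sketch of a two-mortgage Gaussian copula. The version usually quoted in popular accounts is Pr[T_A < 1, T_B < 1] = Φ₂(Φ⁻¹(F_A(1)), Φ⁻¹(F_B(1)), γ): each mortgage's one-year default probability is mapped to a threshold on a standard normal scale, and the two thresholds are fed into a bivariate normal distribution whose correlation is γ. The function name, the example probabilities and the numerical integration below are my own illustrative assumptions, not the code any bank actually used.

```python
from statistics import NormalDist

STD = NormalDist()  # standard normal distribution (mean 0, sd 1)


def joint_default_prob(p_a: float, p_b: float, gamma: float, steps: int = 4000) -> float:
    """Probability that both mortgages default under a Gaussian copula (illustrative sketch).

    p_a, p_b: marginal default probabilities over the horizon.
    gamma: correlation of the latent normal variables (the γ in the formula above).
    """
    if not -1.0 < gamma < 1.0:
        raise ValueError("gamma must lie strictly between -1 and 1")
    # Map each marginal probability to a threshold on the standard normal scale.
    a = STD.inv_cdf(p_a)
    b = STD.inv_cdf(p_b)
    s = (1.0 - gamma ** 2) ** 0.5
    # Bivariate normal CDF written as a 1-D integral:
    # P(X < a, Y < b) = integral from -inf to a of phi(x) * Phi((b - gamma*x) / s) dx
    lo = -10.0  # lower cut-off; the normal density is negligible below this
    h = (a - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        f = STD.pdf(x) * STD.cdf((b - gamma * x) / s)
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoidal rule end-point weights
        total += weight * f
    return total * h
```

With a 5% default probability for each mortgage, γ = 0 gives 0.05 × 0.05 = 0.25%, while pushing γ toward one drives the joint probability toward 5%, the point at which "both default" becomes almost the same event as "one defaults".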

But in the summer of 2006, real estate prices across the United States began to fall, and millions of people found themselves owing more on their homes than the homes were currently worth. In this situation, many Americans rationally decided to default on their mortgages. So the number of delinquent mortgages increased dramatically, all at once, across the country.

The gamma coefficient in the magic formula jumped from negligible values towards one and the boxes of CDOs exploded all at once. The financiers – who bet the entire planet’s GDP on this not happening – all lost.

This entire bet, in which a few speculators lost more than the whole planet produces in a year, was based on a mathematical model that its users mistook for reality. The financial losses they caused were unpayable, so the only option was for the state to pay for them. Of course, the states didn't exactly have a spare global GDP either, so they did what they usually do: they added these unpayable debts to the long list of unpayable debts they had run up before. A single formula, barely 40 ASCII characters long, dramatically increased the total debt of the “developed” world by tens of percent of GDP. It has probably been the most expensive formula in the history of mankind.

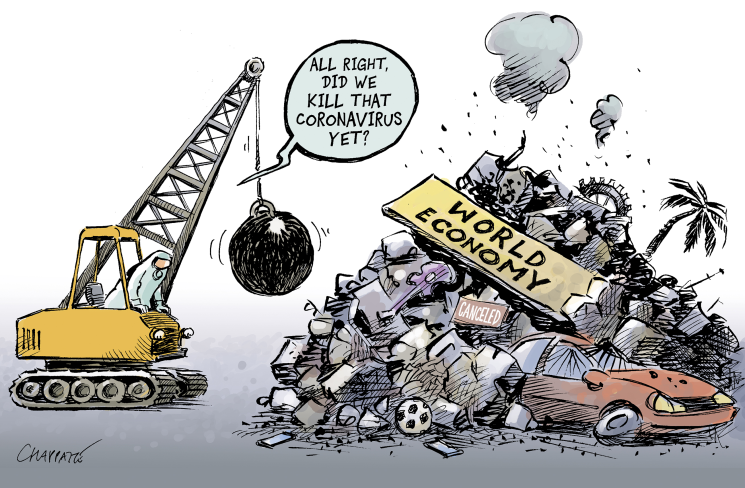

Covid Panic and Social Devastation from Following an Epidemic Model

After this fiasco, one would assume people would start paying more attention to the predictions of various mathematical models. In fact, the opposite happened. In the fall of 2019, a virus, later named SARS-CoV-2 after its older siblings, began to spread from Wuhan, China. Those older siblings were pretty nasty, so at the beginning of 2020, the whole world went into panic mode.

If the infection fatality rate of the new virus were comparable to that of its older siblings, civilization might really collapse. And exactly at this moment, many dubious academic characters emerged around the world with their pet mathematical models and began spewing wild predictions into the public space.

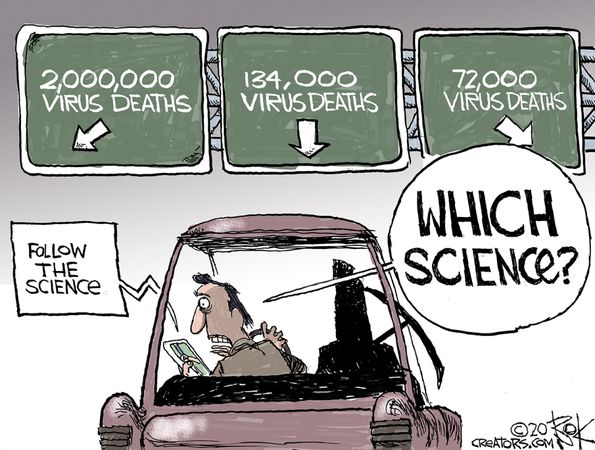

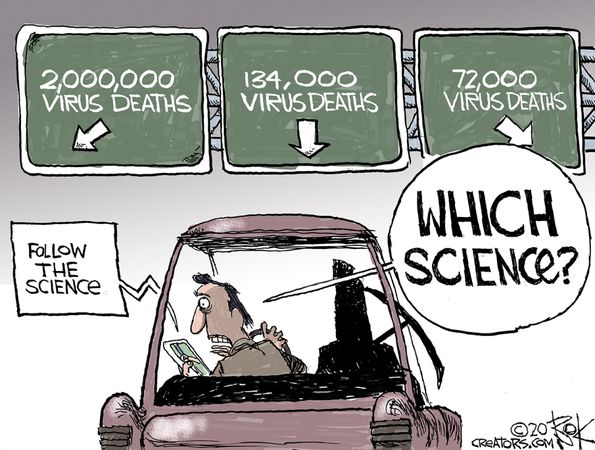

Journalists went through the predictions, unerringly picked out only the most apocalyptic ones, and began to recite them in a dramatic voice to bewildered politicians. In the subsequent “fight against the virus,” any critical discussion about the nature of mathematical models, their assumptions, validation, the risk of overfitting, and especially the quantification of uncertainty was completely lost.

Most of the mathematical models that emerged from academia were more or less complex versions of a naive game called SIR. These three letters stand for Susceptible–Infected–Recovered and come from the beginning of the 20th century, when, thanks to the absence of computers, only the simplest differential equations could be solved. SIR models treat people as colored balls that float in a well-mixed container and bump into each other.

When red (infected) and green (susceptible) balls collide, two reds are produced. Each red (infected) turns black (recovered) after some time and stops noticing the others. And that’s all. The model does not even capture space in any way – there are neither cities nor villages. This completely naive model always produces (at most) one wave of contagion, which subsides over time and disappears forever.
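The colored-balls game can be written down in a few lines. This is a minimal sketch of the classic SIR equations integrated with a simple Euler step; the infection rate (beta = 0.3 per day), recovery rate (0.1 per day) and starting fractions are illustrative assumptions, not values taken from any published Covid model.

```python
def simulate_sir(beta, recovery_rate, s0, i0, days, dt=0.1):
    """Euler integration of the SIR equations; s, i, r are fractions of a well-mixed population."""
    s, i, r = s0, i0, 1.0 - s0 - i0
    infected = [i]
    steps_per_day = round(1 / dt)
    for _ in range(days):
        for _ in range(steps_per_day):
            new_infections = beta * s * i * dt      # red-green collisions turn greens red
            new_recoveries = recovery_rate * i * dt  # reds turn black after some time
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        infected.append(i)  # record the infected fraction once per day
    return infected


def count_peaks(series):
    """Number of strict local maxima in a sequence."""
    return sum(1 for k in range(1, len(series) - 1) if series[k - 1] < series[k] > series[k + 1])


# Illustrative run: 0.1% initially infected, 300 days.
curve = simulate_sir(beta=0.3, recovery_rate=0.1, s0=0.999, i0=0.001, days=300)
```

Whatever positive rates you choose, the infected curve of this basic model rises once, peaks and decays: the susceptible pool only shrinks, so the growth term can change sign only once, and nothing in the equations can produce a second wave on its own.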

And exactly at this moment, the captains of the coronavirus response made the same mistake as the bankers fifteen years ago: They mistook the model for reality. The “experts” were looking at the model that showed a single wave of infections, but in reality, one wave followed another. Instead of drawing the correct conclusion from this discrepancy between model and reality – that these models are useless – they began to fantasize that reality deviates from the models because of the “effects of the interventions” by which they were “managing” the epidemic. There was talk of “premature relaxation” of the measures and other mostly theological concepts. Understandably, there were many opportunists in academia who rushed forward with fabricated articles about the effect of interventions.

Meanwhile, the virus did its thing, ignoring the mathematical models. Few people noticed, but during the entire epidemic, not a single mathematical model succeeded in predicting (at least approximately) the peak of the current wave or the onset of the next wave.

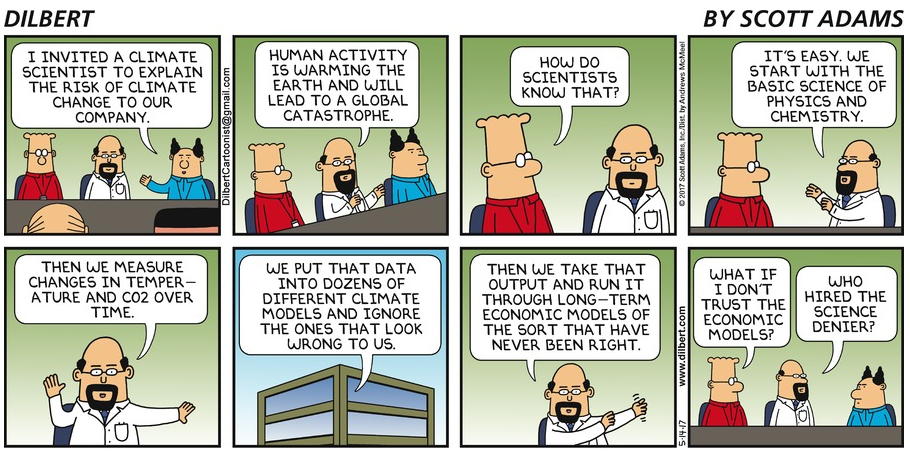

Unlike Gaussian Copula Models, which – besides having a funny name – worked at least when real estate prices were rising, SIR models had no connection to reality from the very beginning. Later, some of their authors started to retrofit the models to match historical data, thus completely confusing the non-mathematical public, which typically does not distinguish between an ex-post fitted model (where real historical data are nicely matched by adjusting the model parameters) and a true ex-ante prediction for the future. As Yogi Berra would have it: It’s tough to make predictions, especially about the future.
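The difference between an ex-post fit and an ex-ante prediction is easy to demonstrate. The sketch below uses invented data (a straight-line trend with a small alternating wiggle) and a model with as many adjustable parameters as data points: a polynomial through all ten "historical" points. It is a deliberately extreme toy, and the names and numbers are hypothetical, not a reconstruction of any epidemic model.

```python
def interpolating_polynomial(xs, ys):
    """Return the unique polynomial of degree < len(xs) through all (x, y) points (Lagrange form)."""
    def poly(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            term = yj
            for m, xm in enumerate(xs):
                if m != j:
                    term *= (x - xm) / (xj - xm)
            total += term
        return total
    return poly


# Hypothetical "history": a linear trend plus a small alternating wiggle on days 0..9.
days = list(range(10))
observed = [d + 0.1 * (-1) ** d for d in days]

# Ten data points, ten adjustable coefficients: the ex-post fit is exact by construction.
model = interpolating_polynomial(days, observed)
in_sample_error = max(abs(model(d) - y) for d, y in zip(days, observed))

# Ex-ante "forecast" for the next day, compared with the underlying trend value.
forecast = model(10)
trend_value = 10.0
```

The fit to the ten historical days is perfect, yet the forecast for day 10 comes out at about -92 instead of roughly 10.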

While during the financial crisis, misuse of mathematical models brought mostly economic damage, during the epidemic it was no longer just about money. Based on nonsensical models, all kinds of “measures” were taken that damaged many people’s mental or physical health.

Nevertheless, this global loss of judgment had one positive effect: The awareness of the potential harm of mathematical modelling spread from a few academic offices to wide public circles. While a few years ago the concept of a “mathematical model” was shrouded in religious reverence, after three years of the epidemic, public trust in the ability of “experts” to predict anything went to zero.

Moreover, it wasn’t just the models that failed – a large part of the academic and scientific community also failed. Instead of promoting a cautious and sceptical evidence-based approach, they became cheerleaders for many stupidities the policymakers came forward with. The loss of public trust in the contemporary Science, medicine, and its representatives will probably be the most significant consequence of the epidemic.

Demolishing Modern Civilization Because of Climate Model Predictions

Which brings us to other mathematical models, the consequences of which can be much more destructive than everything we have described so far. These are, of course, climate models. The discussion of “global climate change” can be divided into three parts.

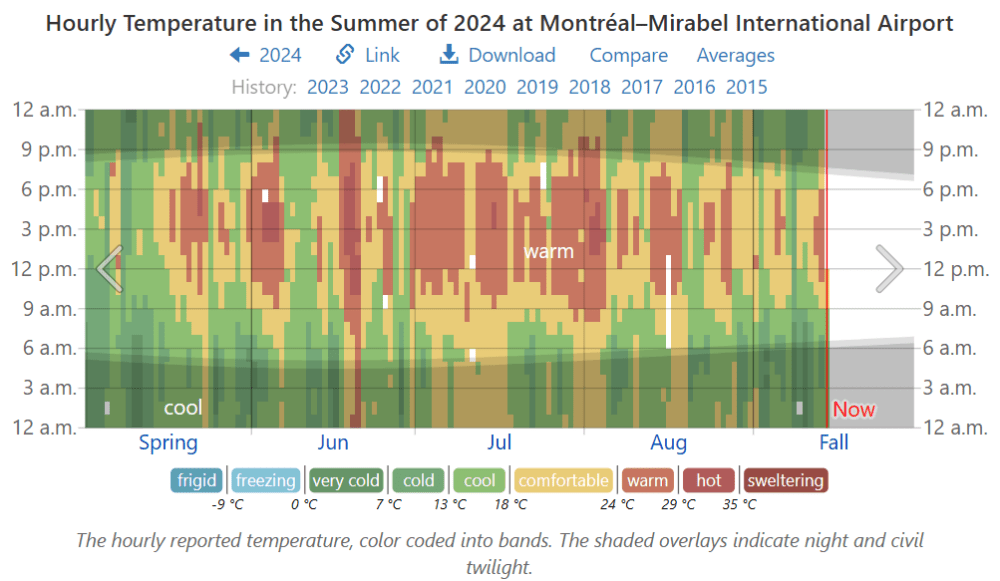

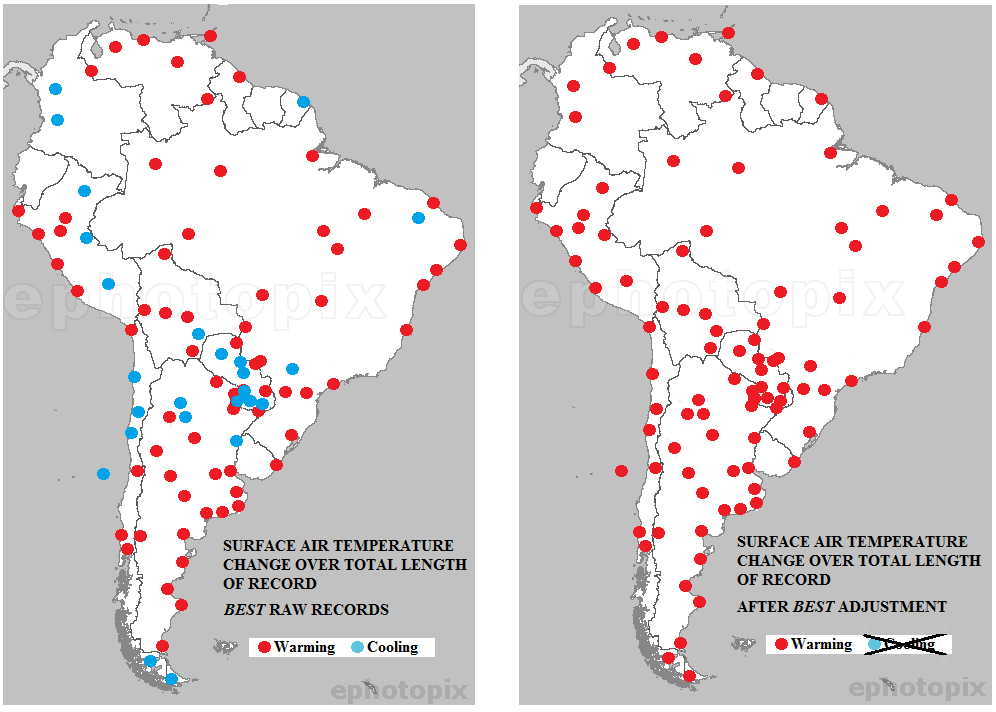

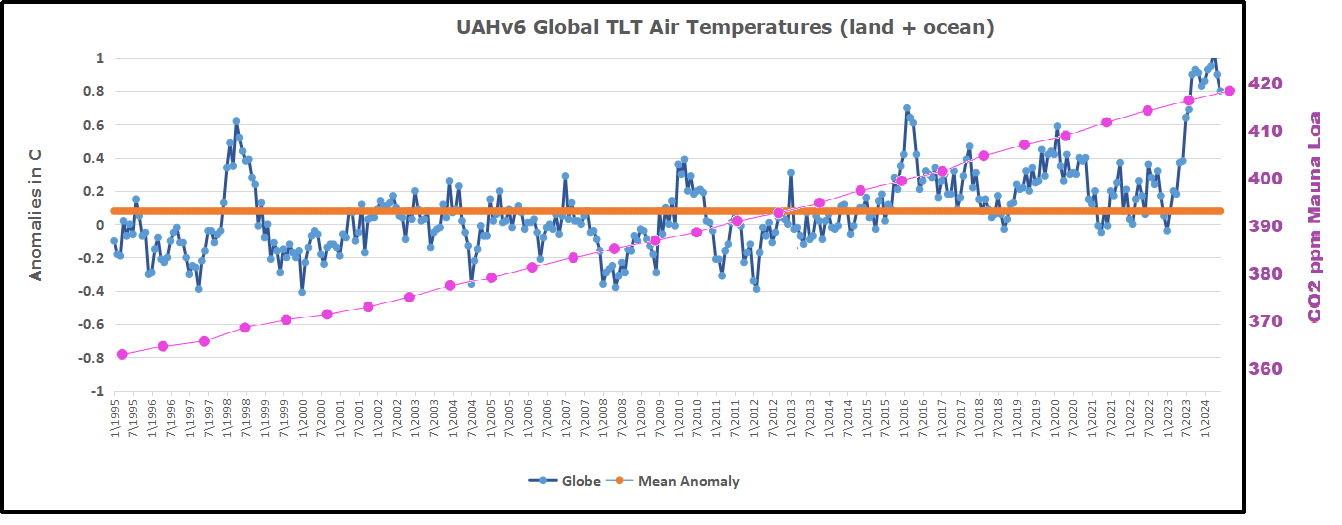

1. The real evolution of temperature on our planet. For the last few decades, we have had reasonably accurate and stable direct measurements from many places on the planet. The further we go into the past, the more we have to rely on various temperature reconstruction methods, and the uncertainty grows. Doubts may also arise as to what temperature is actually the subject of the discussion: Temperature is constantly changing in space and time, and it is very important how the individual measurements are combined into some “global” value. Given that a “global temperature” – however defined – is a manifestation of a complex dynamic system that is far from thermodynamic equilibrium, it is quite impossible for it to be constant. So, there are only two possibilities: At every moment since the formation of planet Earth, “global temperature” was either rising or falling. It is generally agreed that there has been an overall warming during the 20th century, although the geographical differences are significantly greater than is normally acknowledged. A more detailed discussion of this point is not the subject of this essay, as it is not directly related to mathematical models.

2. The hypothesis that an increase in CO2 concentration drives an increase in global temperature. This is a legitimate scientific hypothesis; however, the evidence for it involves more mathematical modelling than you might think. Therefore, we will address this point in more detail below.

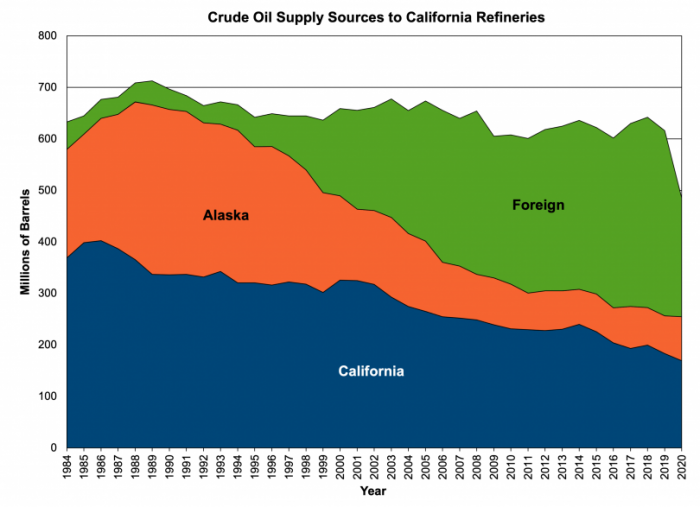

3. The rationality of the various “measures” that politicians and activists propose to prevent global climate change or at least mitigate its effects. Again, this point is not the focus of this essay, but it is important to note that many of the proposed (and sometimes already implemented) climate change “measures” will have orders of magnitude more dramatic consequences than anything we did during the Covid epidemic. So, with this in mind, let’s see how much mathematical modelling we need to support hypothesis 2.

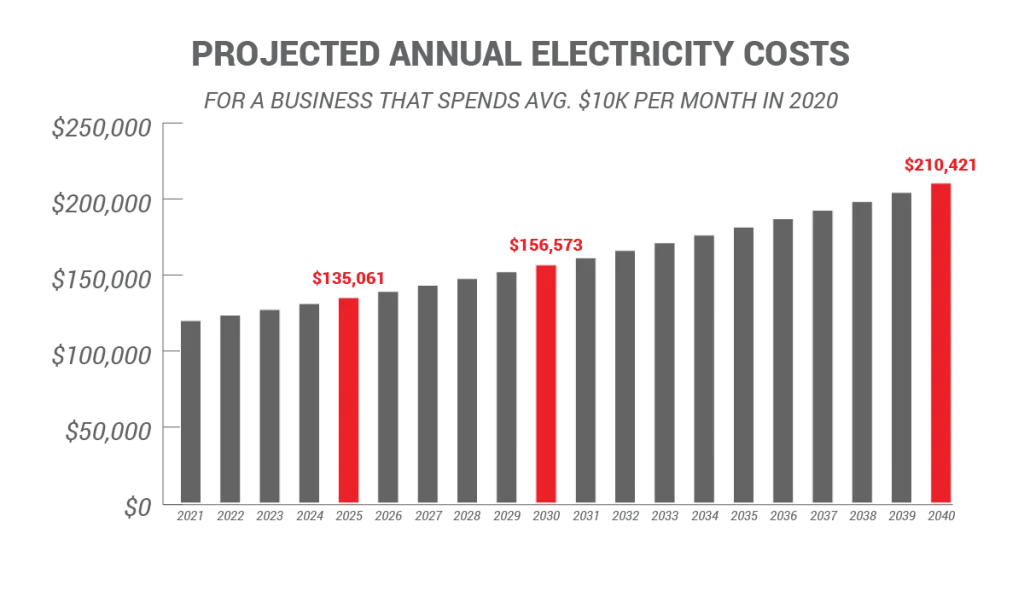

Yes, they are projecting spending of more than US$100 trillion.

At first glance, there is no need for models because the mechanism by which CO2 heats the planet has been well understood since Joseph Fourier, who first described it. In elementary school textbooks, we draw a picture of a greenhouse with the sun smiling down on it. Short-wave radiation from the sun passes through the glass, heating the interior of the greenhouse, but long-wave radiation (emitted by the heated interior of the greenhouse) cannot escape through the glass, thus keeping the greenhouse warm. Carbon dioxide, dear children, plays a similar role in our atmosphere as the glass in the greenhouse.

This “explanation,” after which the entire greenhouse effect is named, and which we call the “greenhouse effect for kindergarten,” suffers from a small problem: It is completely wrong. The greenhouse keeps warm for a completely different reason. The glass shell prevents convection – warm air cannot rise and carry the heat away. This was verified experimentally as early as the beginning of the 20th century by building an identical greenhouse from a material that is transparent to infrared radiation. The difference in temperatures inside the two greenhouses was negligible.

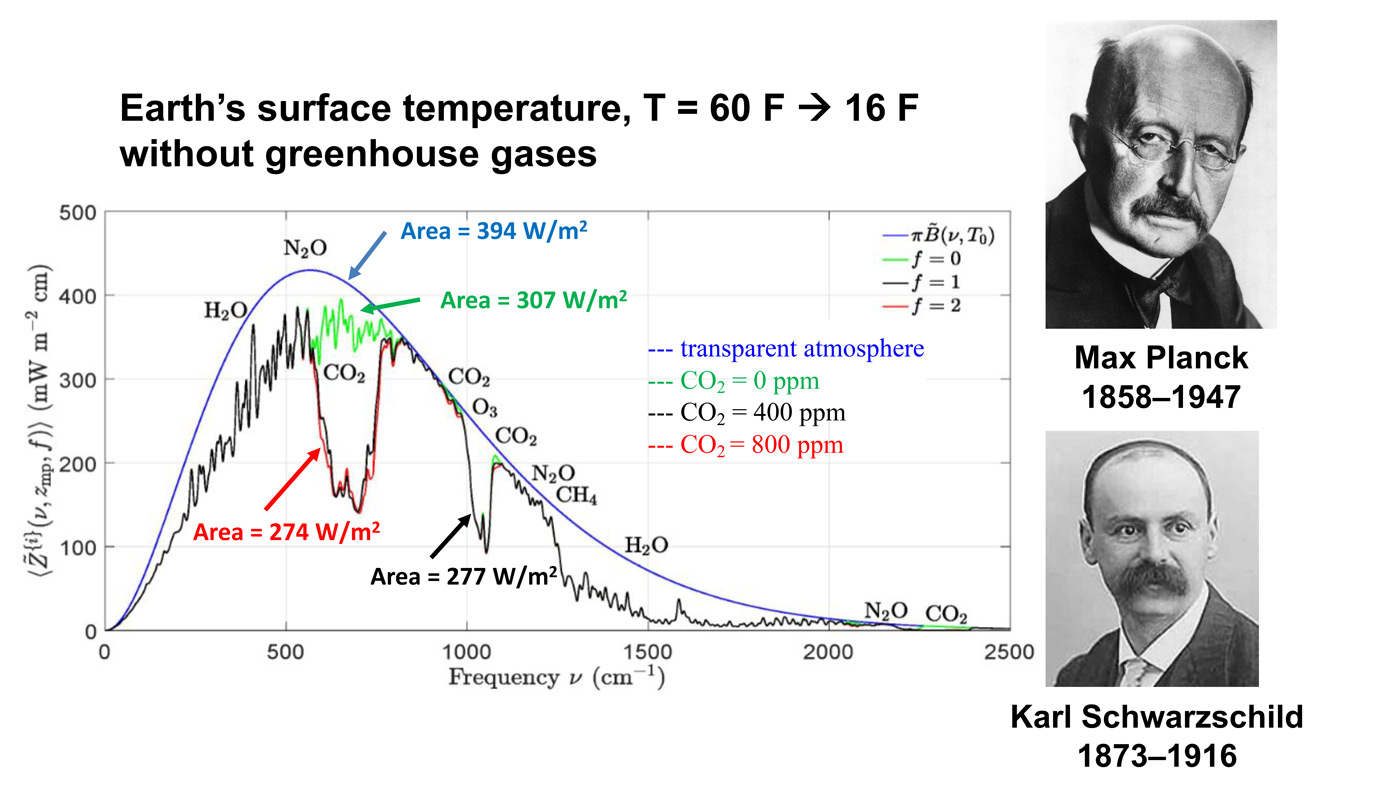

OK, greenhouses are not warm due to the greenhouse effect (to appease various fact-checkers, this fact can be found on Wikipedia). But that doesn't mean that carbon dioxide doesn't absorb infrared radiation and doesn't behave in the atmosphere the way we imagined glass in a greenhouse behaved. Carbon dioxide actually does absorb radiation in several wavelength bands. Water vapor, methane, and other gases also have this property. The greenhouse effect (erroneously named after the greenhouse) is a well-established experimental fact, and without greenhouse gases, the Earth would be considerably colder.

It follows logically that when the concentration of CO2 in the atmosphere increases, the CO2 molecules will capture even more infrared photons, which will therefore not be able to escape into space, and the temperature of the planet will rise further. Most people are satisfied with this explanation and continue to consider the hypothesis from point 2 above as proven. We call this version of the story the “greenhouse effect for philosophical faculties.”

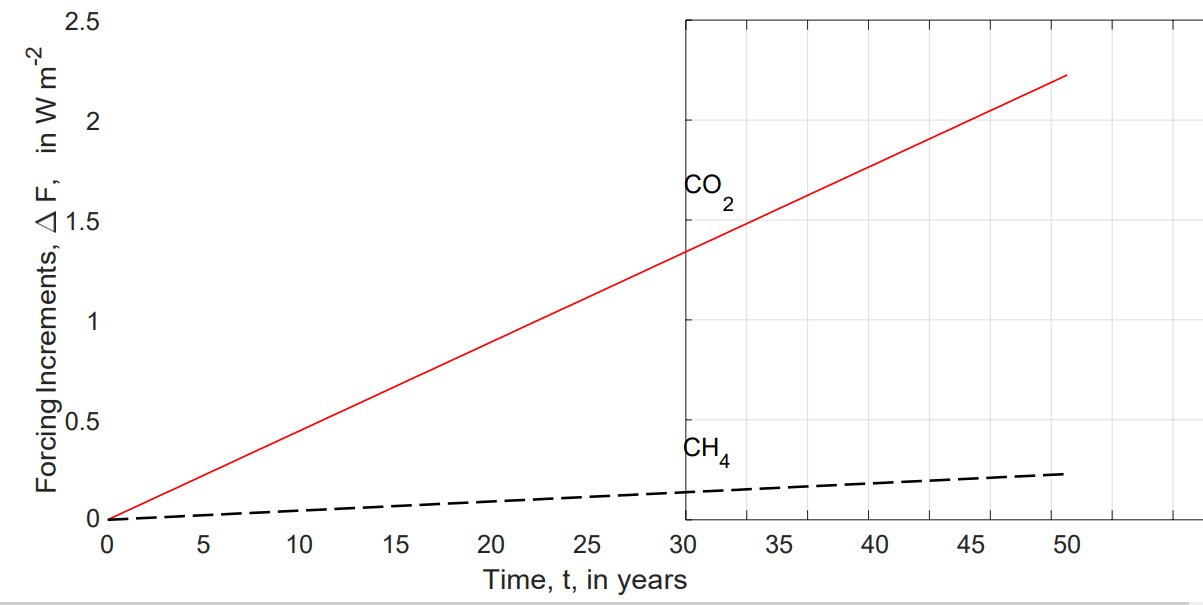

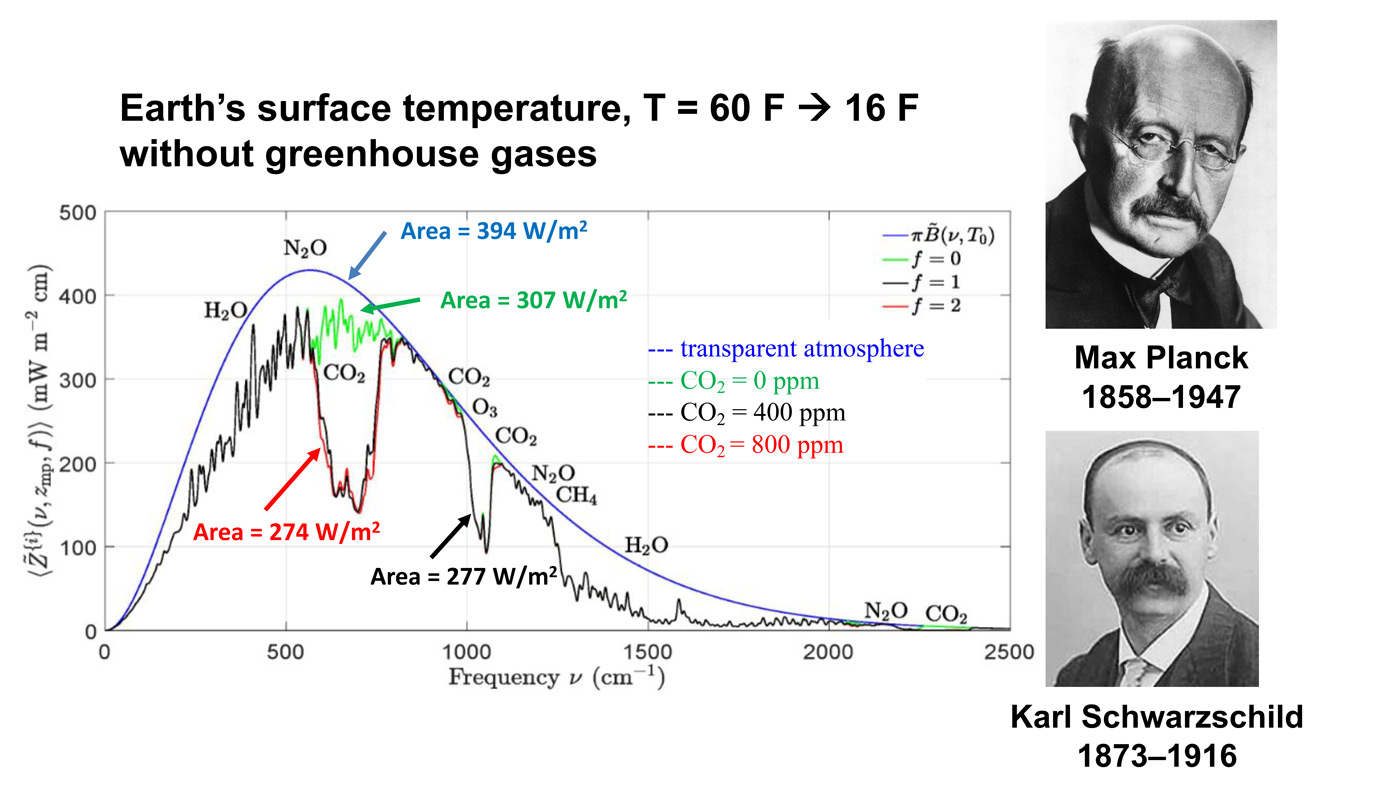

The important point here is the red line. This is what Earth would radiate to space if you were to double the CO2 concentration from today’s value. Right in the middle of these curves, you can see a gap in the spectrum. The gap is caused by CO2 absorbing radiation that would otherwise cool the Earth. If you double the amount of CO2, you don’t double the size of that gap. You just go from the black curve to the red curve, and you can barely see the difference.

The problem is, of course, that there is so much carbon dioxide (and other greenhouse gases) in the atmosphere already that no photon with the appropriate frequency has a chance to escape from the atmosphere without being absorbed and re-emitted many times by some greenhouse gas molecule.

A certain increase in the absorption of infrared radiation induced by higher concentration of CO2 can thus only occur at the edges of the respective absorption bands. With this knowledge – which, of course, is not very widespread among politicians and journalists – it is no longer obvious why an increase in the concentration of CO2 should lead to a rise in temperature.
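The saturation argument can be illustrated with the Beer-Lambert law, under which the fraction of radiation absorbed along a path with optical depth τ is 1 − exp(−τ), and τ scales with the concentration of the absorber. The sketch below is a single-path toy that ignores re-emission, pressure broadening and the vertical structure of the atmosphere; the two optical depths are hypothetical stand-ins for a band centre and a band edge, not measured CO2 values.

```python
import math


def absorbed_fraction(optical_depth: float) -> float:
    """Fraction of a beam absorbed along a single path (Beer-Lambert law)."""
    return 1.0 - math.exp(-optical_depth)


def gain_from_doubling(optical_depth: float) -> float:
    """Extra fraction absorbed when the absorber concentration, and hence optical depth, doubles."""
    return absorbed_fraction(2.0 * optical_depth) - absorbed_fraction(optical_depth)


# Hypothetical optical depths, chosen only to contrast a saturated and an unsaturated wavelength.
BAND_CENTRE_TAU = 50.0
BAND_EDGE_TAU = 0.5

centre_gain = gain_from_doubling(BAND_CENTRE_TAU)
edge_gain = gain_from_doubling(BAND_EDGE_TAU)
```

Doubling the concentration adds essentially nothing at the band centre, where absorption is already total, while at the hypothetical band edge the absorbed fraction rises from about 39% to about 63%.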

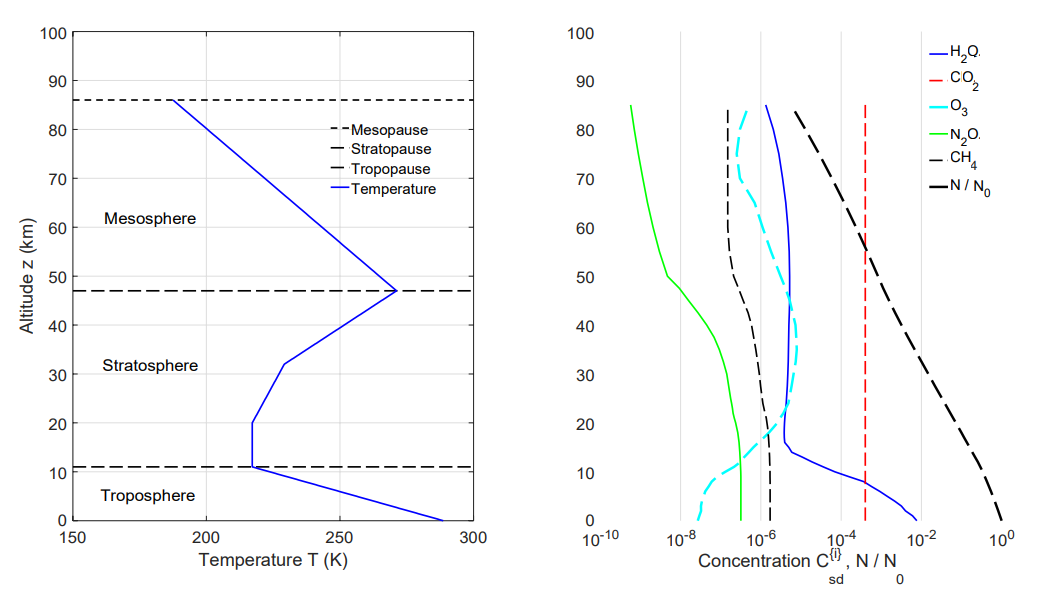

In reality, however, the situation is even more complicated, and it is therefore necessary to come up with another version of the explanation, which we call the “greenhouse effect for science faculties.” This version for adults reads as follows: The process of absorption and re-emission of photons takes place in all layers of the atmosphere, and the molecules of greenhouse gases “pass” photons from one to another until finally one of the photons emitted somewhere in the upper layer of the atmosphere flies off into space. The concentration of greenhouse gases naturally decreases with increasing altitude. So, when we add a little CO2, the altitude from which photons can escape into space shifts a little higher. And since the higher we go, the colder it is, the photons emitted there carry away less energy, resulting in more energy remaining in the atmosphere, making the planet warmer.
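The arithmetic of the “science faculties” version can be sketched with the Stefan-Boltzmann law, σT⁴. The assumptions are loud ones: a 288 K surface, a constant lapse rate of 6.5 K per kilometre, an effective emission height of 5 km, and a hypothetical 150 m rise in that height. Real radiative-transfer models resolve many layers and wavelengths; this toy only shows the direction and rough size of the effect the paragraph describes.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emitted_flux(surface_temp_k: float, lapse_rate_k_per_km: float, emission_height_km: float) -> float:
    """Flux radiated to space by a layer at the given height, assuming a constant lapse rate."""
    emission_temp_k = surface_temp_k - lapse_rate_k_per_km * emission_height_km
    return SIGMA * emission_temp_k ** 4


# Illustrative assumptions only.
before = emitted_flux(288.0, 6.5, 5.0)   # emission from 5.00 km, about 255.5 K
after = emitted_flux(288.0, 6.5, 5.15)   # emission from 5.15 km, about 254.5 K
deficit = before - after                 # energy per square metre no longer leaving
```

In this toy, raising the emission level by 150 m lowers the emitting temperature by about 1 K and the outgoing flux by roughly 3.7 W/m². It leaves out the geometry, convection, precipitation and feedbacks entirely.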

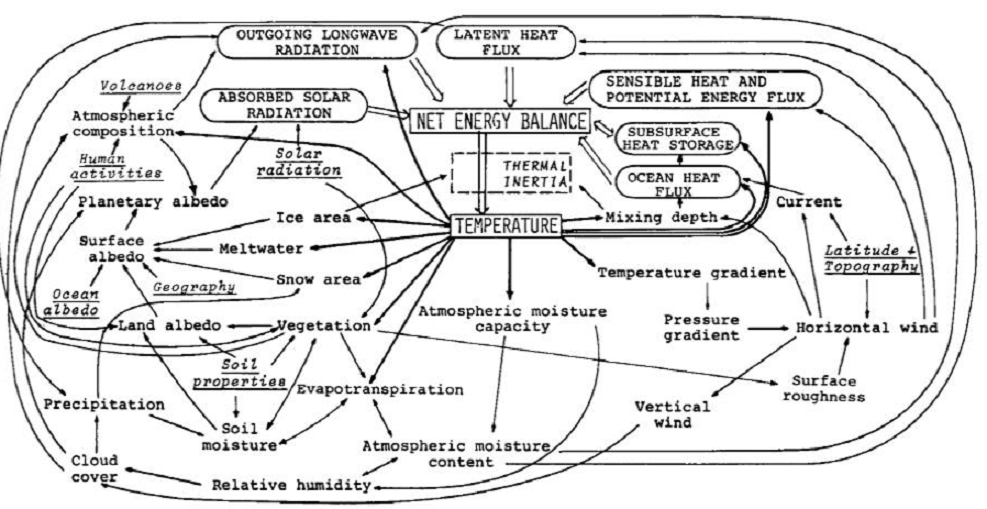

Note that the original version with the smiling sun above the greenhouse got somewhat more complicated. Some people start scratching their heads at this point and wondering if the above explanation is really that clear. When the concentration of CO2 increases, perhaps “cooler” photons escape to space (because the place of their emission moves higher), but won’t more of them escape (because the radius increases)? Shouldn’t there be more warming in the upper atmosphere? Isn’t the temperature inversion important in this explanation? We know that temperature starts to rise again from about 12 kilometers up. Is it really possible to neglect all convection and precipitation in this explanation? We know that these processes transfer enormous amounts of heat. What about positive and negative feedbacks? And so on and so on.

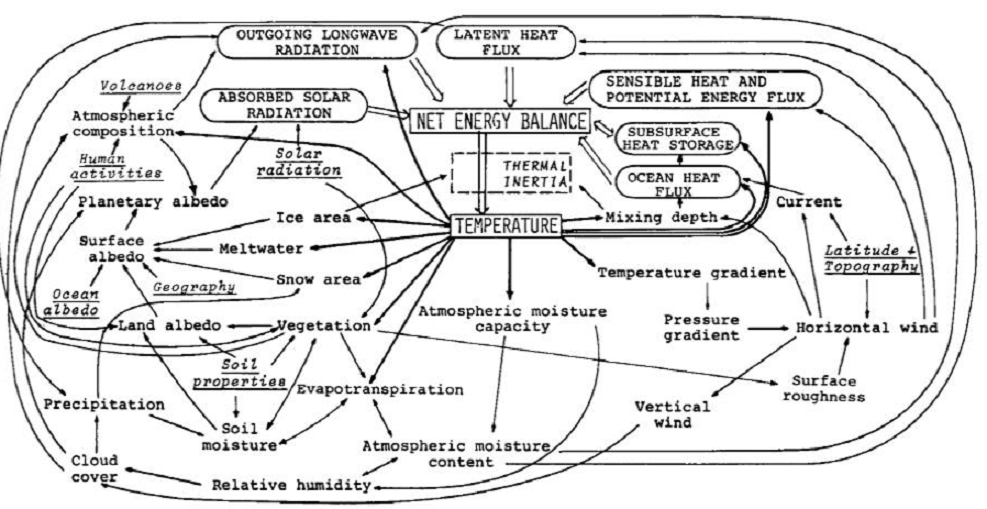

The more you ask, the more you find that the answers are not directly observable but rely on mathematical models. The models contain a number of experimentally (that is, with some error) measured parameters; for example, the spectrum of light absorption in CO2 (and all other greenhouse gases), its dependence on concentration, or a detailed temperature profile of the atmosphere.

This leads us to a radical statement: The hypothesis that an increase in the concentration of carbon dioxide in the atmosphere drives an increase in global temperature is not supported by any easily and comprehensibly explainable physical reasoning that would be clear to a person with an ordinary university education in a technical or natural science field. This hypothesis is ultimately supported by mathematical modelling that more or less accurately captures some of the many complicated processes in the atmosphere.

Flows and Feedbacks for Climate Models

However, this casts a completely different light on the whole problem. In the context of the dramatic failures of mathematical modelling in the recent past, the “greenhouse effect” deserves much more attention. We heard the claim that “science is settled” many times during the Covid crisis and many predictions that later turned out to be completely absurd were based on “scientific consensus.”

Almost every important scientific discovery began as a lone voice going against the scientific consensus of that time. Consensus in science does not mean much – science is built on careful falsification of hypotheses using properly conducted experiments and properly evaluated data. The number of past instances of scientific consensus is basically equal to the number of past scientific errors.

Mathematical modelling is a good servant but a bad master. The hypothesis of global climate change caused by the increasing concentration of CO2 in the atmosphere is certainly interesting and plausible. However, it is definitely not an experimental fact, and it is most inappropriate to censor an open and honest professional debate on this topic. If it turns out that mathematical models were – once again – wrong, it may be too late to undo the damage caused in the name of “combating” climate change.

Beware getting sucked into any model, climate or otherwise.

Addendum on Chameleon Models

Chameleon Climate Models

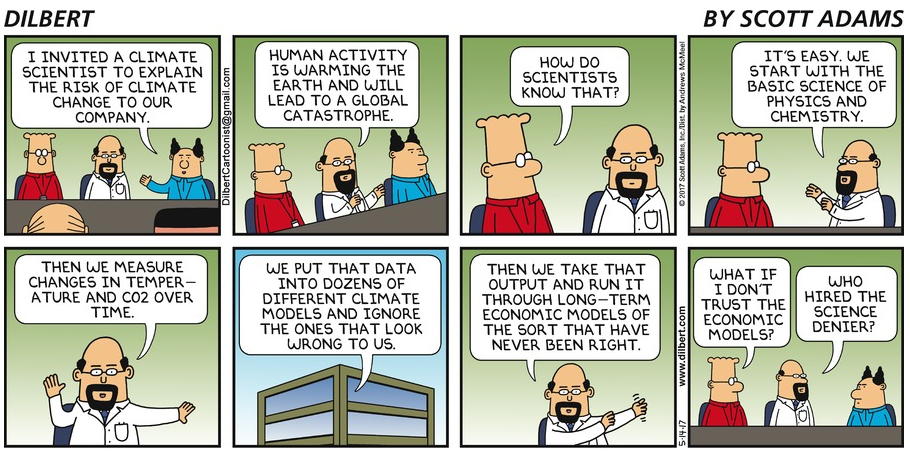

Footnote: Classic Cartoon on Models

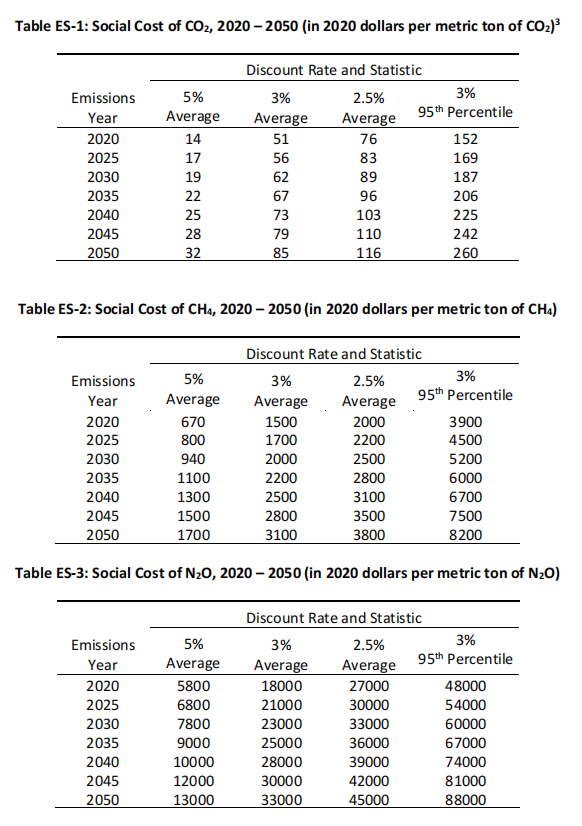

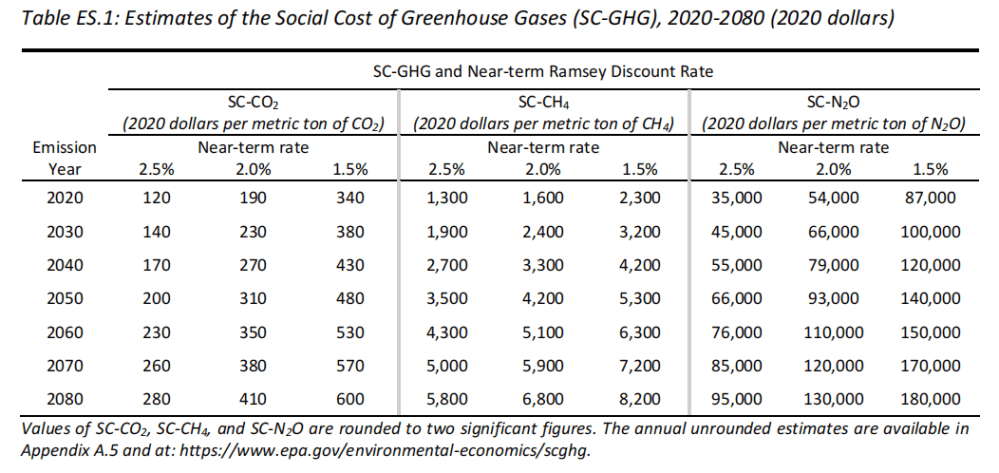

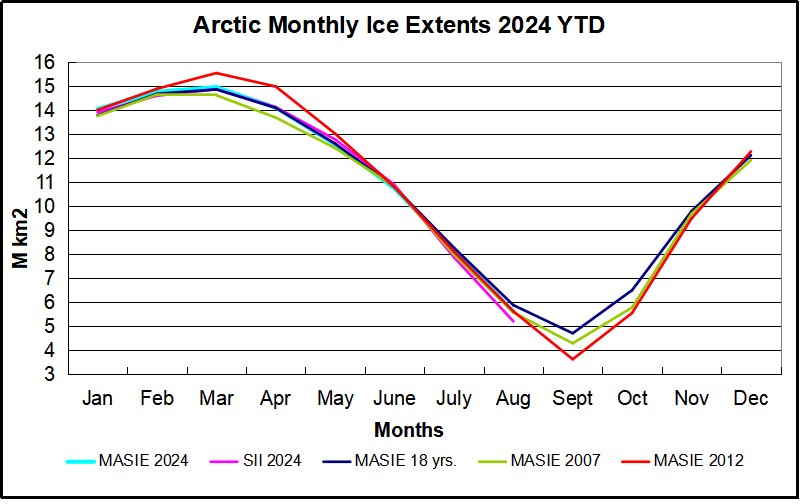

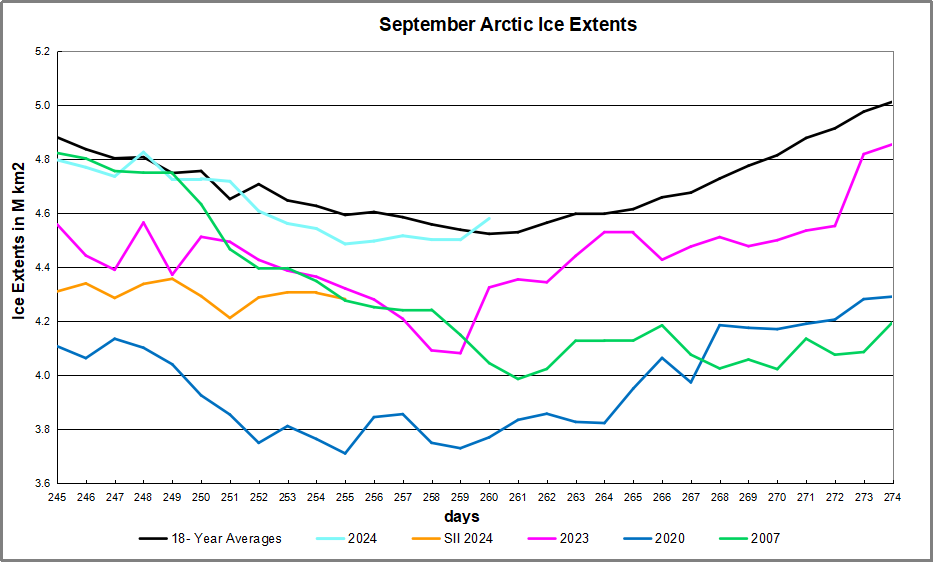
