Beware “Fact Checking” by Innuendo

Kip Hansen gives the game away in his Climate Realism article Illogically Facts —’Fact-Checking’ by Innuendo. Excerpts in italics with my bolds and added images.

The latest fad in all kinds of activism is to attack one’s ideological opponents via “fact checking”. We see this in politics and all the modern controversies, including, of course, Climate Science.

Almost none of the “fact checking sites” and “fact checking organizations” actually check facts. And, if they accidentally find themselves checking what we would all agree is a fact, and not just an opinion or point of view, invariably it is checked against a contrary opinion, a different point of view or an alternative fact.

The resulting fact check report depends on the purposes of the fact check. Some are done to confirm that “our guy” or “our team” is proved to be correct, or that the opposition is proved to be wrong, lying or spreading misinformation. When a fact is found to be different in any way from the desired fact, even the tiniest way, the original being checked is labelled a falsehood, or worse, an intentional lie (or conversely, other people are lying about our fact!). Nobody likes a liar, so this sort of fake fact checking accomplishes two goals – it casts doubt on the not-favored fact supposedly being checked and smears an ideological opponent as a liar. One stone – two birds.

While not entirely new on the fact-checking scene, an AI-enhanced effort has popped to the surface of the roiling seas of controversy: Logically Facts. “Logically Facts is part of Meta’s Third Party Fact-Checking Program (3PFC) and works with TikTok in Europe. We have been a verified signatory of the International Fact-Checking Network (IFCN) since 2020 and are a member of the Misinformation Combat Alliance (MCA) in India and the European Digital Media Observatory (EDMO) in Europe.” [ source ] Meta? “Meta Platforms…is the undisputed leader in social media. The technology company owns three of the four biggest platforms by monthly active users (Facebook, WhatsApp, and Instagram).” “Meta’s social networks are known as its Family of Apps (FoA). As of the fourth quarter of 2023, they attracted almost four billion users per month.” And TikTok? It has over a billion users.

I’m doubting that one can add up the 4 billion and the 1 billion to make 5 billion users of META and TikTok combined, but in any case, that’s a huge percentage of humanity any way one looks at it.

And who is providing fact-checking to those billions of people? Logically Facts [LF].

And what kind of fact-checking does LF do? Let’s look at an example that will deal with something very familiar to readers here: Climate Science Denial.

The definition put forward by the Wiki is:

“Climate change denial (also global warming denial) is a form of science denial characterized by rejecting, refusing to acknowledge, disputing, or fighting the scientific consensus on climate change.”

Other popular definitions of climate change denial include: attacks on solutions, questioning official climate change science and/or the climate movement itself.

If I had all the time left to me in this world, I could do a deep, deep dive into the Fact-Checking Industry. But, being limited, let’s look, together, at one single “analysis” article from Logically Facts:

‘Pseudoscience, no crisis’: How fake experts are fueling climate change denial

This article is a fascinating study in “fake-fact-checking by innuendo”.

As we go through the article, sampling its claims, I’ll alert you to any check of an actual fact – don’t hold your breath. If you wish to be pro-active, read the LF piece first, and you’ll have a better handle on what they are doing.

The lede in their piece is this:

“Would you seek dental advice from an ophthalmologist? The answer is obvious. Yet, on social media, self-proclaimed ‘experts’ with little to no relevant knowledge of climate science are influencing public opinion.”

The two editors of this “analysis” are listed as Shreyashi Roy [MA in Mass Communications and a BA in English Literature] and Nitish Rampal [ … based out of New Delhi and has …. a keen interest in sports, politics, and tech.] The author is said to be [more on “said to be” in a minute…] Anurag Baruah [MA in English Language and a certificate in Environmental Journalism: Storytelling earned online from the Thomson Foundation.]

Why do you say “said to be”, Mr. Hansen? If you had read the LF piece, as I suggested, you would see that it reads as if it was “written” by an AI Large Language Model, followed by editing for sense and sensibility by a human, probably, Mr. Baruah, followed by further editing by Roy and Rampal.

The lede is itself an illogic. First it speaks of medical/dental advice, pointing out, quite rightly, that they are different specializations. But then it complains that unnamed so-called self-proclaimed experts who LF claims “have little to no relevant knowledge of climate science” are influencing public opinion. Since these persons are so-far unnamed, LF’s AI, author and subsequent editors could not possibly know what their level of knowledge about climate science might be.

Who exactly are they smearing here?

The first is:

“One such ‘expert,’ Steve Milloy, a prominent voice on social media platform X (formerly Twitter), described a NASA Climate post (archive) about the impact of climate change on our seas as a “lie” on June 26, 2024.”

It is absolutely true that Milloy, who is well-known to be an “in-your-face” and “slightly over-the-top” critic of all things science that he considers poorly done, being over-hyped, or otherwise falling into his category of “Junk Science”, posted on X the item claimed.

LF, its AI, author and editors make no effort to check what fact/facts Milloy was calling a lie, or to check NASA’s facts in any way whatever.

You see, Milloy calling any claim from NASA “a lie” would be an a priori case of Climate Denial: he is refuting or refusing to accept some point of official climate science.

Who is Steve Milloy?

Steve Milloy is a Board Member & Senior Policy Fellow of the Energy and Environment Legal Institute, author of seven books and over 600 articles/columns published in major newspapers, magazines and internet outlets. He has testified by request before the U.S. Congress many times, including on risk assessment and Superfund issues. He is an Adjunct Fellow of the National Center for Public Policy Research.

“He holds a B.A. in Natural Sciences, Johns Hopkins University; Master of Health Sciences (Biostatistics), Johns Hopkins University School of Hygiene and Public Health; Juris Doctorate, University of Baltimore; and Master of Laws (Securities regulation) from the Georgetown University Law Center.”

It seems that many consider Mr. Milloy to be an expert in many things.

And the evidence for LF’s dismissal of Milloy as a “self-proclaimed expert” having “little to no relevant knowledge of climate science”? The Guardian, co-founder of the climate crisis propaganda outfit Covering Climate Now, said “JunkScience.com, has been called “the main entrepôt for almost every kind of climate-change denial”” and after a link listing Milloy’s degrees, pooh-poohed him for “lacking formal training in climate science.” Well, a BA in Natural Sciences might count for something. And a law degree is not nothing. The last link gives clear evidence that Milloy is a well-recognized expert, and it is obvious that the LF AI, author, and editors either did not read the contents of the link or simply chose to ignore it.

Incredibly, LF’s next target is “… John Clauser, a 2022 Nobel Prize winner in physics, claimed that no climate crisis exists and that climate science is “pseudoscience.” Clauser’s Nobel Prize lent weight to his statements, but he has never published a peer-reviewed paper on climate change.”

LF’s evidence against Clauser is The Washington Post in an article attacking not just Clauser, but a long list of major physicists who do not support the IPCC consensus on climate change: Willie Soon (including the lie that Soon’s work was financed by fossil fuel companies), Steve Koonin, Dick Lindzen and Will Happer. The Post article fails to discuss any of the reasons these esteemed, world-class physicists are not consensus-supporting club members.

Their non-conforming is their crime. No facts are checked.

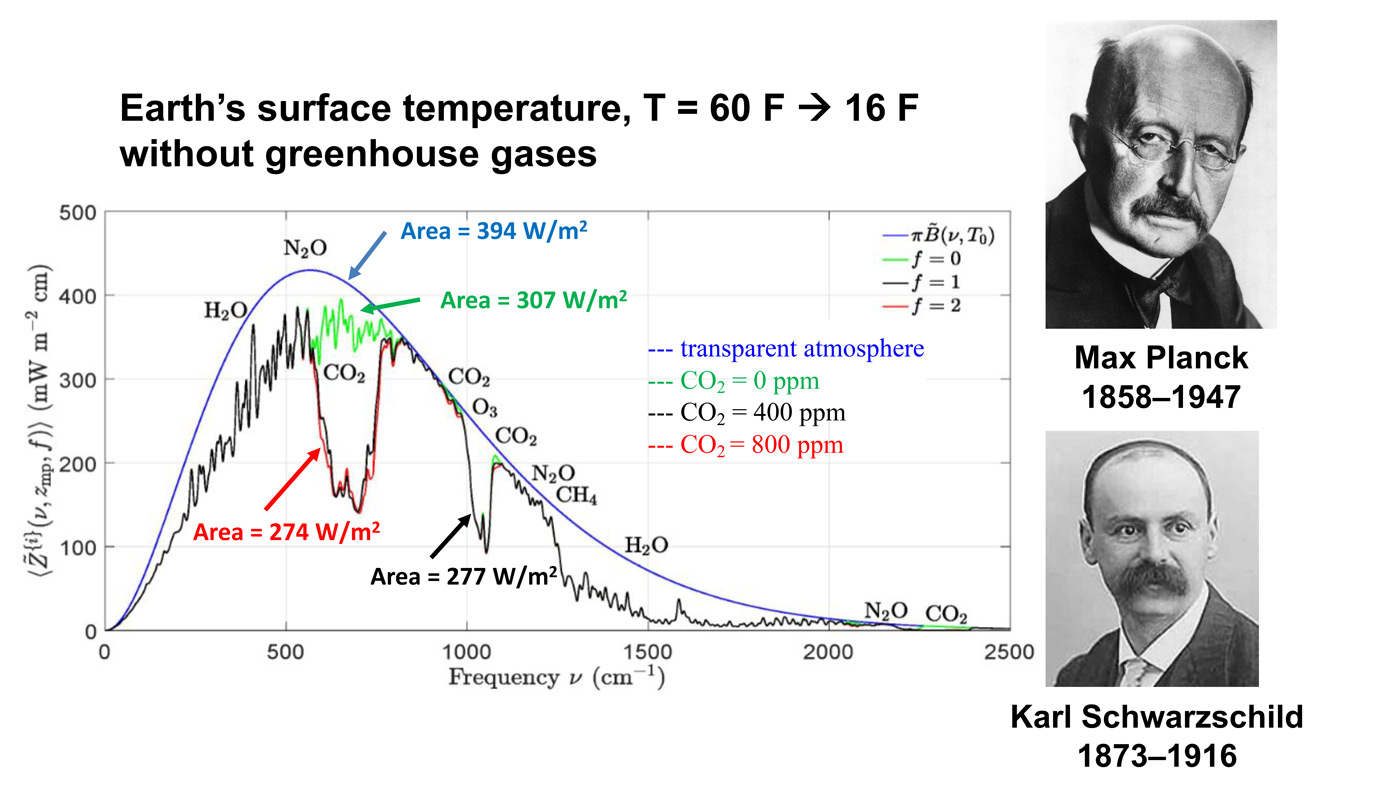

LF reinforces the attack on world-renowned physicists with a quote from Professor Bill McGuire: “Such fake experts are dangerous and, in my opinion, incredibly irresponsible—Nobel Prize or not. A physicist denying anthropogenic climate change is actually denying the well-established physical properties of carbon dioxide, which is simply absurd.”

McGuire is not a physicist and is not a climate scientist, but has a PhD in Geology and is a volcanologist and an IPCC contributor. He also could be seen as “lacking formal training in climate science.”

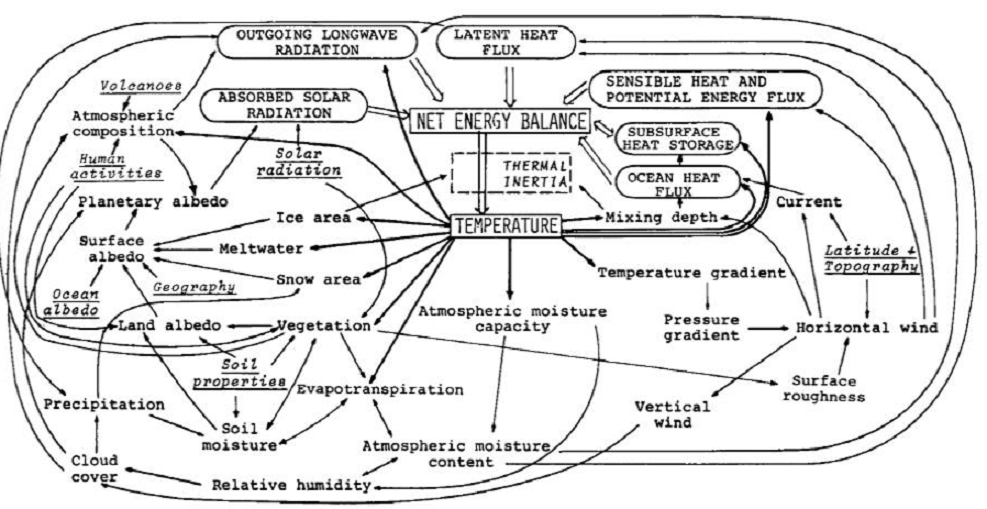

But McGuire has a point, which LF, its AI and its human editors seem to miss: the very basis of the CO2 Global Warming hypothesis is physics, not what is today called “climate science”. Thus, the physicists are the true experts (and not the volcanologists…).

LF then launches into the gratuitous comparison of “fake experts” in the anti-tobacco fight, alludes to oil industry ties, and then snaps right to John Cook.

John Cook, a world leader in attacking Climate Change Denial, is not a climate scientist. He is not a geologist, not an atmospheric scientist, not an oceanic scientist, not a physicist, not even a volcanologist. He “earned his PhD in Cognitive Science at the University of Western Australia in 2016”.

The rest of the Logically Facts fake-analysis is basically a re-writing of some of Cook’s anti-Climate Denialist screeds, maybe/probably resulting from an AI large language model trained on pro-consensus climate materials. Logically Facts is specifically and openly an AI-based effort.

LF proceeds to attack a series of persons, not their ideas, one after another: Tony Heller, Dr. Judith Curry, Patrick Moore and Bjørn Lomborg.

The expertise of these individuals in their respective fields is either ignored or brushed over.

Curry is a world-renowned climate scientist, former chair of the School of Earth and Atmospheric Sciences at the Georgia Institute of Technology. Curry is the author of the book on Thermodynamics of Atmospheres and Oceans, another book on Thermodynamics, Kinetics, and Microphysics of Clouds, and the marvelous groundbreaking Climate Uncertainty and Risk: Rethinking Our Response. Google Scholar returns over 10,000 references to a search of “Dr. Judith Curry climate”.

Lomborg is a socio-economist with an impressive record, a best-selling author and a leading expert on issues of energy dependence, value for money spent on international anti-poverty and public health efforts, etc. Richard Tol is mentioned negatively for daring to doubt the “97% consensus”, with no mention of his qualifications as a Professor of Economics and a Professor of the Economics of Climate Change.

Bottom Line:

Logically Facts is a Large Language Model-type AI, supplemented by writers and editors meant to clean up the mess returned by this chat-bot type AI. Thus, it is entirely incapable of making any value judgements between repeated slander, enforced consensus views, the prevailing biases of scientific fields and actual facts. Further, any LLM-based AI is incapable of Critical Thinking and drawing logical conclusions.

In short, Logically Facts is Illogical.