Thomas Allmendinger is a Swiss physicist educated at ETH Zurich whose practical experience is in the fields of radiology and elementary particle physics. His complete biography is here.

His independent research and experimental analyses of greenhouse gas (GHG) theory over the last decade led to several published studies, including his latest summary, The Real Origin of Climate Change and the Feasibilities of Its Mitigation (2023), in the journal Atmospheric and Climate Sciences. The paper is a thorough and detailed discussion; here I provide a synopsis of his methods, findings and conclusions. Excerpts are in italics with my bolds and added images.

Abstract

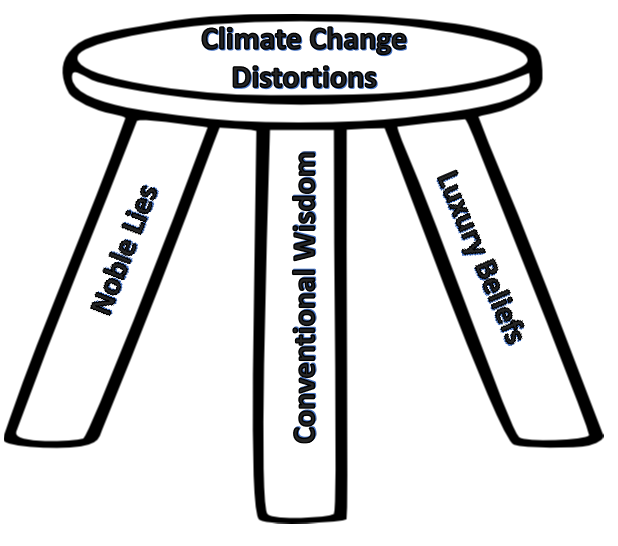

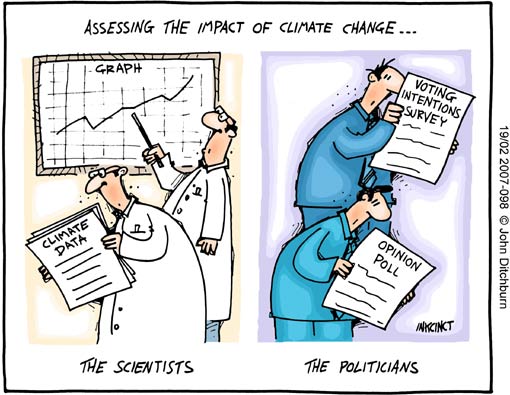

The present treatise is a synopsis of six important previous contributions by the author concerning atmospheric physics and climate change. Since this issue is influenced by politics like no other, and since the greenhouse doctrine, with CO2 as the culprit in climate change, is predominant, that theory has to be outlined and its flaws and inconsistencies revealed.

Beyond that, the author’s own contributions are the focus and are discussed in depth. The most important one concerns the discovery that gases absorb thermal radiation and warm up as a result, which implies a thermal radiation of gases that depends on their pressure. This delivers the final evidence that trace gases such as CO2 have no influence on the behaviour of the atmosphere, and thus on climate.

The most useful contribution, however, is the method for determining the solar absorption coefficient βs of coloured opaque plates. It provides the foundation for modifying materials with respect to their capacity for climate mitigation. The main influence comes from colouring, in particular of roofs, which should be painted, preferably light brown (not white, for aesthetic reasons).

It must be clear that such a drive to brighten the world would be the only chance of mitigating climate change, whereas the CO2-based greenhouse doctrine has to be abandoned. However, a global climate model with forecasts cannot be achieved, since the problem is too complex and several climate zones exist.

Background

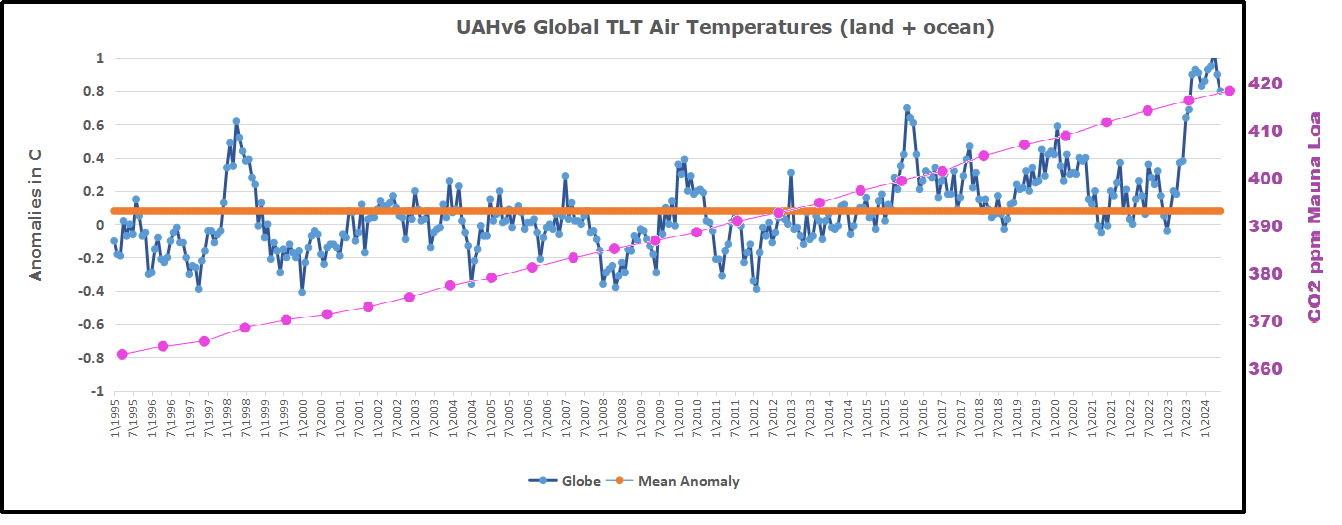

The alleged proof of the correctness of this theory was delivered 25 years later by an article in Scientific American in 1982 [4]. It reported the measurements of C.D. Keeling, made at two remote locations (the South Pole and Hawaii), according to which the atmospheric CO2 concentration had risen continuously from 316 to 336 ppm between 1958 and 1978 (cf. Figure 1), suggesting a correlation between the CO2 concentration and the average global temperature.

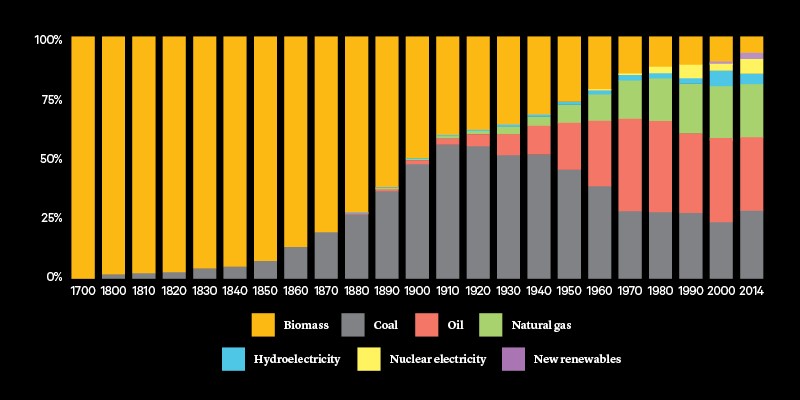

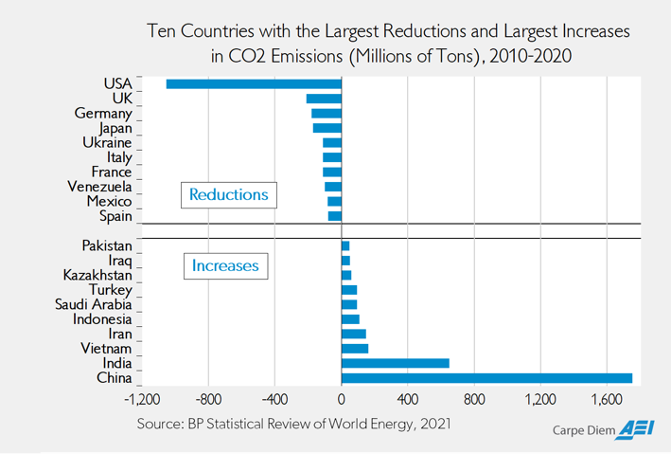

But apart from the fact that these CO2 concentrations are quite small (400 ppm = 0.04%), and that a constant proportion between the atmospheric CO2 concentration and the average global temperature could not be established over a longer period, it should be borne in mind that this conclusion was based on correlation, not causation, since only a temporal coincidence existed. Rather, other simultaneous influences could have been at work, in particular increasing urbanisation, which alters the structure and the coloration of large parts of the Earth’s surface.

However, this possibility was, and still is, categorically excluded. Only two explanations of climate change are considered: either an anthropogenic influence due to CO2 production, or a natural one that cannot be influenced. A third influence, the one suggested here, namely that of colours, is excluded a priori, even though nobody denies the influence of colouring on the Earth’s surface temperature or the existence of urban heat islands, and although an increase in winds and storms cannot be explained by the greenhouse theory.

However, institutions aiming to mitigate climate change through political measures had already been founded well in advance. Climate change was equated with industrial CO2 production, although no physical evidence for such a relation had been given; it was simply a matter of belief. In this regard, the UNFCCC (United Nations Framework Convention on Climate Change) was founded in 1992, supported by the IPCC (Intergovernmental Panel on Climate Change). Subsequently, alongside the UN, numerous so-called COPs (Conferences of the Parties) were held: the first in 1995 in Berlin, the best known in 1997 in Kyoto, and the most important in 2015 in Paris, which led to a climate agreement signed by representatives of 195 nations. In the process, more than 40,000 documents were compiled altogether. However, these documents did not meet the standards of scientific publications, since they were not peer-reviewed.

Subsequently, intensive research activity emerged, accompanied by a flood of publications and culminating in several textbooks. Several climate models were presented, with different scenarios and diverging long-term forecasts. This disregarded the fact that no global climate actually exists, only a plurality of climates, or rather of micro-climates and at best of climate zones, and that the Latin word “clima” (like the English word “clime”) means “region”. Moreover, an average global temperature is not really defined and thus not measurable, because the temperature differences are immense, for instance with regard to geographic latitude, altitude, the distinct conditions over sea and over land, and not least between the seasons and between day and night. Furthermore, the term “climate” encompasses rain and snow as well as winds and storms, which are not foreseeable in the long term. In particular, it should be realized that atmospheric processes are determined by energy, to which temperature contributes only a part.

2. The Historical Inducement for the Greenhouse Theory and Their Flaws

The scientific literature on the greenhouse theory is so extensive that it is difficult to find a clearly outlined and consistent description. Nevertheless, the publications of James E. Hansen [5] and of V. Ramanathan et al. [6] may be considered authoritative, and the textbooks [7] [8] and [9] are also worth mentioning. They assume that the Earth’s surface, heated by solar irradiation, emits thermal radiation into the atmosphere, which is warmed by heat absorption by “greenhouse gases” such as CO2 and CH4. Counter-radiation then occurs, which induces so-called radiative transfer. This aspect has spawned numerous theories (e.g. [10] [11] [12]). But the coexistence of several theories contradicts the scientific principle that only one explanation or theory is admissible for each phenomenon.

Even simple considerations may lead one to question this theory. For instance, given the present CO2 concentration of approx. 400 ppm (parts per million) = 0.04%, one may wonder how the temperature of the atmosphere can depend on such an extremely small amount of gas, and why this component should be the predominant or even the sole cause of the atmospheric temperature. This would actually mean that the temperature would be near absolute zero (−273 °C) if the air contained no CO2 or other greenhouse gases.

Indeed, no special physical knowledge is needed to realize that this theory cannot be correct. However, the fact that it has taken hold in the public mind and become an important political issue calls for a more detailed examination of the measuring methods and results on which this theory is founded, and of why misinterpretations arose. Two points in particular have to be considered. The first concerns the photometric measurements on gases in the electromagnetic range of thermal radiation, which Tyndall first carried out in the 1860s [13] and which were later extended to IR measurements evaluated by Plass in the 1950s [14]. The second concerns the application of the Stefan/Boltzmann law to the Earth-atmosphere system, first made by Arrhenius in 1896 [2] and more or less adopted by modern atmospheric physics. Both approaches are deficient, and their flaws alone call the greenhouse theory into question, even without the author’s own approaches.

2.1. The Photometric and IR-Measurement Methods for CO2

By varying the wavelength and measuring the respective absorption, the spectrum of a substance can be determined. This IR-spectroscopic method is widely used to characterize organic chemical substances and chemical bonds, usually in solution. But even there the method is not suited to quantitative measurements, i.e. the absorption of the IR-active substance is not proportional to its concentration, contrary to what the Beer-Lambert law predicts. This is probably even less the case in the gaseous phase, and all the more so at the high pressures that were applied to imitate the large path lengths in the atmosphere, of several (up to 10) kilometres. This disregards the fact that atmospheric pressure depends on the altitude above sea level, which rules out the assumption of a linear progression.

Moreover, it is disregarded that in IR spectrographs the effective radiation intensity is not known, and that the atmosphere is a gas mixture in which CO2 makes up only a small fraction, whereas pure CO2 was used for the spectroscopic measurements. Nevertheless, textbooks of atmospheric physics frequently mention the Beer-Lambert law, but without giving concrete numerical results for the absorbed radiation.

In both cases only the degree of absorption of the radiation was determined, i.e. the decrease in radiation intensity as it passes through a gas, but never the heating of the gas, i.e. its temperature increase. Instead, it was assumed that a gas is necessarily warmed up when it absorbs thermal radiation. According to this assumption, pure air, or rather a 4:1 mixture of nitrogen and oxygen, would not be expected to warm up when thermally irradiated, since it is IR-spectroscopically inactive, in contrast to pure CO2.

However, no physical formula exists that would allow such an effect to be calculated, and no corresponding empirical evidence has been provided so far. Rather, measurements recently performed by the author delivered contrary, surprising results.
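For reference, the Beer-Lambert law invoked in this section predicts that the decadic absorbance A = ε·c·l grows linearly with the concentration c and the path length l, while the transmitted fraction of the intensity is 10^(−A). The Python sketch below merely evaluates that textbook relation; the absorptivity ε = 0.5 is a hypothetical placeholder, not a measured CO2 coefficient. It shows that the predicted linearity concerns the absorbance, whereas the absorbed fraction of the radiation saturates.

```python
def absorbance(epsilon, concentration, path_length):
    """Decadic absorbance A = epsilon * c * l predicted by the Beer-Lambert law."""
    return epsilon * concentration * path_length


def transmitted_fraction(epsilon, concentration, path_length):
    """Fraction of the incident intensity that passes through: I / I0 = 10**(-A)."""
    return 10.0 ** (-absorbance(epsilon, concentration, path_length))


def absorbed_fraction(epsilon, concentration, path_length):
    """Fraction of the incident intensity removed from the beam: 1 - I / I0."""
    return 1.0 - transmitted_fraction(epsilon, concentration, path_length)


# Hypothetical absorptivity: doubling the concentration doubles A (0.5 -> 1.0),
# but raises the absorbed fraction only from about 0.68 to 0.90.
EPSILON = 0.5
for c in (1.0, 2.0):
    print(c, absorbance(EPSILON, c, 1.0), round(absorbed_fraction(EPSILON, c, 1.0), 2))
```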

2.2. The Impact of Solar Radiation onto the Earth Surface and Its Reflexion

Besides, the usual greenhouse theory involves a further error. It results from the fact that the atmosphere is only partly warmed by direct solar radiation. It is also warmed indirectly, via the Earth’s surface, which is heated by solar irradiation and transmits the absorbed heat to the atmosphere either by thermal conduction or by thermal radiation. Air convection also contributes a considerable part. This process is called Anthropogenic Heat Flux (AHF) and has recently been discussed by Lindgren [16]. Here, however, a more fundamental view is outlined.

The thermal radiation corresponds to the radiative emission of a so-called “black body”. Such a body is defined as one that entirely absorbs electromagnetic radiation in the range from IR to UV light. Likewise, it emits more electromagnetic radiation the higher its temperature. Its radiative behaviour is described by the law of Stefan and Boltzmann. . . According to this law, the radiated power Φ of a black body is proportional to the fourth power of its absolute temperature. This power is usually expressed per unit area, with the dimension W/m².

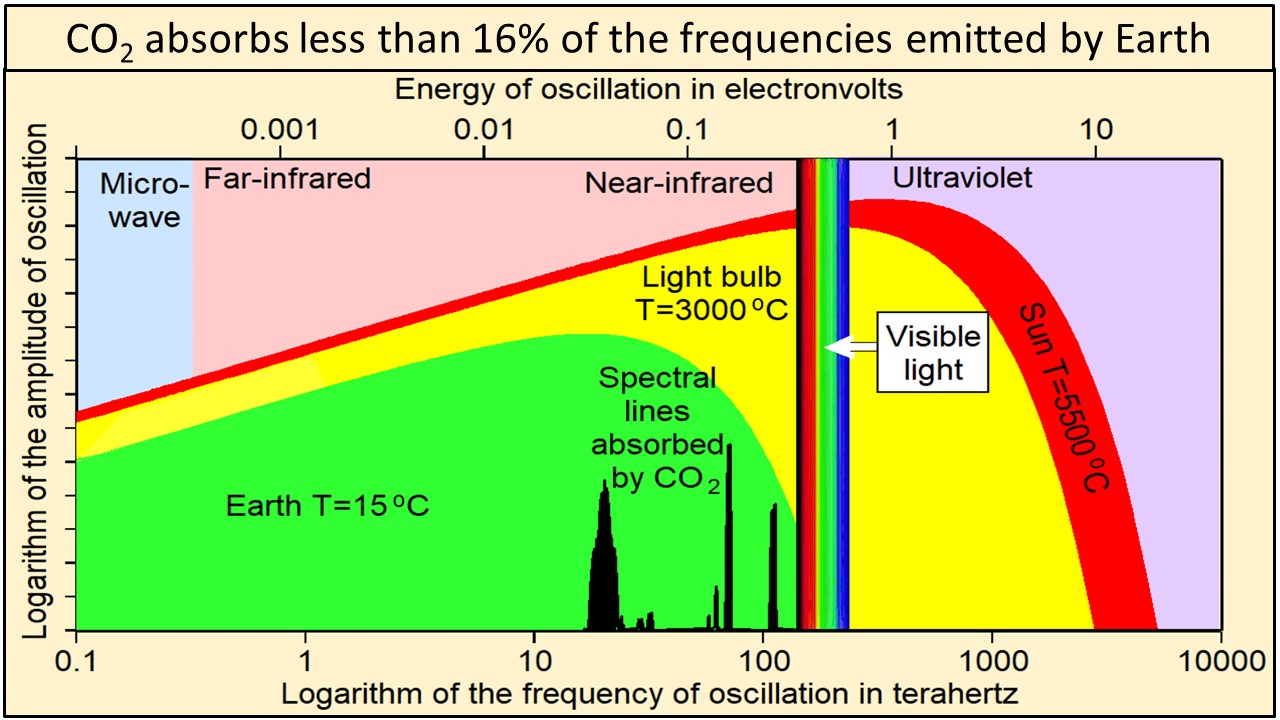
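The law stated above reads Φ = σ·T⁴, with the Stefan/Boltzmann constant σ ≈ 5.670 × 10⁻⁸ W·m⁻²·K⁻⁴. A minimal Python sketch of the law and its inverse, for illustration only (the 288 K example temperature is our choice):

```python
SIGMA = 5.670374419e-8  # Stefan/Boltzmann constant, W·m^-2·K^-4


def blackbody_flux(temperature_k):
    """Power radiated per unit area by a black body, Phi = SIGMA * T**4 (W/m^2)."""
    if temperature_k < 0:
        raise ValueError("absolute temperature cannot be negative")
    return SIGMA * temperature_k ** 4


def blackbody_temperature(flux_w_m2):
    """Inverse relation: the temperature (K) at which a black body emits flux_w_m2."""
    return (flux_w_m2 / SIGMA) ** 0.25


# A black body at 288 K (15 °C) emits roughly 390 W/m^2;
# doubling the absolute temperature multiplies the flux by 2**4 = 16.
print(round(blackbody_flux(288.0), 1))
print(blackbody_flux(600.0) / blackbody_flux(300.0))
```

Because of the fourth power, small changes in absolute temperature produce comparatively large changes in emitted power, which is why the relation is usually applied only to equilibrium (limiting) temperatures.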

This formula says nothing about the wavelength or the frequency of the emitted light. That is only possible with Max Planck’s formula, published in 1900. According to it, the higher the temperature, the higher the frequencies of the emitted light tend to be. At low temperatures only heat, i.e. IR radiation, is emitted. At higher temperatures the body begins to glow: first red, and later white, a mixture of different colours. Finally, UV radiation appears. The emission spectrum of the sun agrees quite well with Planck’s emission spectrum for approx. 6000 K.

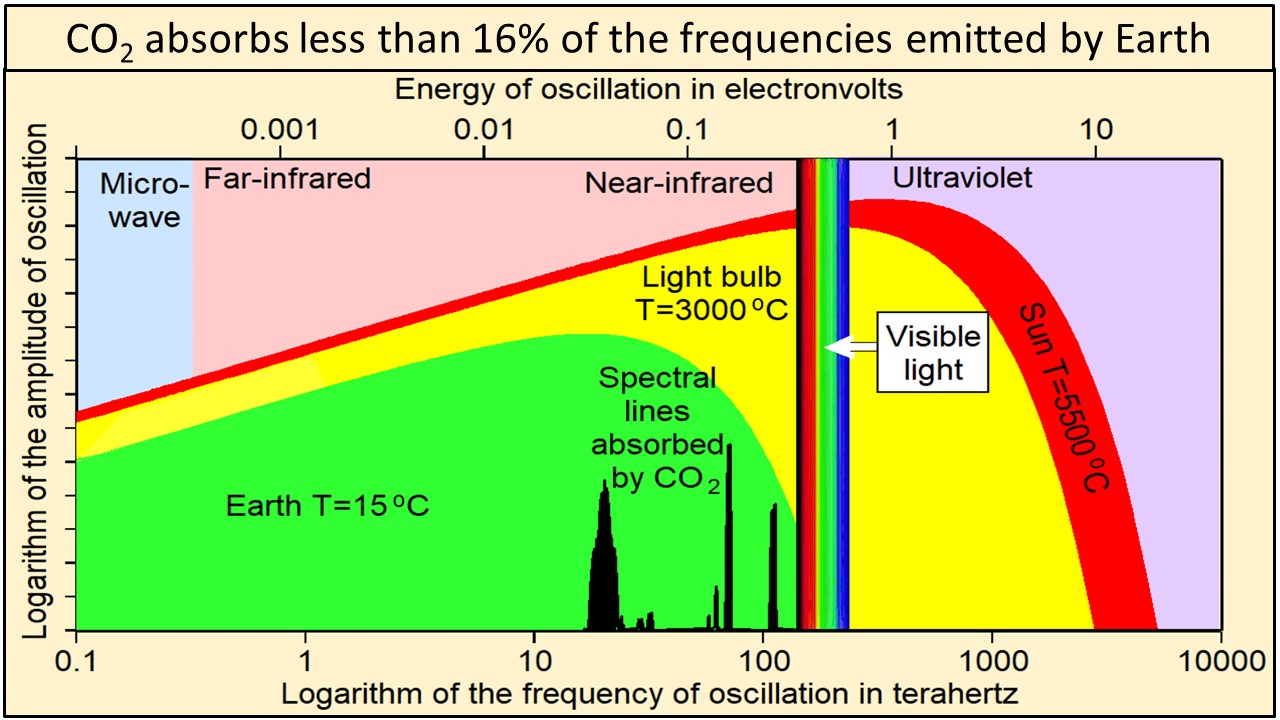

Black CO2 absorption lines are not to scale.
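Planck’s formula, and the Wien displacement law that follows from it, make the statement about temperature and emitted wavelength quantitative: the wavelength of maximum emission is λmax = b/T with b ≈ 2.898 × 10⁻³ m·K. The Python sketch below evaluates both; the temperatures used (6000 K for the sun, 288 K as an illustrative Earth-surface value) are our choices.

```python
import math

H = 6.62607015e-34        # Planck constant, J·s
C = 2.99792458e8          # speed of light, m/s
K_B = 1.380649e-23        # Boltzmann constant, J/K
WIEN_B = 2.897771955e-3   # Wien displacement constant, m·K


def planck_radiance(wavelength_m, temperature_k):
    """Planck's law: spectral radiance B_lambda(T) in W·sr^-1·m^-3."""
    prefactor = 2.0 * H * C ** 2 / wavelength_m ** 5
    exponent = H * C / (wavelength_m * K_B * temperature_k)
    return prefactor / math.expm1(exponent)  # expm1 keeps precision at long wavelengths


def peak_wavelength_um(temperature_k):
    """Wien's displacement law: wavelength of maximum emission, in micrometres."""
    return WIEN_B / temperature_k * 1e6


for t in (6000.0, 288.0):
    print(t, round(peak_wavelength_um(t), 2))  # about 0.48 µm (visible) vs about 10 µm (IR)
```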

This model can be applied to the Earth’s surface by treating it as a coloured opaque body. On the one hand, with respect to its thermal emission, it behaves like a black body obeying the Stefan/Boltzmann law. On the other hand, it absorbs only a fraction βs of the incident solar light, converting it into heat, while the complementary fraction is reflected. However, the intensity of the incident solar light at the Earth’s surface, Φsurface, is not equal to its extraterrestrial intensity outside the atmosphere; it depends on the altitude above sea level, since the atmosphere absorbs part of the sunlight. Remarkably, the atmosphere also behaves like a black body, but only with respect to emission: on the one hand it radiates inwards to the Earth’s surface, and on the other it radiates outwards towards the rest of the atmosphere.

However, this approach involves three considerable snags:

• Firstly, TEarth denotes the constant limiting temperature of the Earth’s surface, which is attained only when the sun has shone constantly onto the same parcel with the same intensity. Since the position of the sun changes continuously, this is never the case, except for thin plates that are thermally insulated at the bottom and at the sides.

• Secondly, this formula says nothing about the rate of the warming-up process, which also depends on the heat capacity of the plate involved. This is possible only with the author’s approach (see Chapter 3). Nevertheless, such statements are often attempted with it (e.g. in [35]), not least within radiative transfer approaches.

• Thirdly, it is in principle impossible to determine absolute values of the solar reflection coefficient αs with an albedometer or a similar apparatus, because the intensity of the incident solar light is independent of the distance from the surface, whereas the intensity of the reflected light depends on it. The values obtained thus depend on the height above the Earth’s surface at which the apparatus is positioned, so they are not unambiguous but only relative.

In the modern approach of Hansen et al. [5], the Earth is treated as a single black body, disregarding its division into a solid and a gaseous part, and thus disregarding the interface between the Earth’s surface and the atmosphere where sunlight is reflected. As a consequence, the term with Tair in Equation (4b) disappears, and instead a total Earth temperature appears that can be neither defined nor determined. This approach has been widely adopted in textbooks, even though it is wrong (see also [15]).

Altogether, it was neglected that the proportionality of the radiation intensity to the fourth power of the absolute temperature is valid only once a steady equilibrium has been attained. In contrast, the method described below enables the direct determination of the colour-dependent solar absorption coefficient βs = 1 − αs using well-defined plates. Furthermore, the time/temperature courses are modelled mathematically up to the limiting temperatures. Finally, relative field measurements are possible based on these results.
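The coloured-opaque-body model of this section can be illustrated with a deliberately simplified energy balance. The assumptions are ours and are not the author’s Equation (4b), which is not reproduced in this excerpt: the surface absorbs βs·Φsurface, additionally receives the atmosphere’s counter-radiation as black-body radiation at Tair, emits σ·T⁴, and conduction, convection and heat storage are ignored. As the first snag above stresses, the result is only a limiting temperature.

```python
SIGMA = 5.670374419e-8  # Stefan/Boltzmann constant, W·m^-2·K^-4


def limiting_surface_temperature(beta_s, phi_surface, t_air_k=0.0):
    """Steady-state surface temperature (K) from the simplified balance

        beta_s * phi_surface + SIGMA * t_air_k**4 = SIGMA * T**4

    Illustrative assumptions only: black-body emission from the surface,
    black-body counter-radiation from the air at t_air_k, and no conduction,
    convection or heat storage. t_air_k = 0 switches counter-radiation off.
    """
    if not 0.0 <= beta_s <= 1.0:
        raise ValueError("beta_s must lie between 0 and 1")
    absorbed = beta_s * phi_surface + SIGMA * t_air_k ** 4
    return (absorbed / SIGMA) ** 0.25


# Hypothetical light and dark surfaces under the 1040 W/m^2 of Figure 7:
# the darker surface (larger beta_s = 1 - alpha_s) settles at a higher temperature.
for beta in (0.3, 0.9):
    print(beta, round(limiting_surface_temperature(beta, 1040.0, t_air_k=288.0), 1))
```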

3. The Measurement of Solar Absorption-Coefficients with Coloured Plates

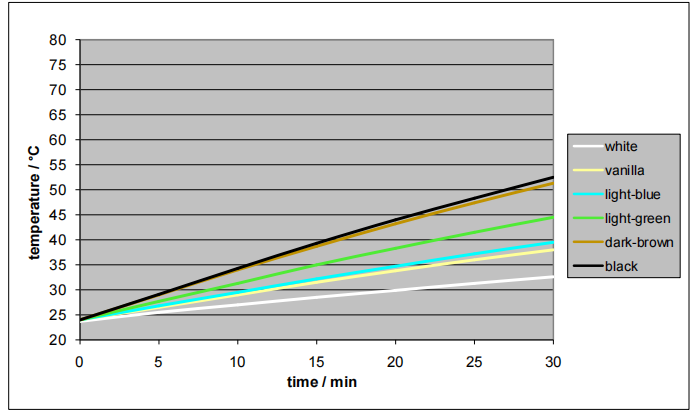

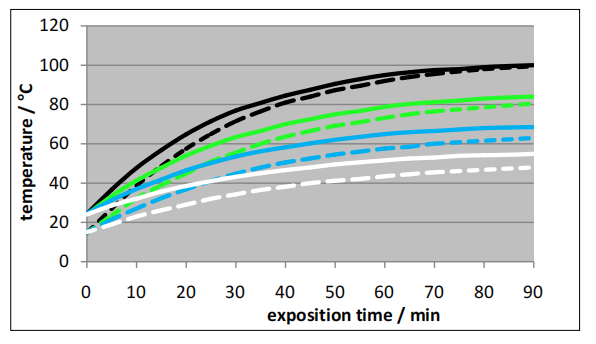

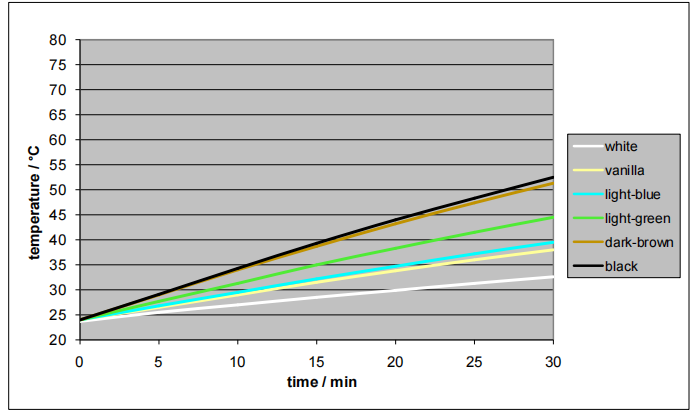

In the lab-like method described here and published in [20], it was not the reflected but the absorbed solar radiation that was determined, by measuring the temperature courses of coloured square plates (10 × 10 × 2 cm³) onto which sunlight of known intensity fell vertically. The plate temperatures were measured with mercury thermometers, and the intensity of the sunlight with an electronic “Solarmeter” (KIMO SL 100). The plates were embedded in Styrofoam and covered with a thin transparent foil acting as an outer window, to minimize erratic cooling by atmospheric turbulence (Figure 5). Their heat capacities were taken from literature values. Both the colours and the plate material were varied. Aluminium was used as the reference material, being favourable because of its high heat capacity, which entails a low heating rate, and its homogeneous heat distribution. For comparison, additional measurements were made with wooden plates, bricks and natural stones. To keep the plates optimally oriented towards the sun at all times, six plate modules were mounted on an adjustable panel (Figure 6).

Evaluation of the curves in Figure 7 yielded the colour-specific solar absorption coefficients βs shown in Figure 9. They were independent of the plate material. Remarkably, the value for green was relatively high.

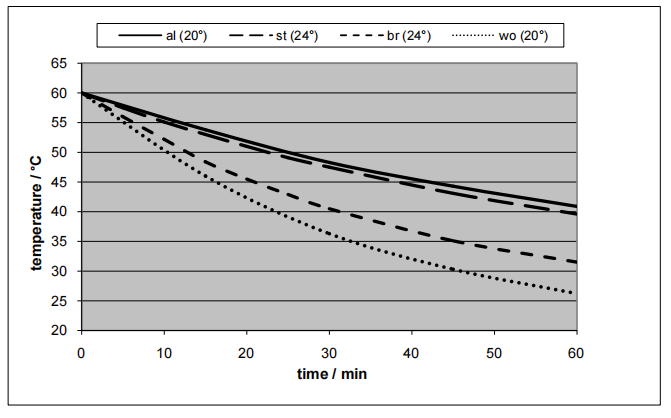

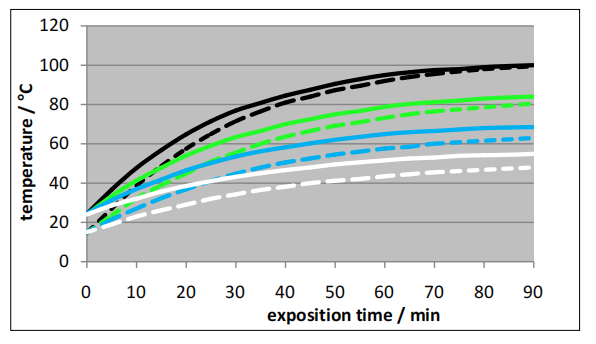

Figure 7. Warming-up of aluminium plates at 1040 W/m² [20].

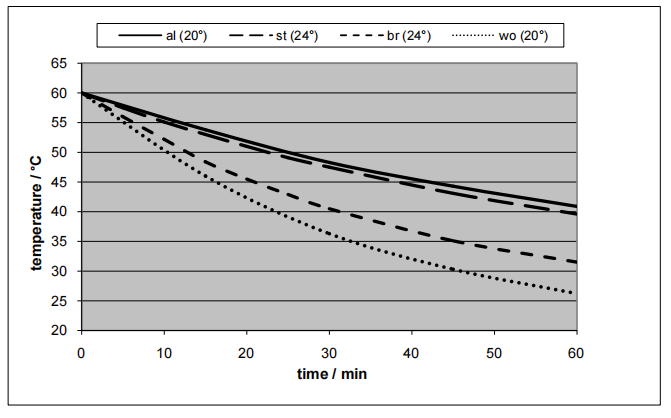

Figure 10. Cooling-down of different materials (in brackets: ambient temperature) [20]. al = aluminium 20 mm; st = stone 20.5 mm; br = brick 14.5 mm; wo = wood 17.5 mm.
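The excerpt does not spell out how βs was extracted from the warming curves of Figure 7. One simple possibility, offered here as our own hedged sketch rather than the author’s published evaluation in [20], uses the initial slope: while the plate is still at ambient temperature its heat losses are negligible, so C·dT/dt ≈ βs·Φ·A, where C is the plate’s heat capacity and A its irradiated face. The aluminium density and specific heat are standard handbook values, and the slope is hypothetical.

```python
# Handbook values for aluminium (assumed here, not taken from the paper)
AL_DENSITY_G_CM3 = 2.70
AL_SPECIFIC_HEAT_J_GK = 0.897


def plate_heat_capacity(side_cm, thickness_cm, density_g_cm3, specific_heat_j_gk):
    """Heat capacity C (J/K) of a square plate: mass times specific heat."""
    mass_g = side_cm * side_cm * thickness_cm * density_g_cm3
    return mass_g * specific_heat_j_gk


def beta_from_initial_slope(slope_k_per_s, heat_capacity_j_k, area_m2, irradiance_w_m2):
    """Estimate beta_s from the initial heating rate.

    Assumes the plate starts at ambient temperature, so losses vanish at t = 0
    and C * dT/dt = beta_s * Phi * A.
    """
    return heat_capacity_j_k * slope_k_per_s / (irradiance_w_m2 * area_m2)


# 10 x 10 x 2 cm aluminium plate with a 0.01 m^2 irradiated face at 1040 W/m^2
c_plate = plate_heat_capacity(10.0, 2.0, AL_DENSITY_G_CM3, AL_SPECIFIC_HEAT_J_GK)  # about 484 J/K
beta_s = beta_from_initial_slope(0.0107, c_plate, 0.01, 1040.0)  # hypothetical slope of 0.0107 K/s
print(round(c_plate, 1), round(beta_s, 2))
```

With this heat capacity, an initial slope of about 0.64 K per minute corresponds to βs ≈ 0.5; the large heat capacity of the aluminium plate is what keeps the heating rate this low.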

4. Thermal Gas Absorption Measurements

If the warming-up behaviour of gases is to be determined by temperature measurements, interference by the walls of the gas vessel must be taken into account, since they have a significantly higher heat capacity than the gas, which implies a slower warming-up rate. Since solid materials absorb thermal radiation more strongly than gases do, there is a risk that the vessel walls are warmed directly by the radiation and subsequently transfer the heat to the gas. Finally, even the thin glass walls of the thermometers may disturb the measurements by absorbing thermal radiation.

For these reasons, square tubes with a relatively large cross-section (20 cm) were used, made of 3 cm thick Styrofoam plates and closed at the ends with thin plastic foils. To measure the temperature course along the tube, mercury thermometers were mounted at three positions (bottom, middle and top), with their tips covered by aluminium foil. The test gases were supplied from steel cylinders equipped with reducing valves. They were introduced through a connector over approx. one hour, because the tube was neither gastight nor sturdy enough to be evacuated. The filling process was monitored with a hygrometer, since the air to be displaced was slightly humid. Afterwards, the tube was improved by attaching adhesive foils and thin aluminium foils (see Figure 13). The equipment and the results are reported in [21].

Figure 13. Solar-tube, adjustable to the sun [21].

Figure 14. Heat-radiation tube with IR-spot [21].

The results obtained with IR spots on different gases (air, carbon dioxide, and the noble gases argon, neon and helium) provided essential insights. In each case, the irradiated gas warmed up until a stable limiting temperature was attained. As with irradiated coloured solid plates, the temperature rose until an equilibrium state was reached in which the rate of heat absorption equalled the rate of heat emission.

Figure 15. Time/temperature curves for different gases [21] (150 W spot, middle thermometer position).

Interpretation of the Results

Comparing the results obtained with the IR spots to those obtained with solar radiation supported the conclusion that comparatively short-wave IR radiation was involved (between 0.9 and 1.9 μm). However, subsequent measurements with a hotplate (<90 °C) placed at the bottom of the heat-radiation tube ([15], Figure 16) showed that long-wave thermal radiation (as emitted by cooler bodies such as the Earth’s surface) also raises the temperature of air and of carbon dioxide, cf. Figure 17.

Thus, the absorption effect in gases discovered here extends over a relatively wide wavelength range, in contrast to IR-spectroscopic measurements, where only narrow absorption bands appear. The effect is not exceptional: it occurs in all gases, including noble gases, and leads to a significant temperature increase, even though it is not detectable spectroscopically. This temperature increase masks any possible temperature increase due to the specific IR absorption, since the intensity share of the latter is very small.

This may be explained as follows: in any case, an oscillation of particles induced by thermal radiation plays a part. But whereas in specific IR absorption the nuclei within the molecules oscillate along the chemical bond (which must be polar), in the case relevant here it is the electron shell of the atoms, or rather the electron orbit, that oscillates and thereby carries oscillation energy. Evidently, this oscillation energy can be converted into kinetic translational energy of the entire atoms, which corresponds to the gas temperature, and vice versa.

5. The Altitude-Paradox of the Atmospheric Temperature

The statement that it is colder in the mountains than in the lowlands is trivial. Explaining this phenomenon is not, since the reason is not readily evident. The usual explanation is that rising air cools because it expands under the decreasing air pressure. However, this cannot apply to plateaus far away from hillsides that generate ascending air currents. The phenomenon appears virtually paradoxical given that the intensity of solar irradiation is much greater in the mountains than in the lowlands, especially its UV component. The lower intensity in the lowlands is due to scattering and absorption of sunlight within the atmosphere, not only in the IR range but across the whole remaining spectrum. If this scattering, known as Rayleigh scattering, did not occur, the sky would be black rather than blue.

However, the direct absorption of sunlight is not the only factor determining the temperature of the atmosphere. Its warming via the Earth’s surface, which is heated by absorbed solar irradiation, is even more important. This heat transfer occurs partly by heat conduction and air convection, and partly by thermal radiation. But an additional factor has to be taken into account: the thermal radiation of the atmosphere itself. It is directed partly towards the Earth (as counter-radiation) and partly towards space. The situation thus becomes quite complicated, all the more so since a formal treatment based on the Stefan/Boltzmann relation would require equilibrated limiting temperature conditions. Above all, that relation reveals no influence of the atmospheric pressure, which evidently plays a considerable part.

To study the dependence on atmospheric pressure, it would be desirable to vary only the pressure while all other terms remain constant. Varying the altitude of the measuring station above sea level, however, also changes the intensity of the sunlight and the ambient air temperature. The measurements reported here were made at two locations in Switzerland: at Glattbrugg (near Zürich), 430 m above sea level, and at the top of the Furka Pass, 2430 m above sea level. According to the barometric height formula, the respective atmospheric pressures were approx. 0.948 and 0.748 bar. At each location, two measurements were made over the same period.
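The barometric height formula mentioned above can be sketched in its simplest, isothermal form, p(h) = p0·exp(−M·g·h/(R·T)). The reference pressure p0 = 1.01325 bar and the uniform temperature of 288.15 K are our assumptions, since the excerpt does not say which values the author used. With these defaults both pressures come out about 1.6% higher than the quoted 0.948 and 0.748 bar, while their ratio (≈ 0.789) agrees with the ratio of the quoted values, which suggests the author used a somewhat lower reference pressure.

```python
import math

M_AIR = 0.0289644     # molar mass of dry air, kg/mol
G = 9.80665           # standard gravity, m/s^2
R = 8.314462618       # universal gas constant, J/(mol·K)


def barometric_pressure(h_m, p0_bar=1.01325, t_k=288.15):
    """Isothermal barometric height formula: p = p0 * exp(-M*g*h / (R*T)), in bar."""
    return p0_bar * math.exp(-M_AIR * G * h_m / (R * t_k))


p_glattbrugg = barometric_pressure(430.0)   # about 0.963 bar with these defaults
p_furka = barometric_pressure(2430.0)       # about 0.760 bar with these defaults
ratio = p_furka / p_glattbrugg              # about 0.789, independent of p0_bar
print(round(p_glattbrugg, 3), round(p_furka, 3), round(ratio, 3))
```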

Figure 18. Comparison of the temperature courses during two measurements [24] (continuous lines: Glattbrugg; dotted lines: Furka).

These findings confirm that a greenhouse effect of a kind does occur, since the atmosphere radiates heat back to the Earth’s surface. But this radiation has nothing to do with trace gases such as CO2; rather, it depends on the atmospheric pressure, which diminishes at higher altitudes.

If the oxygen content of the air were considerably reduced, the atmospheric pressure, and consequently the temperature, would decrease generally. This may explain the occurrence of glacial periods. However, other explanations are possible, in particular a temporary decrease in solar activity.

Overall, it can be stated that climate change cannot be explained by ominous greenhouse gases such as CO2, but mainly by artificial alterations of the Earth’s surface, particularly in urban areas, through darkening and through enlargement of the surface (so-called roughness). These urban alterations are due not least to the enormous global population growth, but also to the character of modern buildings, which tend to get ever taller and use alternative materials such as concrete and glass. Consequently, measures have to focus on these alterations, first drawing on previous work and then applying the method presented here.

7. Conclusions

The author’s work summarized here concerns atmospheric physics with respect to climate change and comprises three specific, interrelated points based on several previous publications: the first is a critical discussion and refutation of the customary greenhouse theory; the second outlines the method for measuring the thermal-radiative behaviour of gases; and the third describes a lab-like method for characterizing the solar-reflective behaviour of solid opaque bodies, in particular for determining the colour-specific solar absorption coefficients.

As to the first point, three main flaws were revealed:

• Firstly, the inadequacy of photometric methods for determining the heating of gases exposed to thermal radiation;

• Secondly, the lack of a causal relationship between the atmospheric CO2 concentration and the average global temperature: the empirically observed simultaneous increase of the concentration and of the global temperature was taken to prove a causal relationship, whereas it only shows a correlation; and

• Thirdly, the inadmissible application of the Stefan/Boltzmann law to the entire Earth (including the atmosphere) against space, instead of to the boundary between the Earth’s surface and the atmosphere.

As to the second point, account has to be taken of the discovery that every gas, even a noble gas, warms up when thermally irradiated, attaining a limiting temperature at which the absorbed radiation is in equilibrium with the emitted radiation. In particular, pure CO2 behaves similarly to pure air. Applying kinetic gas theory, a dependence of the emission intensity on the pressure, on the square root of the absolute temperature, and on the particle size was found and explained theoretically by oscillation of the electron shell.

As to the third point, not only was a lab-like method for measuring the colour-dependent solar absorption coefficient βs developed, but also a mathematical model of the time/temperature course of coloured opaque plates irradiated by sunlight. The (colour-dependent) warming-up and the (colour-independent) cooling-down are determined separately. Here too, a limiting temperature occurs at which the intensity of the absorbed solar light equals the intensity of the emitted thermal radiation. In the absence of wind convection, the so-called heat transfer coefficient B is invariant; its empirically determined value is approx. 9 W·m⁻²·K⁻¹.
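A minimal lumped-capacitance sketch of such a time/temperature course, assuming (our assumption, not necessarily the author’s published model) that the net heat loss of the plate is linear in its excess temperature with the coefficient B quoted above: C·dT/dt = A·(βs·Φ − B·(T − Tamb)). Its solution rises exponentially towards the limiting temperature Tamb + βs·Φ/B with time constant τ = C/(A·B), and cooling after the irradiation stops decays with the same τ. The plate data (aluminium, 10 × 10 × 2 cm, 1040 W/m²) echo the experiment described earlier; βs = 0.5 and the ambient 20 °C are hypothetical.

```python
import math


def plate_temperature(t_s, beta_s, phi_w_m2, b_w_m2k, area_m2, heat_capacity_j_k, t_amb_c):
    """Plate temperature (°C) t_s seconds after irradiation starts.

    Solves C * dT/dt = A * (beta_s * Phi - B * (T - T_amb)) with T(0) = T_amb:
    T(t) = T_amb + (beta_s * Phi / B) * (1 - exp(-t / tau)), tau = C / (A * B).
    """
    excess_limit = beta_s * phi_w_m2 / b_w_m2k      # limiting rise above ambient, K
    tau = heat_capacity_j_k / (area_m2 * b_w_m2k)   # time constant, s
    return t_amb_c + excess_limit * (1.0 - math.exp(-t_s / tau))


def cooling_temperature(t_s, t_start_c, b_w_m2k, area_m2, heat_capacity_j_k, t_amb_c):
    """Colour-independent cooling once irradiation stops: exponential decay to T_amb."""
    tau = heat_capacity_j_k / (area_m2 * b_w_m2k)
    return t_amb_c + (t_start_c - t_amb_c) * math.exp(-t_s / tau)


# Aluminium plate (C of about 484 J/K, 0.01 m^2 face), B = 9 W·m^-2·K^-1, Phi = 1040 W/m^2;
# beta_s = 0.5 and the ambient 20 °C are hypothetical.
C_PLATE = 484.38
for minutes in (0, 30, 60, 120):
    t = plate_temperature(60.0 * minutes, 0.5, 1040.0, 9.0, 0.01, C_PLATE, 20.0)
    print(minutes, round(t, 1))
```

In this form the limiting temperature depends on the colour through βs, while the time constant (about 90 minutes here) and the cooling curve do not, which mirrors the separation of colour-dependent warming and colour-independent cooling described in the text.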

Finally, the theoretically predicted dependence of the atmospheric thermal radiation intensity on the atmospheric pressure was verified empirically by measurements at different altitudes, namely in Glattbrugg (430 m above sea level) and on the top of the Furka Pass (2430 m above sea level), both in Switzerland, yielding a so-called atmospheric emission constant A ≈ 22 W·m⁻²·bar⁻¹·K⁻⁰·⁵. This explained the altitude paradox of the atmospheric temperature and delivered the definitive evidence that the behaviour of the atmosphere, and thus the climate, does not depend on trace gases such as CO2. The atmosphere does indeed reradiate heat, producing something similar to a greenhouse effect. But this effect is due solely to the atmospheric pressure.
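The units of A (W·m⁻²·bar⁻¹·K⁻⁰·⁵) imply an emission intensity of the form Φatm = A·p·√T, consistent with the dependence on pressure and on the square root of the absolute temperature stated above. The Python sketch below simply evaluates that form; how the particle-size dependence and the full equations enter the author’s treatment is not given in this excerpt, and the common temperature of 288 K is a hypothetical choice.

```python
import math

A_ATM = 22.0  # atmospheric emission constant quoted in the text, W·m^-2·bar^-1·K^-0.5


def atmospheric_emission(p_bar, t_k, a=A_ATM):
    """Emission intensity implied by the units of A: Phi = A * p * sqrt(T), in W/m^2."""
    return a * p_bar * math.sqrt(t_k)


# Pressures quoted for the two stations, evaluated at the same hypothetical 288 K
phi_glattbrugg = atmospheric_emission(0.948, 288.0)  # roughly 354 W/m^2
phi_furka = atmospheric_emission(0.748, 288.0)       # roughly 279 W/m^2
print(round(phi_glattbrugg, 1), round(phi_furka, 1), round(phi_furka / phi_glattbrugg, 3))
```

At equal temperature the ratio of the two intensities is just the pressure ratio (≈ 0.79), so in this form the higher station receives roughly a fifth less atmospheric thermal radiation.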

Therefore, and also considering the results of Seim and Olsen [23], the customary greenhouse doctrine, which regards CO2 as the culprit in climate change, has to be abandoned and replaced by the concept recommended here of improving the albedo by brightening parts of the Earth’s surface, particularly in cities, if fatal consequences are not to be risked.

Figure 24. Up-winds induced by an urban heat island.

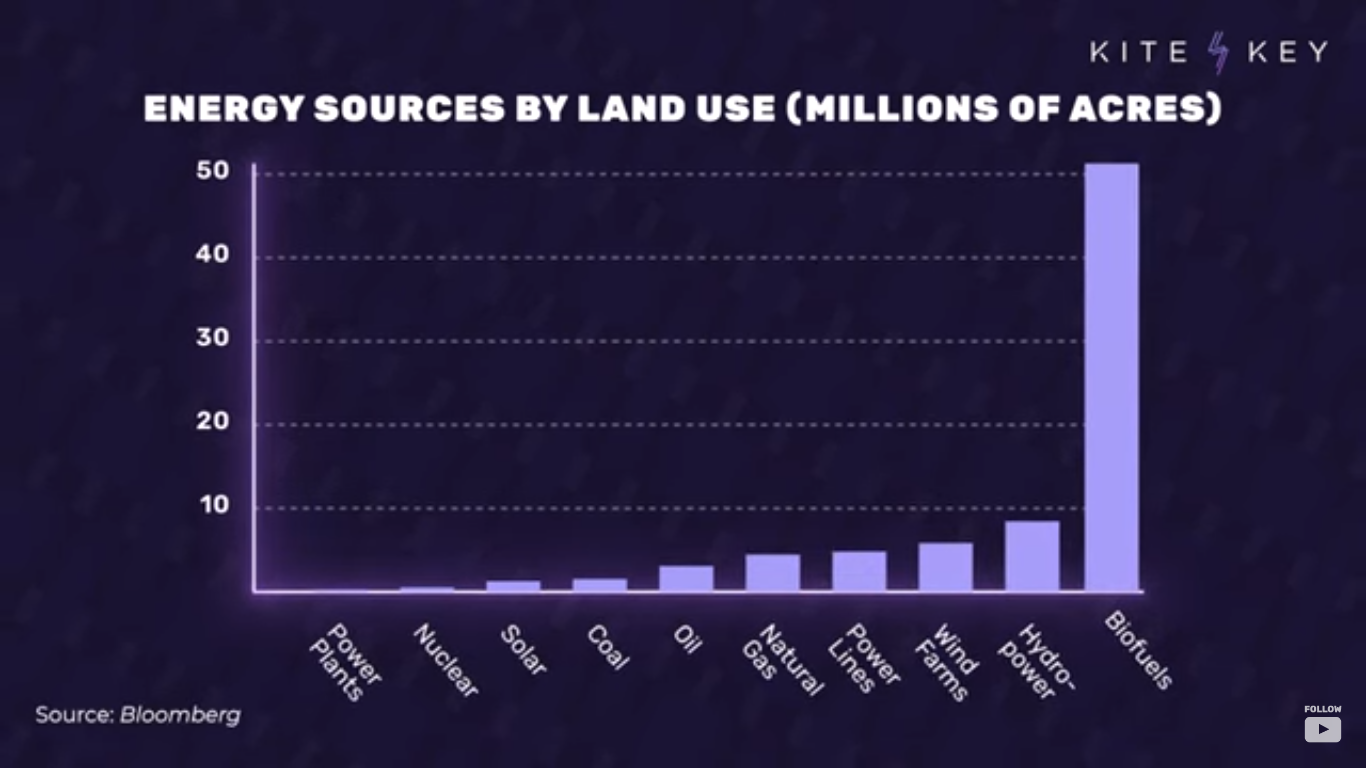

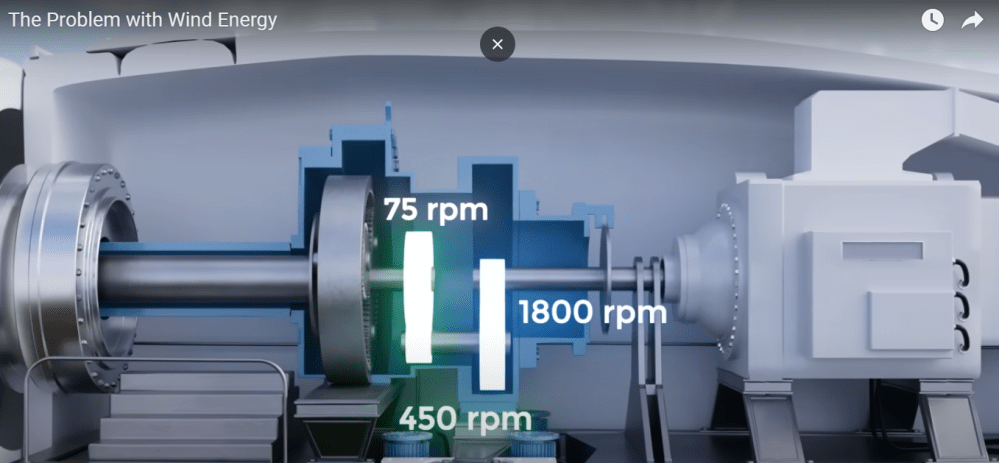

Apparently, diesel-fuelled generators are being used to power some wind turbines as a way of de-icing them in cold weather, that is, to keep them rotating. Also, it appears that the wind turbines have been drawing electric power directly from the grid instead of supplying it to the grid.

However, wind turbine rotors have to be shut down if the wind becomes too strong and/or rapidly changes in strength. They are shut down when too much ice forms or when there is insufficient wind. And now we have learned in Scotland they just turn on the diesel generators when that happens or they draw power directly from the grid.

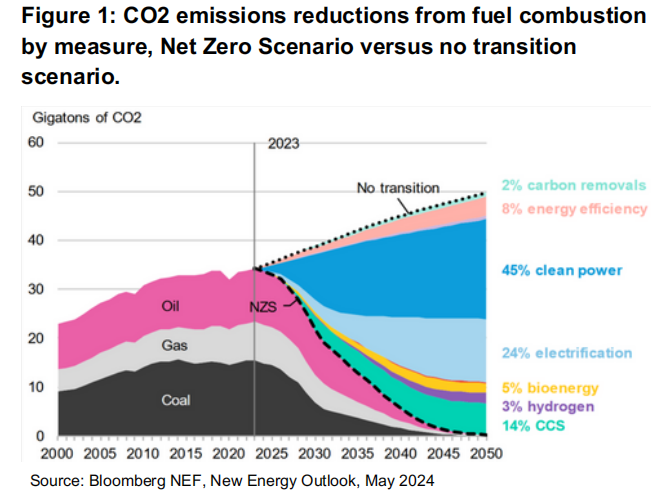

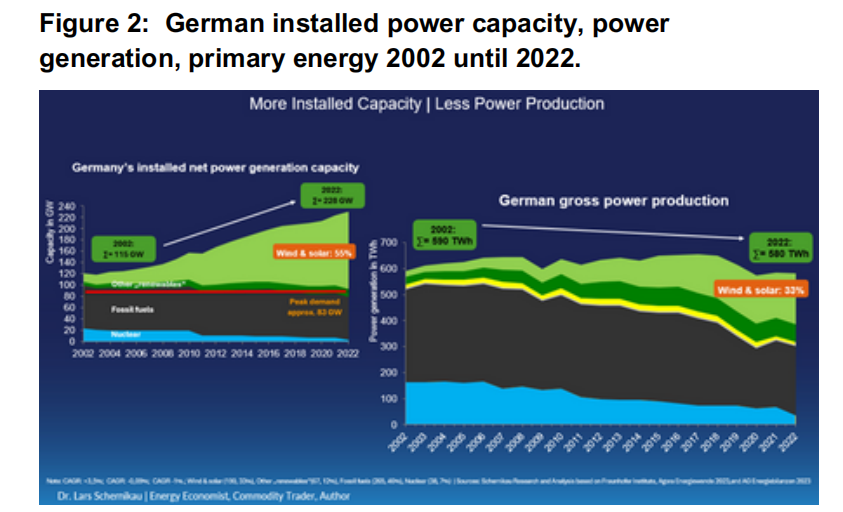

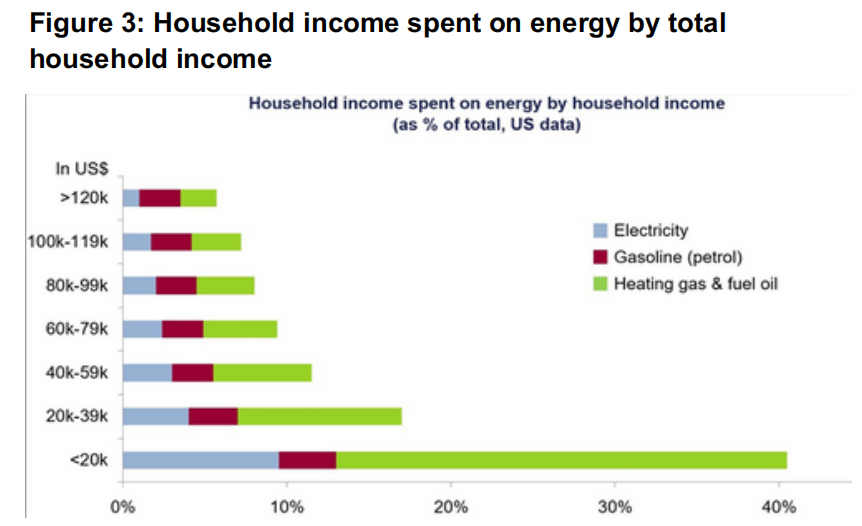

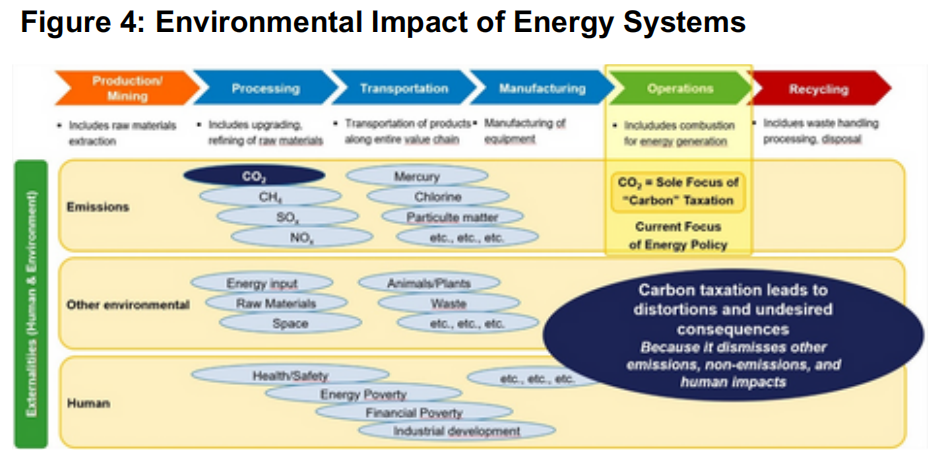

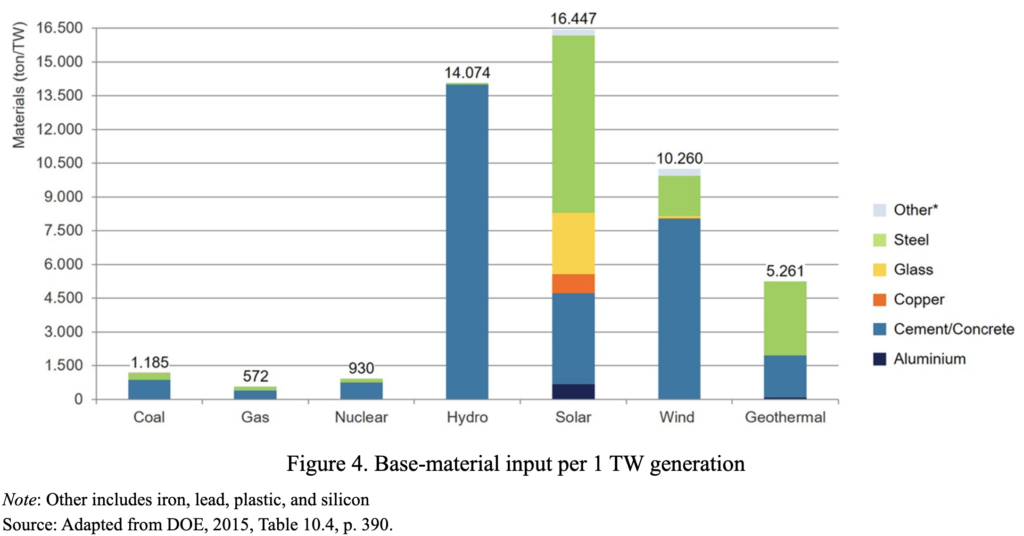

Dr. Lars Schernikau is an energy economist explaining why CO2 pricing (also falsely called “carbon pricing”) is a terrible idea fit only for discarding. His blog article is

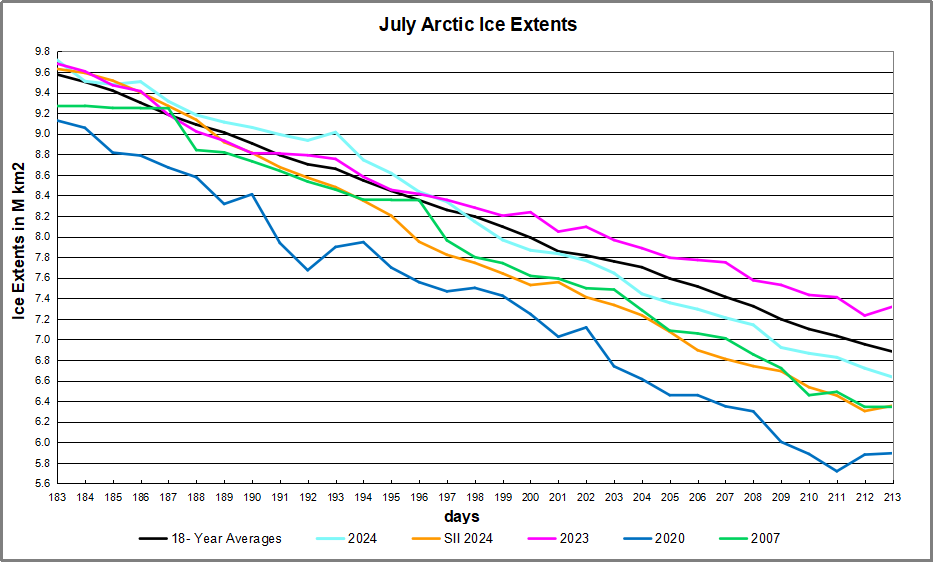

The table below shows the distribution of Sea Ice on day 213 across the Arctic Regions, on average, this year and 2007. At this point in the year, Bering and Okhotsk seas are open water and thus dropped from the table.