Bureaucrats Against Democracy

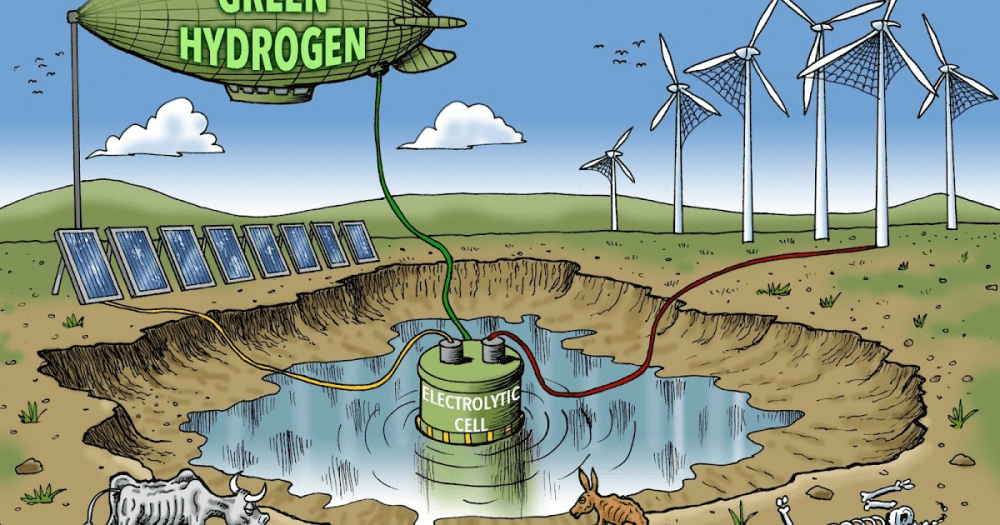

David Blackmon provides the background in his Daily Caller article Bureaucrats Worry Democracy Will Get In The Way Of Their Climate Agenda. As the above image suggests, some of those in power have not shied away from acting in defiance of democratic norms. By imposing climate policies and regulations, they have diminished the livelihoods and freedoms of the public they supposedly serve. Excerpts in italics with my bolds and added images.

I have frequently written over the last several years that the agenda of the climate-alarm lobby in the western world is not consistent with the maintenance of democratic forms of government.

Governments maintained by free elections, the free flow of communications and other democratic institutions are not able to engage in the kinds of long-term central planning exercises required to force a transition from one form of energy and transportation systems to completely different ones.

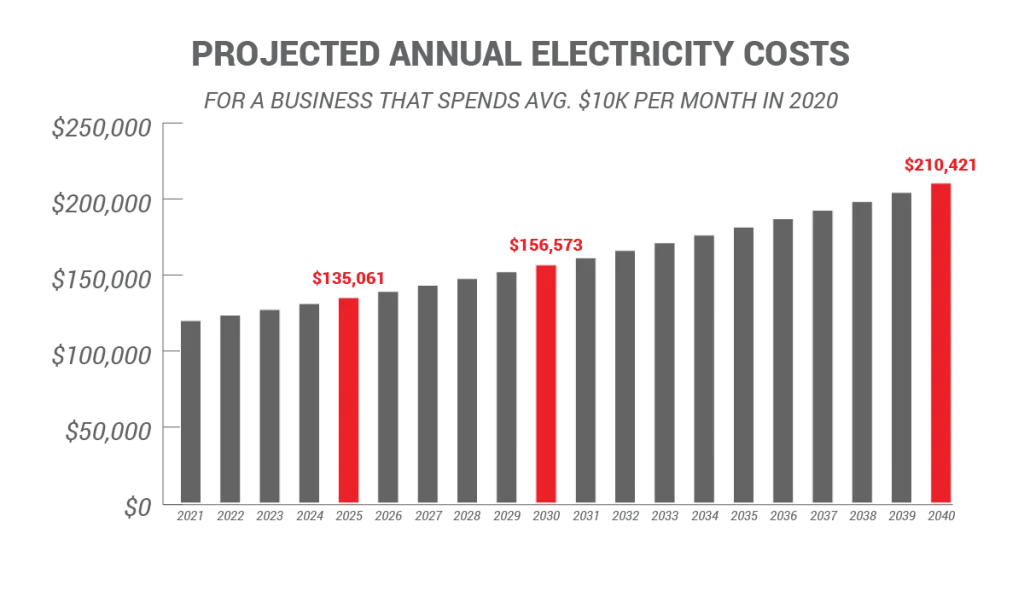

Why? Because once the negative impacts of vastly higher prices for all forms of energy begin to impact the masses, the masses in such democratic societies are going to rebel, first at the ballot box and if that is not allowed by the elites to work, then by more aggressive means.

This is not a problem for authoritarian or totalitarian forms of government, like those in Saudi Arabia, China and Russia, where long-term central planning projects invoking government control of the means of production is a long-ingrained way of life. If the people revolt, then the crackdowns are bound to come.

This societal dynamic is a simple reality of life that the pushers of the climate alarm narrative and forced energy transition in western societies have been loath to admit. But, in recent days, two key figures who have pushed the climate alarm narrative in both the United States and Canada have agreed with my thesis in public remarks.

In so doing, they are uttering the quiet part about the real agenda of climate alarmism out loud.

Last week, former Obama Secretary of State and Biden climate czar John Kerry made remarks about the “problem” posed by the First Amendment to the U.S. Constitution that should make every American’s skin crawl. Speaking about the inability of the federal government to stamp out what it believes to be misinformation on big social media platforms, Kerry said: “Our First Amendment stands as a major block to the ability to be able to just, you know, hammer it out of existence,” adding, “I think democracies are, are very challenged right now and have not proven they can move fast enough or big enough to deal with the challenges that we are facing.”

Never mind that the U.S. government has long been the most focused purveyor of disinformation and misinformation in our society, Kerry wants to stop the free flow of information on the Internet.

The most obvious targets are Elon Musk and X, which is essentially the only big social media platform that does not willingly submit to the government’s demands for censoring speech.

Kerry’s desired solution is for Democrats to “win the ground, win the right to govern by hopefully having, you know, winning enough votes that you’re free to be able to, to, implement change.” The change desired by Kerry and Vice President Kamala Harris and other prominent Democrats is to obtain enough power in Congress and the presidency to revoke the Senate filibuster, pack the Supreme Court, enact the economically ruinous Green New Deal, and do it all before the public has any opportunity to rebel.

Not to be outdone by Kerry, Deputy Prime Minister Chrystia Freeland of Canada, who is a longtime member of the board of trustees of the World Economic Forum, was quoted Monday as saying: “Our shrinking glaciers, and our warming oceans, are asking us wordlessly but emphatically, if democratic societies can rise to the existential challenge of climate change.”

It should come as no surprise to anyone that the central governments of both Canada and the United States have moved in increasingly authoritarian directions under their current leadership, both of which have used the climate-alarm narrative as justification. This move was widely predicted once the utility of the COVID-19 pandemic to rationalize government censorship and restrictions of individual liberties began to fade in 2021.

Frustrated by their perceived need to move even faster to restrict freedoms and destroy democratic levers of public response to their actions, these zealots are now discarding their soft talking points in favor of more aggressive messaging.

This new willingness to say the quiet part out loud should truly alarm anyone who values their freedoms.

Chris Morrison provides the analysis in his Daily Sceptic article


Steve Goreham explains in his Heartland article


Emmett Hare reports in City Journal
