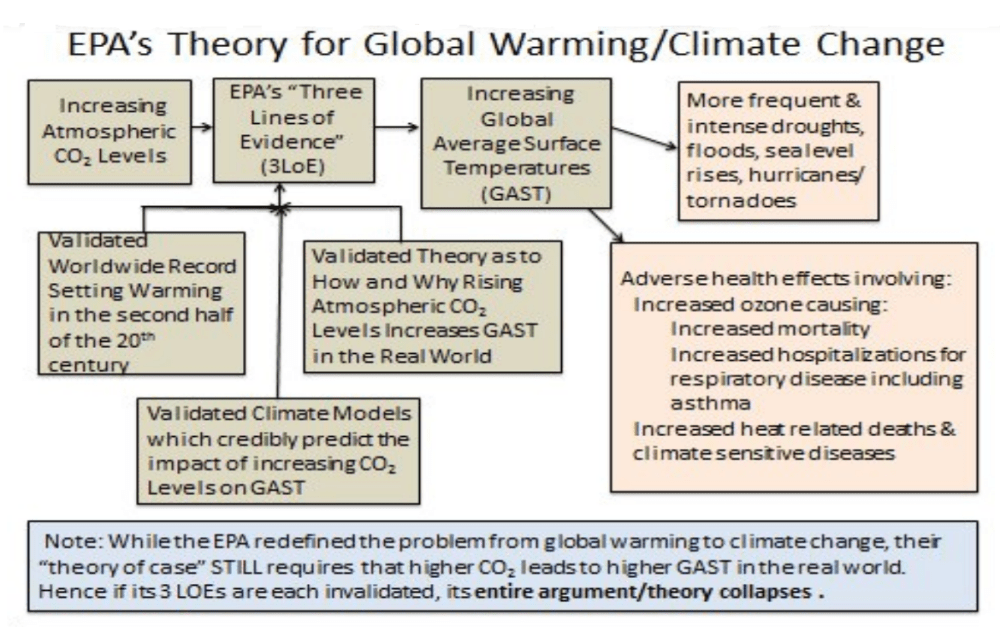

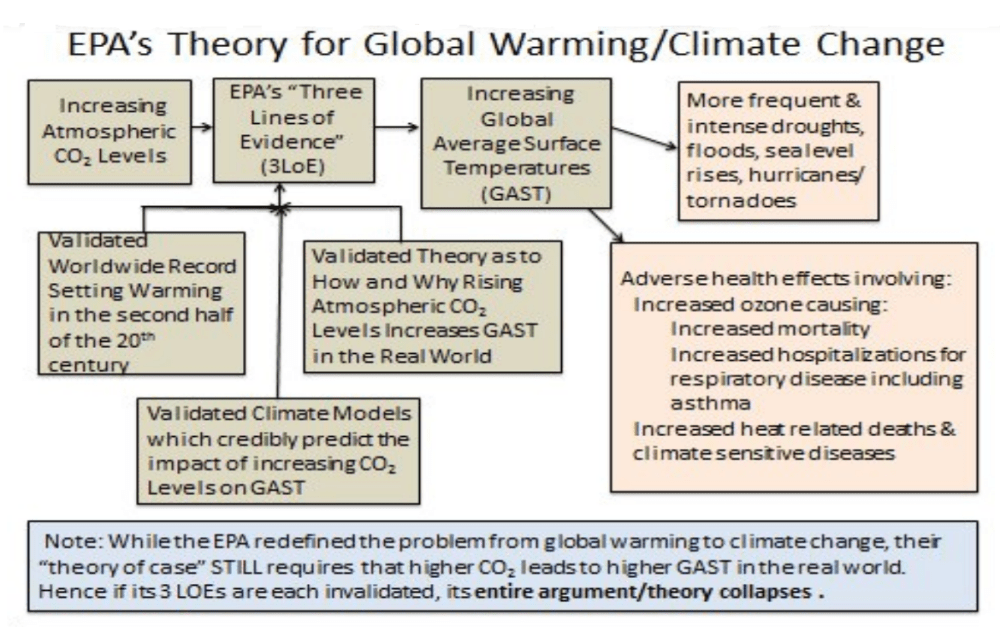

Now that Trump’s EPA is determined to reconsider its past GHG Endangerment Finding, it’s important to understand how we got here. First of all, there was the EPA’s theoretical basis for the finding:

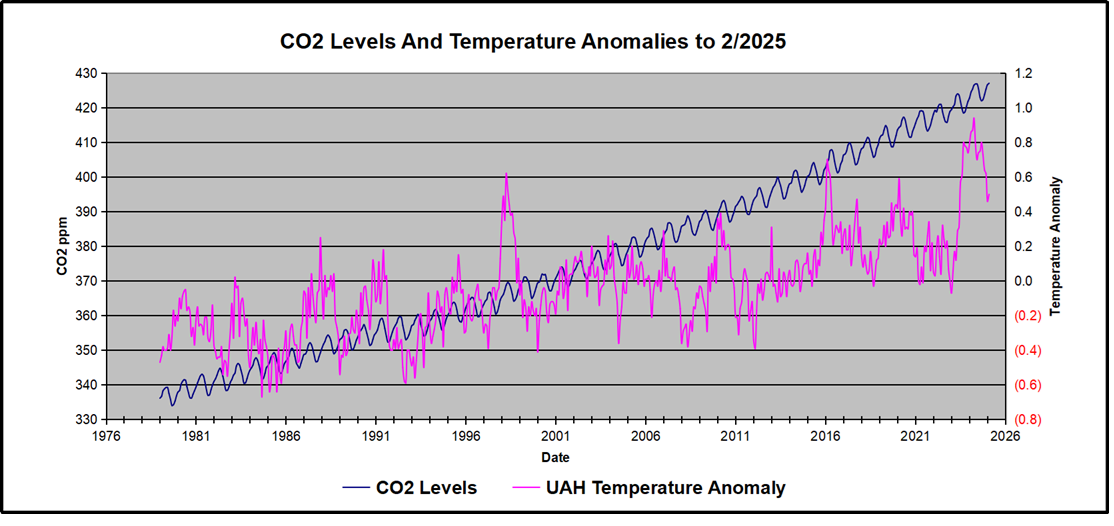

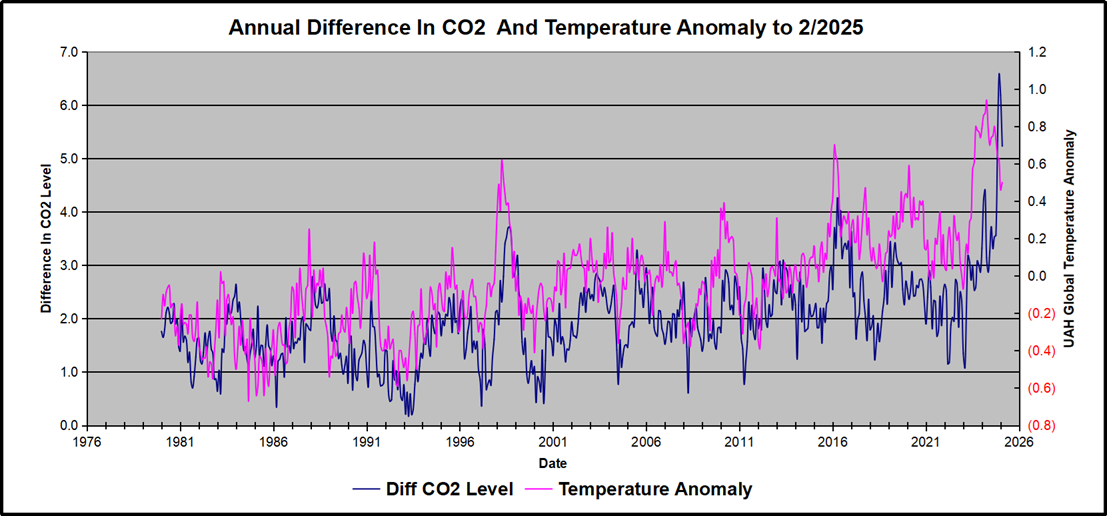

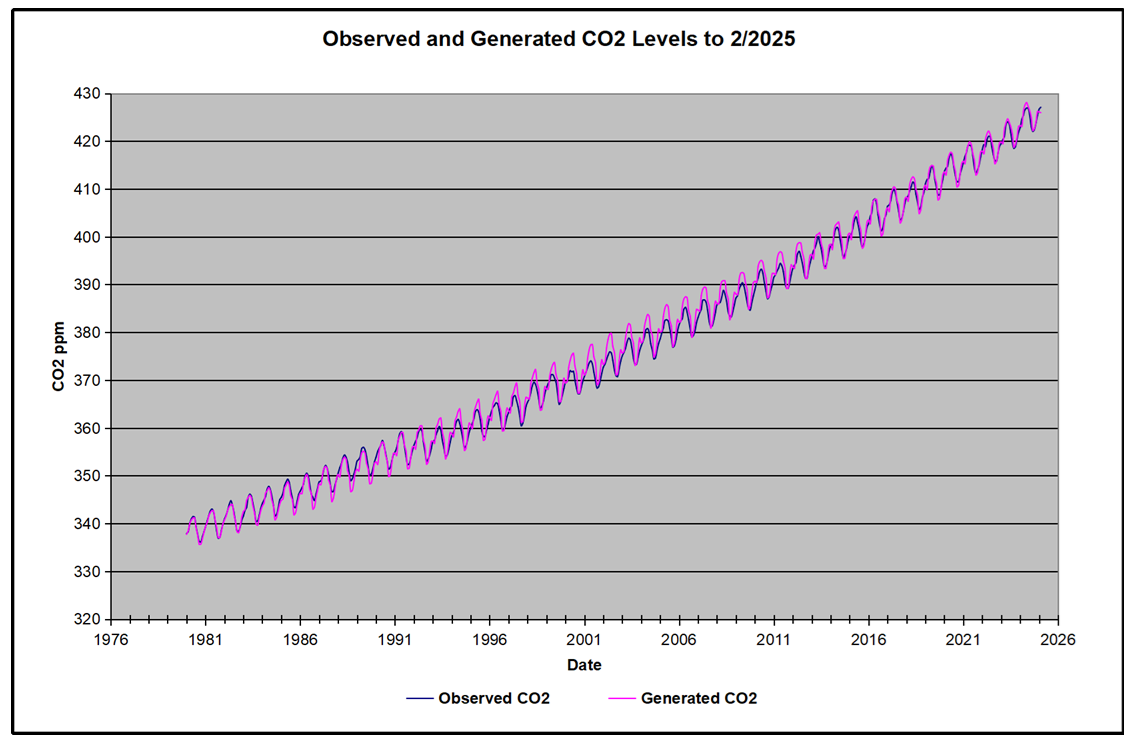

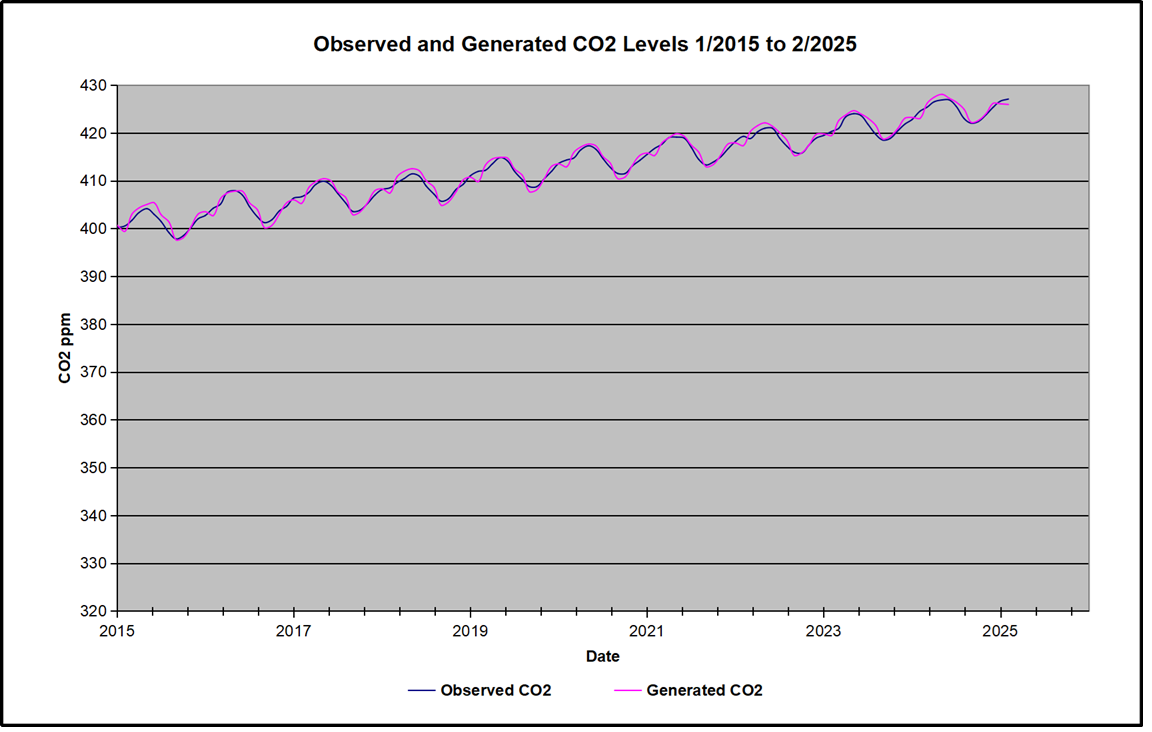

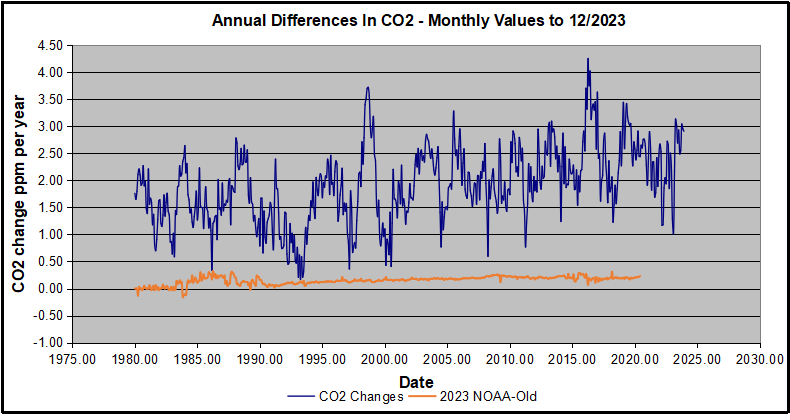

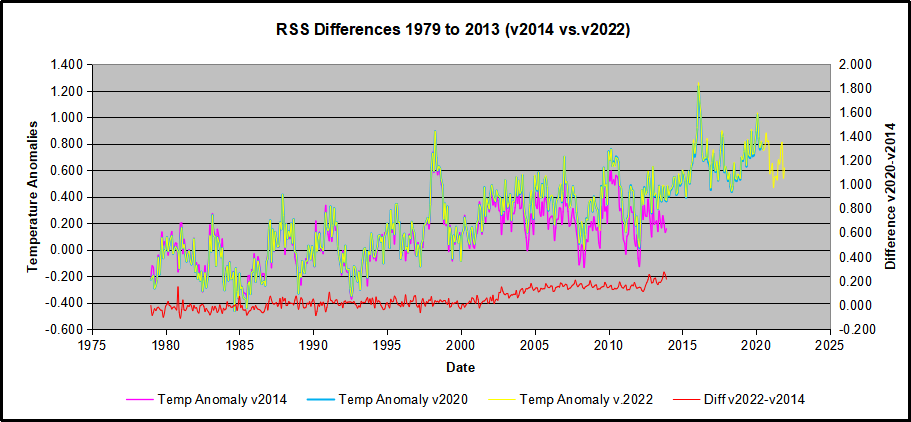

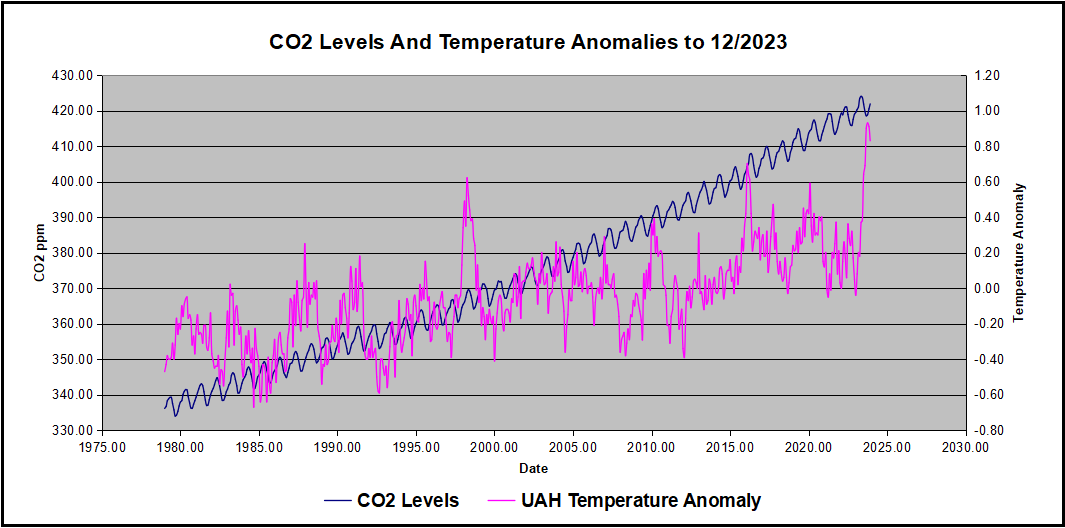

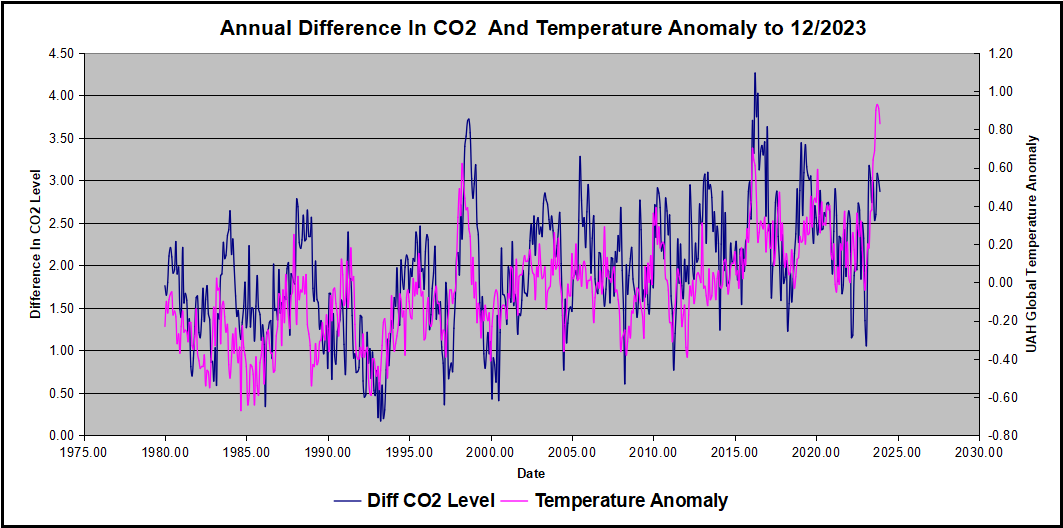

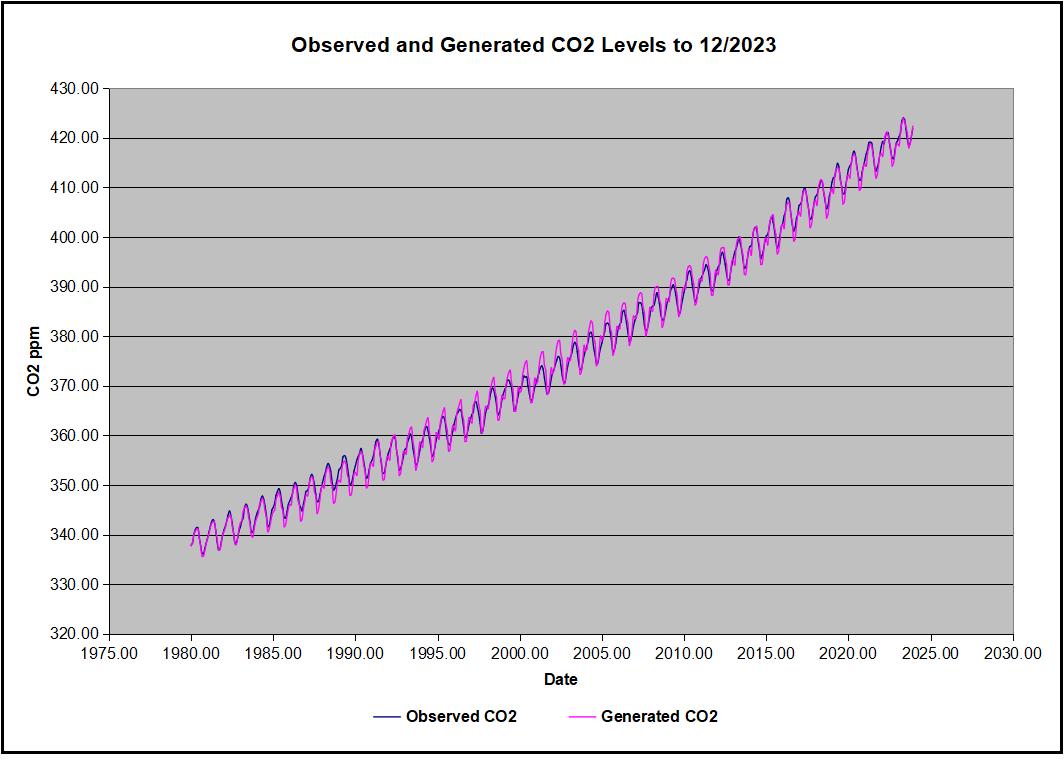

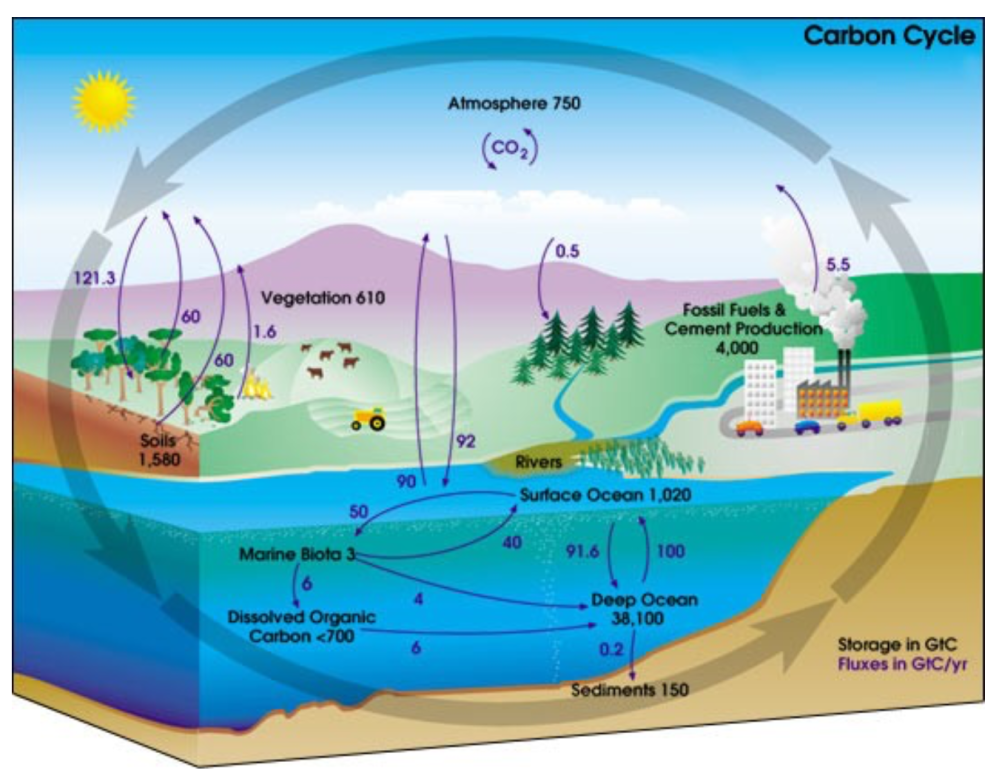

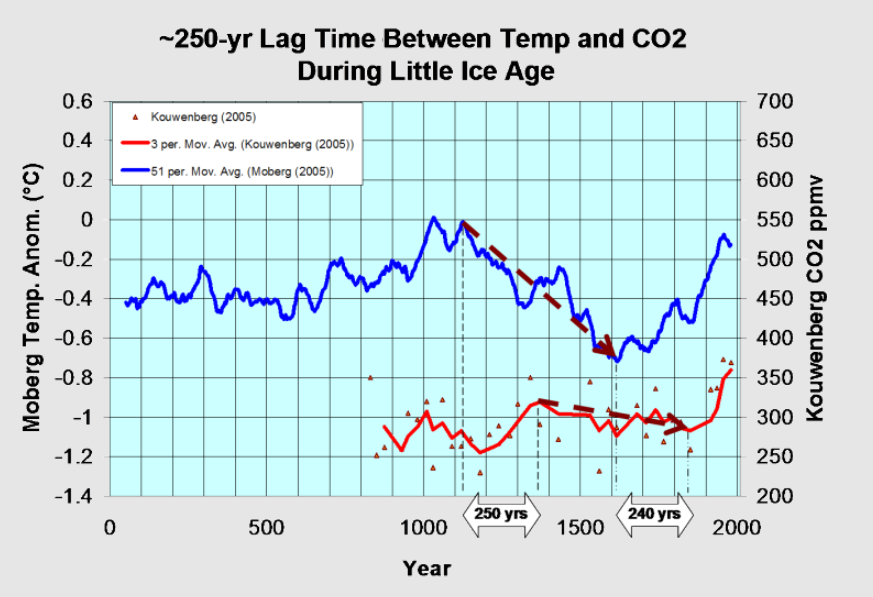

The 3 Lines of Evidence can all be challenged by scientific studies published since the 2009 ruling. The temperature records have been adjusted over time, and the validity of the measurements is uncertain. The issues with climate models give many reasons to regard them as unfit for policy making. And the claim that rising CO2 caused rising Global Average Surface Temperature (GAST) is dubious, both on grounds that CO2 infrared activity declines at higher concentrations, and that temperature changes precede CO2 changes on all time scales, from last month’s observations to ice core proxies spanning millennia.

Thus all the arrows claiming causal relations are flawed. The rise of atmospheric CO2 is mostly nature’s response to warming, rather than the other way around. And the earth warming since the Little Ice Age (LIA) is a welcome recovery from the coldest period in the last 10,000 years. Claims of extreme weather and rising sea levels ignore that such events are ordinary in earth history. And the health warnings are contrived in attributing them to barely noticeable warming temperatures.

Background on the Legal Precedents

This post was triggered by noticing an event some years ago. Serial valve turner Ken Ward was granted a new trial by the Washington State Court of Appeals, and he was allowed to present a “necessity defense.” This astonishingly bad ruling is reported approvingly by Kelsey Skaggs at Pacific Standard in “Why the Necessity Defense is Critical to the Climate Struggle.” Excerpt below with my bolds.

A climate activist who was convicted after turning off an oil pipeline won the right in April to argue in a new trial that his actions were justified. The Washington State Court of Appeals ruled that Ken Ward will be permitted to explain to a jury that, while he did illegally stop the flow of tar sands oil from Canada into the United States, his action was necessary to slow catastrophic climate change.

The Skaggs article goes on to cloak energy vandalism with the history of civil disobedience against actual mistreatment and harm. Nowhere is it recognized that the brouhaha over climate change concerns future imaginary harm. How could lawyers and judges get this so wrong? It can only happen when an erroneous legal precedent can be cited to spread a poison in the public square. So I went searching for the tree producing all of this poisonous fruit. The full text of the April 8, 2019, ruling is here.

A paper at Stanford Law School (where else?) provides a good history of the necessity defense as related to climate change activism: “The Climate Necessity Defense: Proof and Judicial Error in Climate Protest Cases.” Excerpts in italics with my bolds.

My perusal of the text led me to the section where the merits are presented.

The typical climate necessity argument is straightforward. The ongoing effects of climate change are not only imminent, they are currently occurring; civil disobedience has been proven to contribute to the mitigation of these harms, and our political and legal systems have proven uniquely ill-equipped to deal with the climate crisis, thus creating the necessity of breaking the law to address it. As opposed to many classic political necessity defendants, such as anti-nuclear power protesters, climate activists can point to the existing (rather than speculative) nature of the targeted harm and can make a more compelling case that their protest activity (for example, blocking fossil fuel extraction) actually prevents some quantum of harm produced by global warming. pg.78

What? On what evidence is such confidence based? Later on (page 80) comes this:

Second, courts’ focus on the politics of climate change distracts from the scientific issues involved in climate necessity cases. There may well be political disagreement over the realities and effects of climate change, but there is little scientific disagreement, as the Supreme Court has noted.131

131 Massachusetts v. E.P.A., 549 U.S. 497, 499 (2007) (“The harms associated with climate change are serious and well recognized . . . [T]he relevant science and a strong consensus among qualified experts indicate that global warming threatens, inter alia, a precipitate rise in sea levels by the end of the century, severe and irreversible changes to natural ecosystems, a significant reduction in water storage in winter snowpack in mountainous regions with direct and important economic consequences, and an increase in the spread of disease and the ferocity of weather events.”).

The roots of this poisonous tree are found in citing the famous Massachusetts v. E.P.A. (2007) case decided by a 5-4 opinion of Supreme Court justices (consensus rate: 56%). But let’s see the context of that reference, and whether it is a quotation from a source or an issue addressed by the court. The majority opinion was written by Justice Stevens, with dissenting opinions from Chief Justice Roberts and Justice Scalia. All these documents are available at supreme.justia.com: Massachusetts v. EPA, 549 U.S. 497 (2007)

From the Majority Opinion:

A well-documented rise in global temperatures has coincided with a significant increase in the concentration of carbon dioxide in the atmosphere. Respected scientists believe the two trends are related. For when carbon dioxide is released into the atmosphere, it acts like the ceiling of a greenhouse, trapping solar energy and retarding the escape of reflected heat. It is therefore a species—the most important species—of a “greenhouse gas.” Source: National Research Council:

National Research Council 2001 report titled Climate Change: An Analysis of Some Key Questions (NRC Report), which, drawing heavily on the 1995 IPCC report, concluded that “[g]reenhouse gases are accumulating in Earth’s atmosphere as a result of human activities, causing surface air temperatures and subsurface ocean temperatures to rise. Temperatures are, in fact, rising.” NRC Report 1.

Calling global warming “the most pressing environmental challenge of our time,”[Footnote 1] a group of States,[Footnote 2] local governments,[Footnote 3] and private organizations,[Footnote 4] alleged in a petition for certiorari that the Environmental Protection Agency (EPA) has abdicated its responsibility under the Clean Air Act to regulate the emissions of four greenhouse gases, including carbon dioxide. Specifically, petitioners asked us to answer two questions concerning the meaning of §202(a)(1) of the Act: whether EPA has the statutory authority to regulate greenhouse gas emissions from new motor vehicles; and if so, whether its stated reasons for refusing to do so are consistent with the statute.

EPA reasoned that climate change had its own “political history”: Congress designed the original Clean Air Act to address local air pollutants rather than a substance that “is fairly consistent in its concentration throughout the world’s atmosphere,” 68 Fed. Reg. 52927 (emphasis added); declined in 1990 to enact proposed amendments to force EPA to set carbon dioxide emission standards for motor vehicles, ibid. (citing H. R. 5966, 101st Cong., 2d Sess. (1990)); and addressed global climate change in other legislation, 68 Fed. Reg. 52927. Because of this political history, and because imposing emission limitations on greenhouse gases would have even greater economic and political repercussions than regulating tobacco, EPA was persuaded that it lacked the power to do so. Id., at 52928. In essence, EPA concluded that climate change was so important that unless Congress spoke with exacting specificity, it could not have meant the agency to address it.

Having reached that conclusion, EPA believed it followed that greenhouse gases cannot be “air pollutants” within the meaning of the Act. See ibid. (“It follows from this conclusion, that [greenhouse gases], as such, are not air pollutants under the [Clean Air Act’s] regulatory provisions …”).

Even assuming that it had authority over greenhouse gases, EPA explained in detail why it would refuse to exercise that authority. The agency began by recognizing that the concentration of greenhouse gases has dramatically increased as a result of human activities, and acknowledged the attendant increase in global surface air temperatures. Id., at 52930. EPA nevertheless gave controlling importance to the NRC Report’s statement that a causal link between the two “ ‘cannot be unequivocally established.’ ” Ibid. (quoting NRC Report 17). Given that residual uncertainty, EPA concluded that regulating greenhouse gas emissions would be unwise. 68 Fed. Reg. 52930.

The harms associated with climate change are serious and well recognized. Indeed, the NRC Report itself—which EPA regards as an “objective and independent assessment of the relevant science,” 68 Fed. Reg. 52930—identifies a number of environmental changes that have already inflicted significant harms, including “the global retreat of mountain glaciers, reduction in snow-cover extent, the earlier spring melting of rivers and lakes, [and] the accelerated rate of rise of sea levels during the 20th century relative to the past few thousand years … .” NRC Report 16.

In sum—at least according to petitioners’ uncontested affidavits—the rise in sea levels associated with global warming has already harmed and will continue to harm Massachusetts. The risk of catastrophic harm, though remote, is nevertheless real. That risk would be reduced to some extent if petitioners received the relief they seek. We therefore hold that petitioners have standing to challenge the EPA’s denial of their rulemaking petition.[Footnote 24]

In short, EPA has offered no reasoned explanation for its refusal to decide whether greenhouse gases cause or contribute to climate change. Its action was therefore “arbitrary, capricious, … or otherwise not in accordance with law.” 42 U. S. C. §7607(d)(9)(A). We need not and do not reach the question whether on remand EPA must make an endangerment finding, or whether policy concerns can inform EPA’s actions in the event that it makes such a finding. Cf. Chevron U. S. A. Inc. v. Natural Resources Defense Council, Inc., 467 U. S. 837, 843–844 (1984). We hold only that EPA must ground its reasons for action or inaction in the statute.

My Comment: Note that the citations of scientific proof were uncontested assertions by petitioners. Note also that the majority did not rule that EPA must make an endangerment finding: “We hold only that EPA must ground its reasons for action or inaction in the statute.”

From Chief Justice Roberts’s Dissenting Opinion

It is not at all clear how the Court’s “special solicitude” for Massachusetts plays out in the standing analysis, except as an implicit concession that petitioners cannot establish standing on traditional terms. But the status of Massachusetts as a State cannot compensate for petitioners’ failure to demonstrate injury in fact, causation, and redressability.

When the Court actually applies the three-part test, it focuses, as did the dissent below, see 415 F. 3d 50, 64 (CADC 2005) (opinion of Tatel, J.), on the State’s asserted loss of coastal land as the injury in fact. If petitioners rely on loss of land as the Article III injury, however, they must ground the rest of the standing analysis in that specific injury. That alleged injury must be “concrete and particularized,” Defenders of Wildlife, 504 U. S., at 560, and “distinct and palpable,” Allen, 468 U. S., at 751 (internal quotation marks omitted). Central to this concept of “particularized” injury is the requirement that a plaintiff be affected in a “personal and individual way,” Defenders of Wildlife, 504 U. S., at 560, n. 1, and seek relief that “directly and tangibly benefits him” in a manner distinct from its impact on “the public at large,” id., at 573–574. Without “particularized injury, there can be no confidence of ‘a real need to exercise the power of judicial review’ or that relief can be framed ‘no broader than required by the precise facts to which the court’s ruling would be applied.’ ” Warth v. Seldin, 422 U. S. 490, 508 (1975) (quoting Schlesinger v. Reservists Comm. to Stop the War, 418 U. S. 208, 221–222 (1974)).

The very concept of global warming seems inconsistent with this particularization requirement. Global warming is a phenomenon “harmful to humanity at large,” 415 F. 3d, at 60 (Sentelle, J., dissenting in part and concurring in judgment), and the redress petitioners seek is focused no more on them than on the public generally—it is literally to change the atmosphere around the world.

If petitioners’ particularized injury is loss of coastal land, it is also that injury that must be “actual or imminent, not conjectural or hypothetical,” Defenders of Wildlife, supra, at 560 (internal quotation marks omitted), “real and immediate,” Los Angeles v. Lyons, 461 U. S. 95, 102 (1983) (internal quotation marks omitted), and “certainly impending,” Whitmore v. Arkansas, 495 U. S. 149, 158 (1990) (internal quotation marks omitted).

As to “actual” injury, the Court observes that “global sea levels rose somewhere between 10 and 20 centimeters over the 20th century as a result of global warming” and that “[t]hese rising seas have already begun to swallow Massachusetts’ coastal land.” Ante, at 19. But none of petitioners’ declarations supports that connection. One declaration states that “a rise in sea level due to climate change is occurring on the coast of Massachusetts, in the metropolitan Boston area,” but there is no elaboration. Petitioners’ Standing Appendix in No. 03–1361, etc. (CADC), p. 196 (Stdg. App.). And the declarant goes on to identify a “significan[t]” non-global-warming cause of Boston’s rising sea level: land subsidence. Id., at 197; see also id., at 216. Thus, aside from a single conclusory statement, there is nothing in petitioners’ 43 standing declarations and accompanying exhibits to support an inference of actual loss of Massachusetts coastal land from 20th century global sea level increases. It is pure conjecture.

The Court ignores the complexities of global warming, and does so by now disregarding the “particularized” injury it relied on in step one, and using the dire nature of global warming itself as a bootstrap for finding causation and redressability.

Petitioners are never able to trace their alleged injuries back through this complex web to the fractional amount of global emissions that might have been limited with EPA standards. In light of the bit-part domestic new motor vehicle greenhouse gas emissions have played in what petitioners describe as a 150-year global phenomenon, and the myriad additional factors bearing on petitioners’ alleged injury—the loss of Massachusetts coastal land—the connection is far too speculative to establish causation.

From Justice Scalia’s Dissenting Opinion

Even on the Court’s own terms, however, the same conclusion follows. As mentioned above, the Court gives EPA the option of determining that the science is too uncertain to allow it to form a “judgment” as to whether greenhouse gases endanger public welfare. Attached to this option (on what basis is unclear) is an essay requirement: “If,” the Court says, “the scientific uncertainty is so profound that it precludes EPA from making a reasoned judgment as to whether greenhouse gases contribute to global warming, EPA must say so.” Ante, at 31. But EPA has said precisely that—and at great length, based on information contained in a 2001 report by the National Research Council (NRC) entitled Climate Change Science:

“As the NRC noted in its report, concentrations of [greenhouse gases (GHGs)] are increasing in the atmosphere as a result of human activities (pp. 9–12). It also noted that ‘[a] diverse array of evidence points to a warming of global surface air temperatures’ (p. 16). The report goes on to state, however, that ‘[b]ecause of the large and still uncertain level of natural variability inherent in the climate record and the uncertainties in the time histories of the various forcing agents (and particularly aerosols), a [causal] linkage between the buildup of greenhouse gases in the atmosphere and the observed climate changes during the 20th century cannot be unequivocally established. The fact that the magnitude of the observed warming is large in comparison to natural variability as simulated in climate models is suggestive of such a linkage, but it does not constitute proof of one because the model simulations could be deficient in natural variability on the decadal to century time scale’ (p. 17).

“The NRC also observed that ‘there is considerable uncertainty in current understanding of how the climate system varies naturally and reacts to emissions of [GHGs] and aerosols’ (p. 1). As a result of that uncertainty, the NRC cautioned that ‘current estimate of the magnitude of future warming should be regarded as tentative and subject to future adjustments (either upward or downward).’ Id. It further advised that ‘[r]educing the wide range of uncertainty inherent in current model predictions of global climate change will require major advances in understanding and modeling of both (1) the factors that determine atmospheric concentrations of [GHGs] and aerosols and (2) the so-called “feedbacks” that determine the sensitivity of the climate system to a prescribed increase in [GHGs].’ Id.

“The science of climate change is extraordinarily complex and still evolving. Although there have been substantial advances in climate change science, there continue to be important uncertainties in our understanding of the factors that may affect future climate change and how it should be addressed. As the NRC explained, predicting future climate change necessarily involves a complex web of economic and physical factors including: Our ability to predict future global anthropogenic emissions of GHGs and aerosols; the fate of these emissions once they enter the atmosphere (e.g., what percentage are absorbed by vegetation or are taken up by the oceans); the impact of those emissions that remain in the atmosphere on the radiative properties of the atmosphere; changes in critically important climate feedbacks (e.g., changes in cloud cover and ocean circulation); changes in temperature characteristics (e.g., average temperatures, shifts in daytime and evening temperatures); changes in other climatic parameters (e.g., shifts in precipitation, storms); and ultimately the impact of such changes on human health and welfare (e.g., increases or decreases in agricultural productivity, human health impacts). The NRC noted, in particular, that ‘[t]he understanding of the relationships between weather/climate and human health is in its infancy and therefore the health consequences of climate change are poorly understood’ (p. 20). Substantial scientific uncertainties limit our ability to assess each of these factors and to separate out those changes resulting from natural variability from those that are directly the result of increases in anthropogenic GHGs.

“Reducing the wide range of uncertainty inherent in current model predictions will require major advances in understanding and modeling of the factors that determine atmospheric concentrations of greenhouse gases and aerosols, and the processes that determine the sensitivity of the climate system.” 68 Fed. Reg. 52930.

I simply cannot conceive of what else the Court would like EPA to say.

Conclusion

Justice Scalia laid the axe to the roots of this poisonous tree. Even the scientific source document relied on by the majority admits that claims of man-made warming are conjecture without certain evidence. This case does not prove catastrophic anthropogenic global warming (CAGW), despite being repeatedly cited as though it did.

2025 The Legal Landscape Has Shifted For EPA

But much has changed in the legal landscape in recent years that will give opponents of EPA Administrator Lee Zeldin’s effort an uphill battle to fight. First is the changed make-up of the Supreme Court. When the Massachusetts v. EPA case was decided in 2007, the Court was evenly divided, consisting of four conservatives, four liberals, and Anthony Kennedy, a moderate who served as the Court’s “swing vote” in many major decisions. Kennedy was the deciding vote in that case, siding with the four liberal justices.

But conservatives hold an overwhelming 6-3 majority on today’s Supreme Court. While Chief Justice John Roberts and Associate Justice Amy Coney Barrett have occasionally sided with the Court’s three liberal justices in a handful of decisions, there is little reason to think that would happen in a reconsideration of the Massachusetts v. EPA case. That seems especially true for Justice Roberts, who wrote the dissenting opinion in the 2007 decision.

The Supreme Court’s 2024 decision in the Loper Bright Enterprises v. Raimondo case could present another major challenge for Zeldin’s opponents to overcome. In a 6-3 decision in that case, the Court reversed the longstanding Chevron Deference legal doctrine.

As I wrote at the time, [w]hen established in 1984 in a unanimous, 6-0 decision written by Justice John Paul Stevens, Chevron instructed federal courts to defer to the judgment of legal counsel for the regulatory agencies when such regulations were challenged via litigation. Since that time, agencies focused on extending their authority well outside the original intents of the governing statutes have relied on the doctrine to ensure they will not be overturned.

The existence of the Chevron deference has worked to ensure the judicial branch of government has also been largely unable to act decisively to review and overrule elements of the Biden agenda whenever the EPA, Bureau of Land Management or other agencies impose regulations that may lie outside the scope and intent of the governing statutes. In effect, this doctrine has served as a key enabler of the massive growth of what has come to be known as the US administrative state.

The question now becomes whether the current Supreme Court with its strong conservative majority will uphold its reasoning in Massachusetts v. EPA in the absence of the Chevron Deference.

The Bottom Line For Zeldin And EPA

Opponents of the expansion of EPA air regulations by the Obama and Biden presidencies have long contended that the underpinnings for those actions – Massachusetts v. EPA and the 2009 endangerment finding – were a classic legal house of cards that would ultimately come falling down when the politics and makeup of the Supreme Court shifted.

Trump and Zeldin are betting that both factors are now in favor of these major actions at EPA. Only time, and an array of major court battles to come, will tell. [Source: David Blackmon at Forbes]

Footnote:

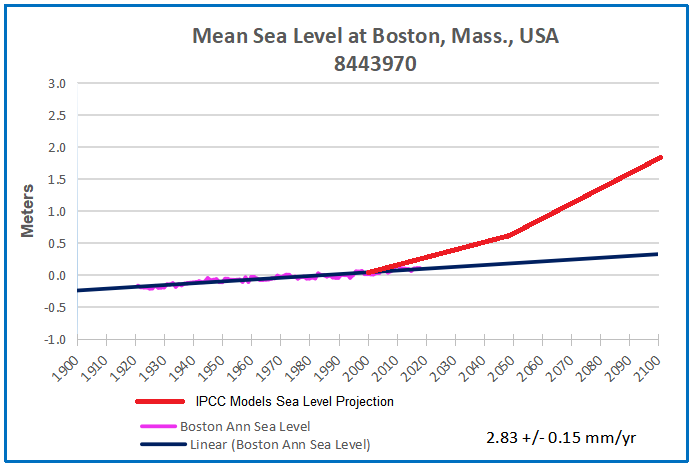

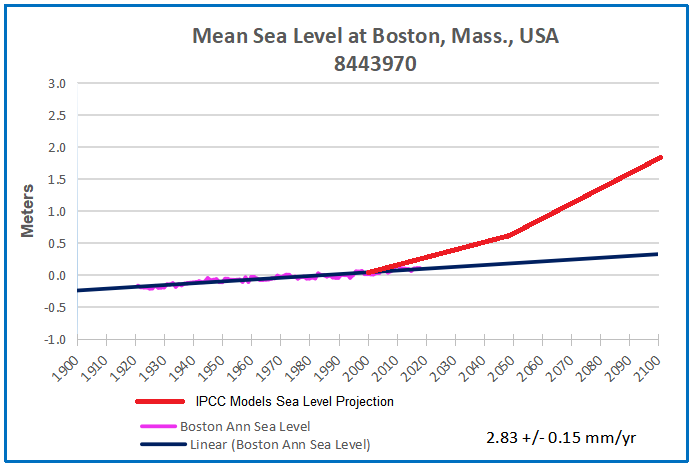

Taking the sea level rise projected by Sea Change Boston, and through the magic of CAI (Computer-Aided Imagining), we can compare to tidal gauge observations at Boston:

The Liberal party may have a new face, but Carney’s insistence on keeping an emissions cap and industrial carbon tax in place — both products of Justin Trudeau’s Liberal government — doesn’t invoke much confidence in his energy superpower plan.

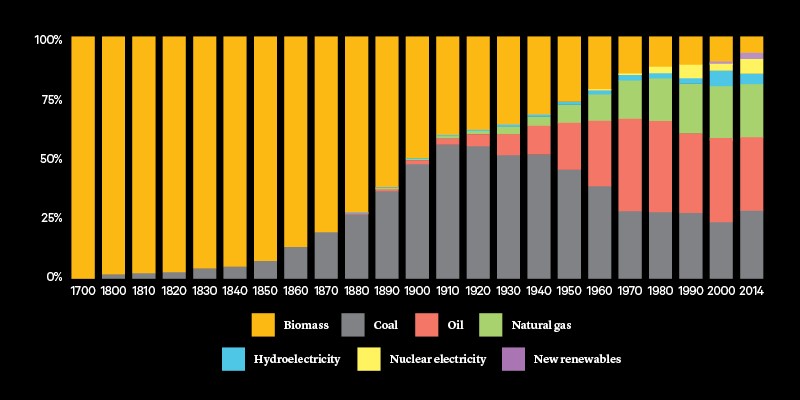

The history of America is, in many ways, the history of energy. The steam power that revolutionized travel and the shipping of goods. The coal that fueled the railroads and the industrial revolution. The petroleum that helped birth the age of the automobile. And now, if we only have the will, a new era of renewable energy.

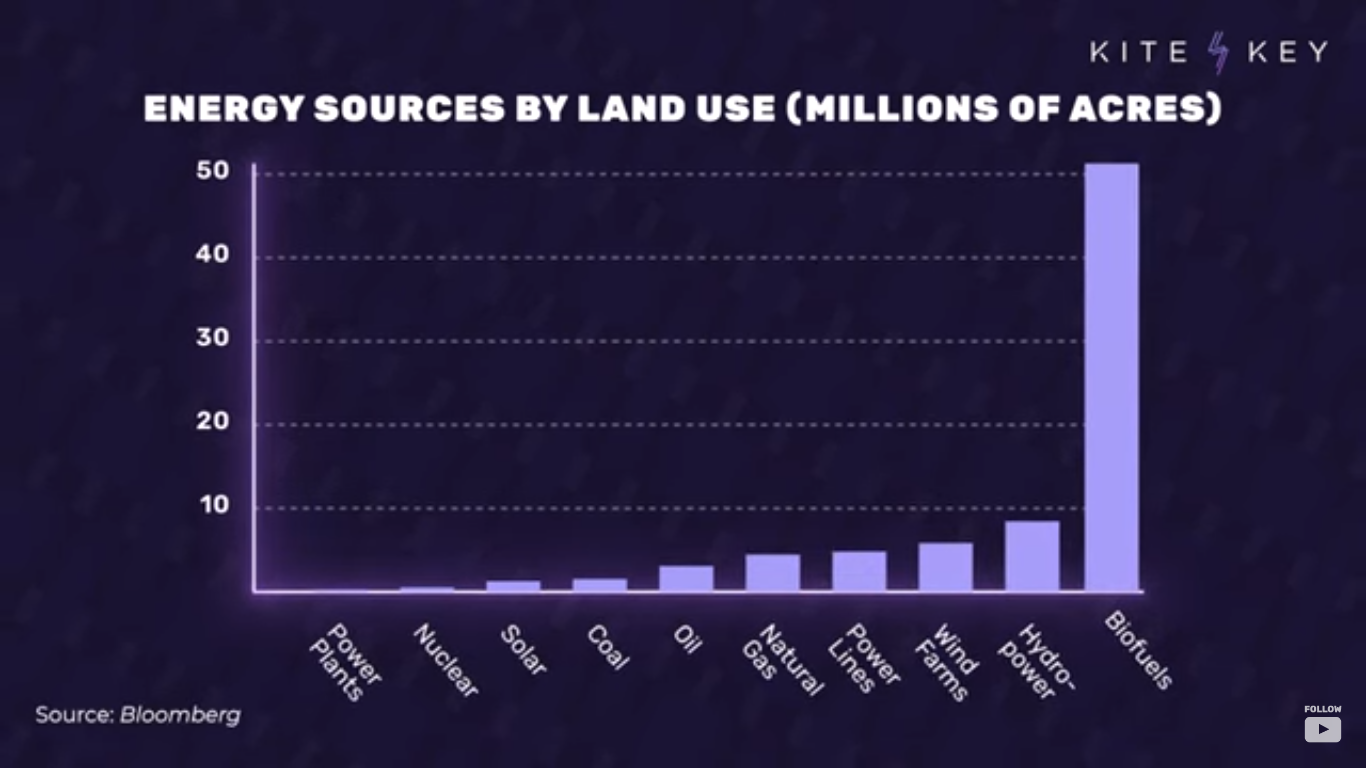

In fact, the government reports that switching over to natural gas did more than twice as much to cut carbon emissions as renewables did in recent years. Why did natural gas progress so much faster than renewables? It wasn’t an accident.

Energy is a little like money: You have to spend it to make it. To get usable natural gas, for example, you’ve first got to drill a well, process and transport the gas, build a power plant, and generate the electricity. But the question is how much energy are you getting back for your investment? With natural gas, you get about 30 times as much power out of the system as you put into creating it. By contrast, with something like solar power, you only get about 3 1/2 times as much power back.
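The comparison above can be made concrete with a little arithmetic. What follows is a minimal sketch, assuming EROI means energy delivered divided by energy invested, so that the share of gross output left after repaying the investment is 1 - 1/EROI. The function name `net_energy_fraction` is hypothetical, and the EROI figures are simply the ones quoted in the passage, not independent measurements.

```python
def net_energy_fraction(eroi: float) -> float:
    """Share of gross energy output left after repaying the energy invested.

    Assumes EROI = energy_out / energy_in, so the net share is 1 - 1/EROI.
    """
    if eroi <= 0:
        raise ValueError("EROI must be positive")
    return 1.0 - 1.0 / eroi


# EROI figures as quoted in the text above (illustrative only).
quoted_eroi = {"natural gas": 30.0, "solar": 3.5}

for source, eroi in quoted_eroi.items():
    share = net_energy_fraction(eroi)
    print(f"{source}: EROI {eroi:g} -> {share:.1%} of output is net energy")
```

On the quoted figures, roughly 97% of the output from natural gas is surplus, against roughly 71% for solar, under the stated definition.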

In human terms, with an EROI of 1, we can mine fuel and look at it—we have no energy left over. To get a society that can feed itself and provide a basic educational system we need an EROI of our base-load fuel to be in excess of 5, and for a society with international travel and high culture we need EROI greater than 10. The new renewable energies do not reach this last level when the extra energy costs of overcoming intermittency are added in. In energy terms the current generation of renewable energy technologies alone will not enable a civilized modern society to continue!
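The thresholds in that passage can be written down as a simple lookup. This is only a sketch of the passage’s own cut-offs (1, 5 and 10). The function name `society_supported` and the wording of the labels are hypothetical, and any EROI value passed in would first have to be adjusted for intermittency costs, which this sketch does not attempt.

```python
def society_supported(eroi: float) -> str:
    """Map a base-load EROI to the tier described in the passage.

    Cut-offs (1, 5, 10) are taken from the text; labels are paraphrases.
    """
    if eroi <= 0:
        raise ValueError("EROI must be positive")
    if eroi <= 1:
        return "no energy left over"
    if eroi <= 5:
        return "some surplus, below the basic-society threshold"
    if eroi <= 10:
        return "society that can feed itself and run basic education"
    return "society with international travel and high culture"


for value in (1, 3.5, 8, 30):
    print(f"EROI {value:g}: {society_supported(value)}")
```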

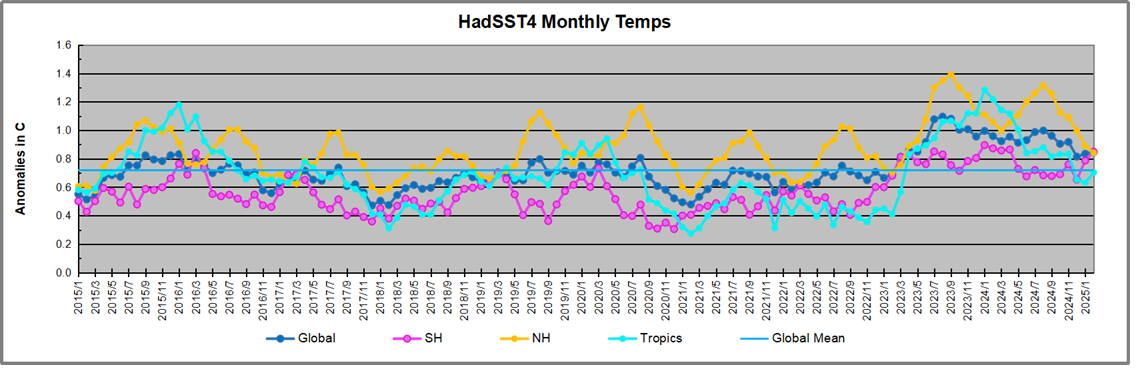

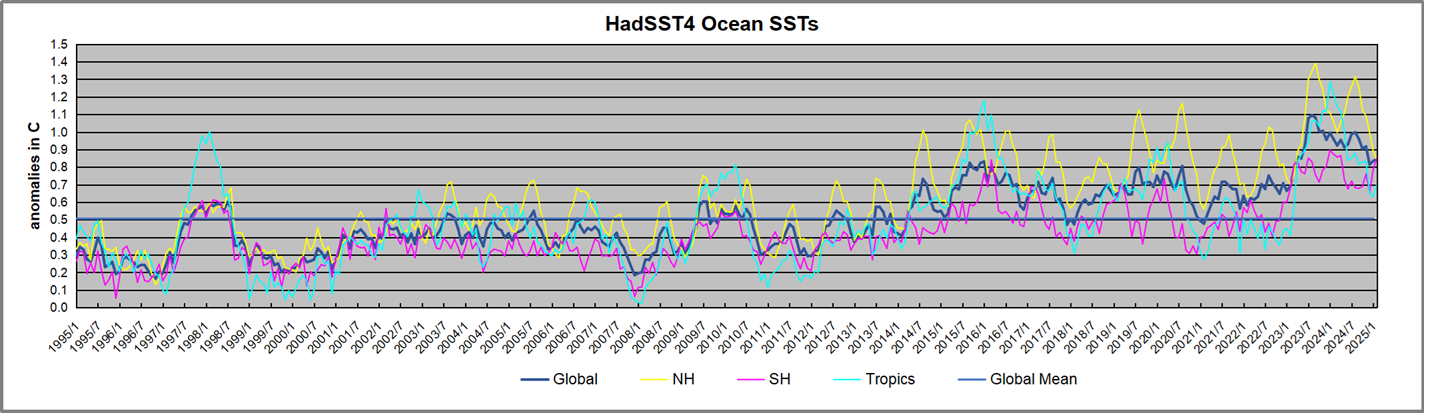

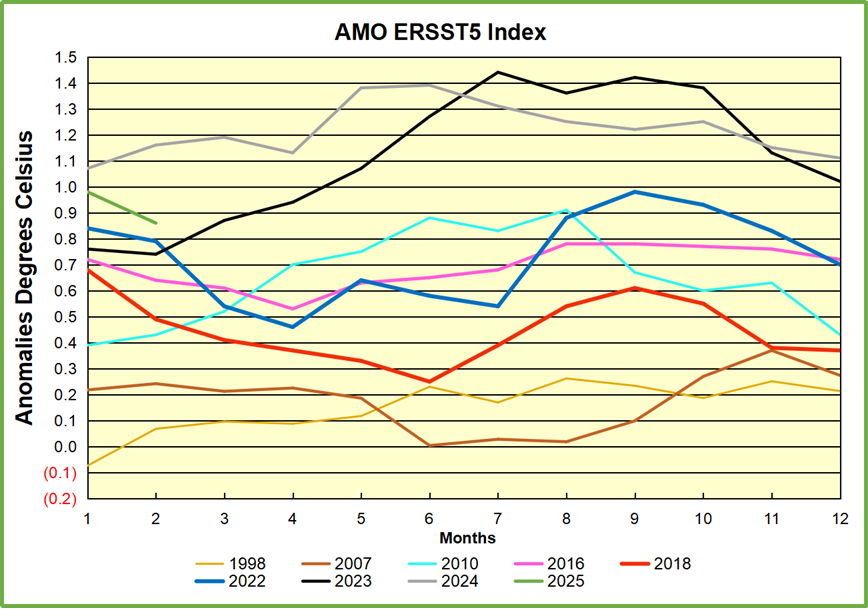

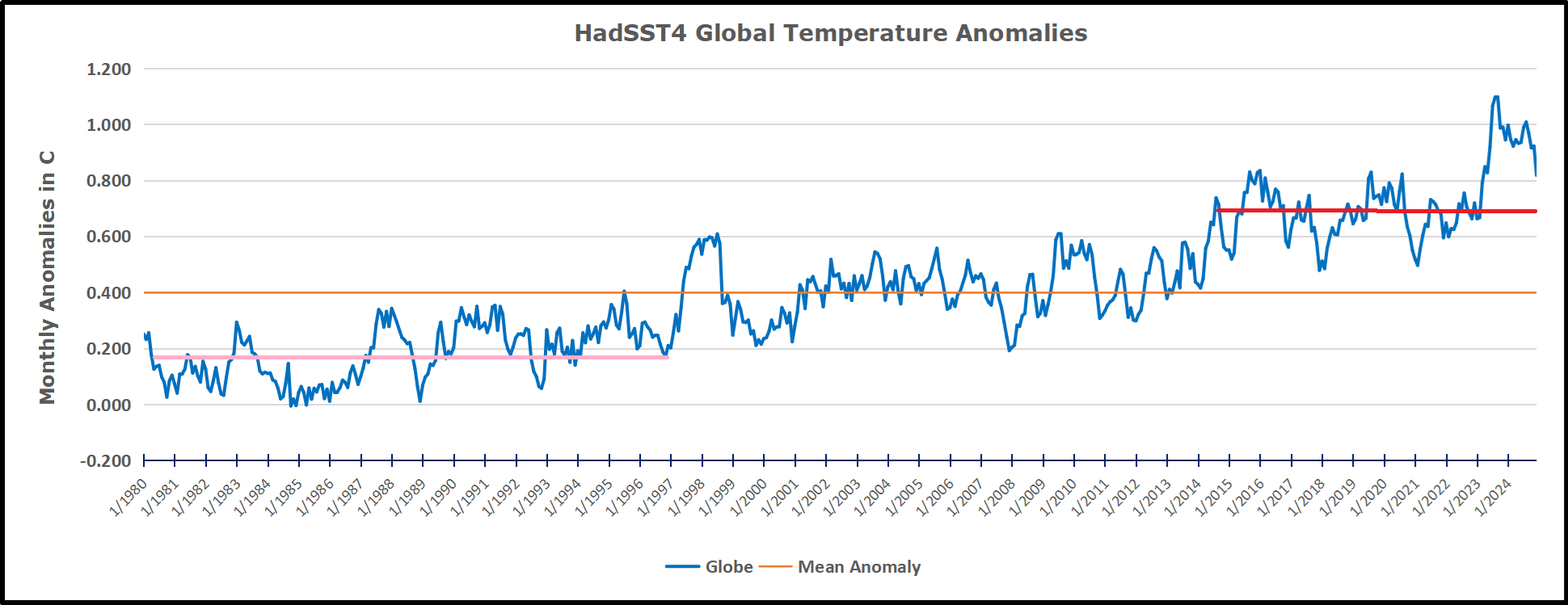

The best context for understanding decadal temperature changes comes from the world’s sea surface temperatures (SST), for several reasons:
