Covid Recedes in Canada August

The map shows that in Canada 8979 deaths have been attributed to Covid19, meaning people who died having tested positive for the SARS CV2 virus. This number accumulated over a period of 204 days starting January 31. The daily death rate reached a peak of 177 on May 6, 2020, and is down to 5 as of yesterday. More details on this below, but first the summary picture. (Note: 2019 is the latest demographic report.)

| | Canada Pop | Annual Deaths | Daily Deaths | Risk per Person |
|---|---|---|---|---|
| 2019 | 37589262 | 330786 | 906 | 0.8800% |
| Covid 2020 | 37589262 | 8979 | 44 | 0.0239% |

Over the epidemic months, the average Covid daily death rate amounted to 5% of the All Causes death rate. During this time a Canadian had an average risk of roughly 1 in 4,200 (0.0239%) of dying with SARS CV2, versus a 1 in 114 chance of dying regardless of that infection. As shown later below, the risk varied greatly with age and was much lower for younger, healthier people.
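For readers who want to check the arithmetic behind the summary table, here is a minimal sketch in Python. The input figures come from the table and text above; the function and variable names are my own, chosen only for illustration.

```python
# Inputs taken from the summary table and the text above.
POPULATION = 37_589_262
ALL_CAUSE_DEATHS_2019 = 330_786
COVID_DEATHS = 8_979
COVID_DAYS = 204  # days from January 31 to the report date


def daily_rate(deaths, days):
    """Average deaths per day over the period."""
    return deaths / days


def risk_per_person(deaths, population):
    """Share of the population that died over the period."""
    return deaths / population


all_daily = daily_rate(ALL_CAUSE_DEATHS_2019, 365)
covid_daily = daily_rate(COVID_DEATHS, COVID_DAYS)
all_risk = risk_per_person(ALL_CAUSE_DEATHS_2019, POPULATION)
covid_risk = risk_per_person(COVID_DEATHS, POPULATION)

print(f"All causes: {all_daily:.0f}/day, risk {all_risk:.4%}, 1 in {1 / all_risk:.0f}")
print(f"Covid:      {covid_daily:.0f}/day, risk {covid_risk:.4%}, 1 in {1 / covid_risk:.0f}")
print(f"Covid share of daily deaths: {covid_daily / all_daily:.1%}")
```

Note that the two risks cover different time spans (a full year for all causes, 204 days for Covid), so the comparison is indicative rather than exact.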

Background Updated from Previous Post

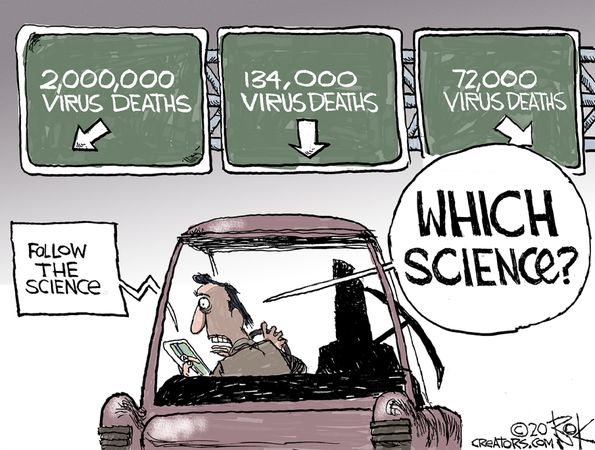

In reporting on the Covid19 pandemic, governments have provided information intended to frighten the public into compliance with orders constraining freedom of movement and activity. For example, the above map of the Canadian experience is all cumulative, and the curve will continue upward as long as cases can be found and deaths attributed. As shown below, we can work around this myopia by calculating the daily differentials, and then averaging newly reported cases and deaths over seven days to smooth out lumps in the data processing by institutions.
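The two steps just described (daily differentials, then a seven-day average) can be sketched as follows. This is only an illustration: the function names are mine and the sample cumulative series is made up, not Canadian data.

```python
def daily_from_cumulative(cumulative):
    """Turn a cumulative series into daily increments.

    The first day keeps its own value, since there is no earlier day to subtract.
    """
    if not cumulative:
        return []
    return [cumulative[0]] + [b - a for a, b in zip(cumulative, cumulative[1:])]


def trailing_mean(values, window=7):
    """Average of the `window` days ending on each date.

    Dates without a full window behind them get None.
    """
    out = []
    for i in range(len(values)):
        if i + 1 < window:
            out.append(None)
        else:
            out.append(sum(values[i + 1 - window : i + 1]) / window)
    return out


# Hypothetical cumulative death counts for ten consecutive days.
sample_cumulative = [0, 2, 5, 5, 9, 14, 20, 27, 27, 40]
sample_daily = daily_from_cumulative(sample_cumulative)
sample_smoothed = trailing_mean(sample_daily)
```

A trailing window (ending on the stated date) is used here rather than a centered one, so the latest date always has a value.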

A second major deficiency is lack of reporting of recoveries, including people infected and not requiring hospitalization or, in many cases, without professional diagnosis or treatment. The only recoveries presently to be found are limited statistics on patients released from hospital. The only way to get at the scale of recoveries is to subtract deaths from cases, considering survivors to be in recovery or cured. Comparing such numbers involves the delay between infection, symptoms and death. Herein lies another issue of terminology: a positive test for the SARS CV2 virus is reported as a case of the disease COVID19. In fact, an unknown number of people have been infected without symptoms, and many with very mild discomfort.

August 7 in the UK it was reported (here) that around 10% of coronavirus deaths recorded in England – almost 4,200 – could be wiped from official records due to an error in counting. Last month, Health Secretary Matt Hancock ordered a review into the way the daily death count was calculated in England citing a possible ‘statistical flaw’. Academics found that Public Health England’s statistics included everyone who had died after testing positive – even if the death occurred naturally or in a freak accident, and after the person had recovered from the virus. Numbers will now be reconfigured, counting deaths if a person died within 28 days of testing positive much like Scotland and Northern Ireland…

Professor Heneghan, director of the Centre for Evidence-Based Medicine at Oxford University, who first noticed the error, told the Sun: ‘It is a sensible decision. There is no point attributing deaths to Covid-19 28 days after infection…

For this discussion let’s assume that anyone reported as dying from COVID19 tested positive for the virus at some point prior. From the reasoning above, let us also assume that 28 days after testing positive for the virus, survivors can be considered recoveries.

Recoveries are calculated as cases minus deaths with a lag of 28 days. Daily cases and deaths are averages of the seven days ending on the stated date. Recoveries are # of cases from 28 days earlier minus # of daily deaths on the stated date. Since both testing and reports of Covid deaths were sketchy in the beginning, this graph begins with daily deaths as of April 24, 2020 compared to cases reported on March 27, 2020.
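As a rough sketch of the lagged calculation described above: recoveries on a given date are the averaged cases from 28 days earlier minus the averaged deaths on that date. The function name and the sample series are hypothetical; both inputs are assumed to be seven-day averages aligned by date.

```python
LAG_DAYS = 28  # assumed delay between a positive test and counting a survivor as recovered


def estimated_recoveries(avg_cases, avg_deaths, lag=LAG_DAYS):
    """Recoveries[t] = cases[t - lag] - deaths[t]; None where no lagged cases exist."""
    if len(avg_cases) != len(avg_deaths):
        raise ValueError("cases and deaths must cover the same dates")
    out = []
    for t in range(len(avg_cases)):
        if t < lag:
            out.append(None)  # no case count from 28 days earlier
        else:
            out.append(avg_cases[t - lag] - avg_deaths[t])
    return out


# Hypothetical 30-day series: cases rising from 100, deaths flat at 2 per day.
sample_cases = list(range(100, 130))
sample_deaths = [2] * 30
sample_recoveries = estimated_recoveries(sample_cases, sample_deaths)
```

With a 28-day lag, a series starting on March 27 yields its first recovery estimate on April 24, which matches the starting dates given above.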

The line shows the Positivity metric for Canada, starting at nearly 8% for new cases on April 24, 2020. That is, for the 7-day period ending April 24, there was a daily average of 21,772 tests and 1715 new cases reported. Since then the rate of new cases has dropped, and has held steady at ~1% since mid-June. Yesterday, the daily average number of tests was 43,612 with 375 new cases. So despite double the testing, the positivity rate is not climbing. Another view of the data is shown below.
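Positivity here is simply averaged daily new cases divided by averaged daily tests. A small sketch using the figures quoted above (the function name is my own):

```python
def positivity(avg_daily_cases, avg_daily_tests):
    """Fraction of tests that came back positive."""
    if avg_daily_tests <= 0:
        raise ValueError("number of tests must be positive")
    return avg_daily_cases / avg_daily_tests


april_24 = positivity(1715, 21_772)   # 7-day averages ending April 24, 2020
latest = positivity(375, 43_612)      # 7-day averages ending yesterday
testing_ratio = 43_612 / 21_772       # how much testing has grown

print(f"April 24: {april_24:.1%}, latest: {latest:.1%}, testing x{testing_ratio:.1f}")
```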

The scale of testing has increased and has now reached nearly 50,000 a day, while positive tests (cases) are hovering at 1% positivity. The shape of the recovery curve resembles the case curve lagged by 28 days, since deaths are a small portion of cases. The recovery rate has grown from 83% to 98%, holding steady over the last 2 weeks. This approximation surely understates the number of those infected with SARS CV2 who are healthy afterwards, since antibody studies show infection rates multiples higher than confirmed positive tests (8 times higher in Canada). In absolute terms, cases are now down to 375 a day and deaths 5 a day, while estimates of recoveries are 285 a day.
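The latest recovery rate can be checked from the figures just quoted. One assumption on my part: the rate is taken to be estimated recoveries divided by the cases reported 28 days earlier, and since recoveries are defined as lagged cases minus deaths, those lagged cases equal recoveries plus deaths.

```python
def recovery_rate(recoveries, deaths):
    """Recoveries as a share of the lagged cases they were derived from.

    Assumes lagged cases = recoveries + deaths, per the definition used in this post.
    """
    lagged_cases = recoveries + deaths
    if lagged_cases <= 0:
        raise ValueError("no lagged cases to compare against")
    return recoveries / lagged_cases


latest_rate = recovery_rate(recoveries=285, deaths=5)
print(f"Latest recovery rate: {latest_rate:.0%}")
```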

Summary of Canada Covid Epidemic

It took a lot of work, but I was able to produce something akin to the Dutch advice to their citizens.

The media and governmental reports focus on total accumulated numbers which are big enough to scare people to do as they are told. In the absence of contextual comparisons, citizens have difficulty answering the main (perhaps only) question on their minds: What are my chances of catching Covid19 and dying from it?

A previous post reported that the Netherlands parliament was provided with the type of guidance everyone wants to see.

For Canadians, the most similar analysis is this one from the Daily Epidemiology Update:

The table presents only those cases with full clinical documentation, which included some 2194 deaths compared to the 5842 total reported. The numbers show that under 60 years old, few adults and almost no children have anything to fear.

Update May 20, 2020

It is really quite difficult to find cases and deaths broken down by age groups. For Canadian national statistics, I resorted to a report from Ontario to get the age distributions, since that province provides 69% of the cases outside of Quebec and 87% of the deaths. Applying those proportions across Canada results in this table. For Canada as a whole nation:

| Age | Risk of Test + | Risk of Death | Population per 1 CV death |
|---|---|---|---|
| <20 | 0.05% | None | NA |
| 20-39 | 0.20% | 0.000% | 431817 |
| 40-59 | 0.25% | 0.002% | 42273 |
| 60-79 | 0.20% | 0.020% | 4984 |
| 80+ | 0.76% | 0.251% | 398 |

In the worst case, if you are a Canadian aged more than 80 years, you have a 1 in 400 chance of dying from Covid19. If you are 60 to 80 years old, your odds are 1 in 5000. Younger than that, it’s only slightly higher than winning (or in this case, losing) the lottery.
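The method behind the national table (applying one province's age proportions to national totals, then dividing by the population in each age group) can be sketched like this. All names and sample numbers below are hypothetical placeholders for illustration, not the Ontario or Statistics Canada figures.

```python
def allocate_by_share(national_total, shares):
    """Split a national total across age groups in proportion to the given shares."""
    total_share = sum(shares.values())
    return {age: national_total * s / total_share for age, s in shares.items()}


def population_per_death(population, deaths):
    """How many people there are per one death; None when there are no deaths."""
    if deaths == 0:
        return None
    return population / deaths


# Hypothetical inputs: provincial age shares of deaths and national population by age.
death_shares = {"<20": 0.0, "20-39": 0.01, "40-59": 0.05, "60-79": 0.27, "80+": 0.67}
population_by_age = {"<20": 8_000_000, "20-39": 10_000_000, "40-59": 10_000_000,
                     "60-79": 8_000_000, "80+": 1_600_000}
national_deaths = 1_000  # placeholder total

deaths_by_age = allocate_by_share(national_deaths, death_shares)
for age, deaths in deaths_by_age.items():
    pop = population_by_age[age]
    per_death = population_per_death(pop, deaths)
    risk = deaths / pop
    label = "NA" if per_death is None else f"{per_death:,.0f}"
    print(f"{age:>6}: risk of death {risk:.3%}, population per 1 death {label}")
```

The same allocation works for cases by swapping in case shares. The approach assumes the province's age distribution is representative of the rest of the country, which is a simplification.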

As noted above, Quebec provides the bulk of cases and deaths in Canada, and also reports age distribution more precisely. The numbers in the table below show risks for Quebecers.

| Age (years) | Risk of Test + | Risk of Death | Population per 1 CV death |
|---|---|---|---|
| 0-9 | 0.13% | 0 | NA |
| 10-19 | 0.21% | 0 | NA |
| 20-29 | 0.50% | 0.000% | 289,647 |
| 30-39 | 0.51% | 0.001% | 152,009 |
| 40-49 | 0.63% | 0.001% | 73,342 |
| 50-59 | 0.53% | 0.005% | 21,087 |
| 60-69 | 0.37% | 0.021% | 4,778 |
| 70-79 | 0.52% | 0.094% | 1,069 |
| 80-89 | 1.78% | 0.469% | 213 |
| 90+ | 5.19% | 1.608% | 62 |

While some of the risk factors are higher in the viral hotspot of Quebec, it is still the case that under 80 years of age, your risk of dying from Covid19 is lower than 1 in 1000, and much lower the younger you are.

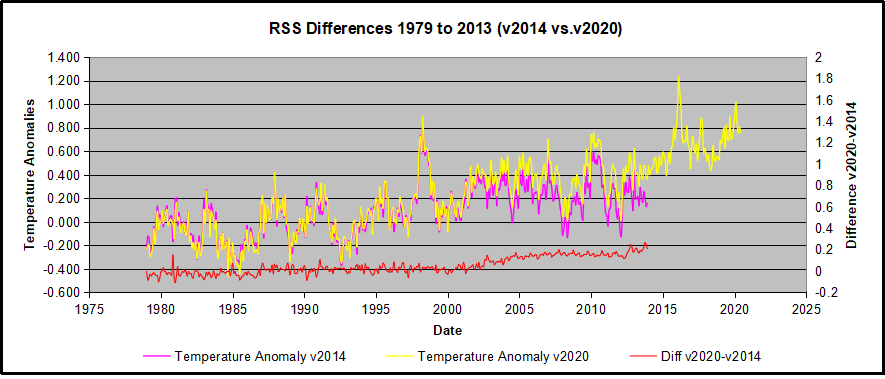

With apologies to Paul Revere, this post is on the lookout for cooler weather with an eye on both the Land and the Sea. UAH has updated their tlt (temperatures in lower troposphere) dataset for July 2020. Previously I have done posts on their reading of ocean air temps as a prelude to updated records from HADSST3. This month also has a separate graph of land air temps because the comparisons and contrasts are interesting as we contemplate possible cooling in coming months and years.
