How to FLICC Off Climate Alarms

John Ridgway has provided an excellent framework for skeptics to examine and respond to claims from believers in global warming/climate change. His essay at Climate Scepticism is Deconstructing Scepticism: The True FLICC. Excerpts in italics with my bolds and added comments.

Overview

I have modified slightly the FLICC components to serve as a list of actions making up a skeptical approach to an alarmist claim. In other words, this is a checklist for applying critical intelligence to alarmist discourse in the public arena. The Summary can be stated thusly:

♦ Follow the Data

Find and follow the data and facts to where they lead

♦ Look for full risk profile

Look for a complete assessment of risks and costs from proposed policies

♦ Interrogate causal claims

Inquire into claimed cause-effect relationships

♦ Compile contrary explanations

Construct an organized view of contradictory evidence to the theory

♦ Confront cultural bias

Challenge attempts to promote consensus story with flimsy coincidence

A Case In Point

John Ridgway illustrates how this method works in a comment:

No sooner have I pressed the publish button than the BBC comes out with the perfect example of what I have been writing about: Climate change: Rising sea levels threaten 200,000 England properties

It tells of a group of experts theorizing that 200,000 coastal properties are soon to be lost due to climate change. Indeed, it “is already happening” as far as Happisburgh on the Norfolk coast is concerned. Coastal erosion is indeed a problem there.

But did the experts take into account that the data shows no acceleration of erosion over the last 2000 years? No.

Have they acknowledged the fact that erosion on the East coast is a legacy of glaciation? No.

[For the US example of this claim, see my post Sea Level Scare Machine]

The FLICC Framework

Below is Ridgway’s text regarding this thought process, followed by a synopsis of his discussion of the five elements. Text is in italics with my bolds.

As part of the anthropogenic climate change debate, and when discussing the proposed plans for transition to Net Zero, efforts have been made to analyse the thinking that underpins the typical sceptic’s position. These analyses have universally presupposed that such scepticism stubbornly persists in the face of overwhelming evidence, as reflected in the widespread use of the term ‘denier’. Consequently, they are based upon taxonomies of flawed reasoning and methods of deception and misinformation.1

However, by taking such a prejudicial approach, the analyses have invariably failed to acknowledge the ideological, philosophical and psychological bases for sceptical thinking. The following taxonomy redresses that failing and, as a result, offers a more pertinent analysis that avoids the worst excesses of opinionated philippic. The taxonomy identifies a basic set of ideologies and attitudes that feature prominently in the typical climate change sceptic’s case. For my taxonomy I have chosen the acronym FLICC:2

- Follow data but distrust judgement and speculation

i.e. value empirical evidence over theory and conjecture.

- Look for the full risk profile

i.e. when considering the management of risks and uncertainties, demand that those associated with mitigating and preventative measures are also taken into account.

- Interrogate causal arguments

i.e. demand that both necessity and sufficiency form the basis of a causal analysis.

- Contrariness

i.e. distrust consensus as an indicator of epistemological value.

- Cultural awareness

i.e. never underestimate the extent to which a society can fabricate a truth for its own purposes.

All of the above have a long and legitimate history outside the field of climate science. The suggestion that they are not being applied in good faith by climate change sceptics falls beyond the remit of taxonomical analysis and strays into the territory of propaganda and ad hominem.

The five ideologies and attitudes of climate change scepticism introduced above are now discussed in greater detail.

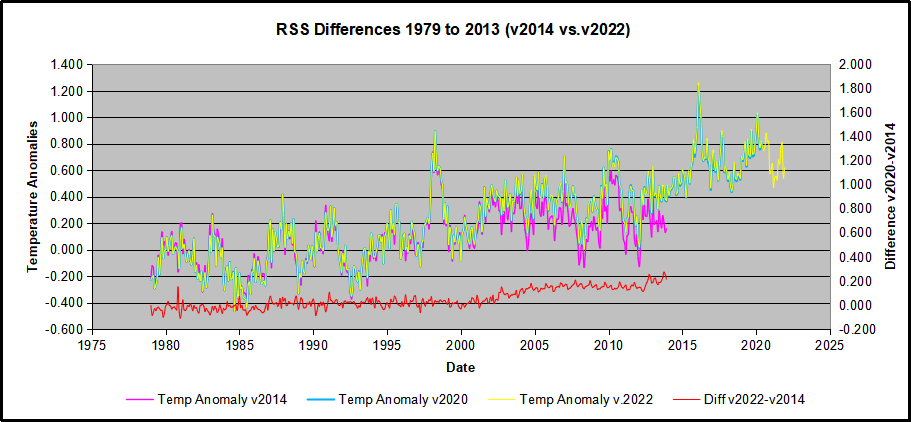

Following the data

Above all else, the sceptical approach is characterized by a reluctance to draw conclusions from a given body of evidence. When it comes to evidence supporting the idea of a ‘climate crisis’, such reluctance is judged by many to be pathological and indicative of motivated reasoning. Cognitive scientists use the term ‘conservative belief revision’ to refer to an undue reluctance to update beliefs in accordance with a new body of evidence. More precisely, when the individual retains the view that events have a random pattern, thereby downplaying the possibility of a causative factor, the term used is ‘slothful induction’. Either way, the presupposition is that the individual is committing a logical fallacy resulting from cognitive bias.

However, far from being a pathology of thinking, such reluctance has its legitimate foundations in Pyrrhonian philosophy and, when properly understood, it can be seen as an important thinking strategy.3 Conservative belief revision and slothful induction can indeed lead to false conclusions but, more importantly, the error most commonly encountered when making decisions under uncertainty (and the one with the greatest potential for damage) is to downplay unknown and possibly random factors and instead construct a narrative that overstates and prejudges causation. This tendency is central to the human condition and it lies at the heart of our failure to foresee the unexpected – this is the truly important cognitive bias that the sceptic seeks to avoid.

The empirical sceptic is cognisant of evidence and allows the formulation of theories but treats them with considerable caution due to the many ways in which such theories often entail unwarranted presupposition.

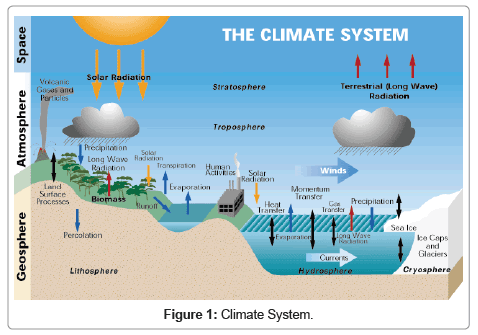

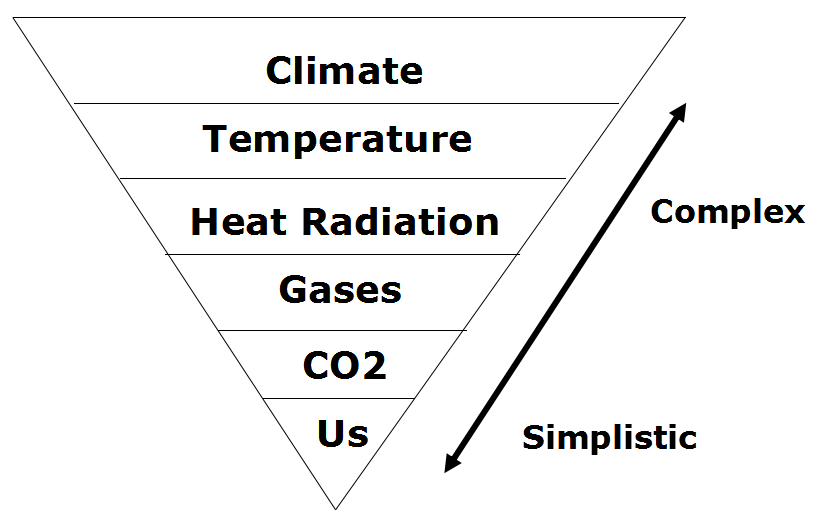

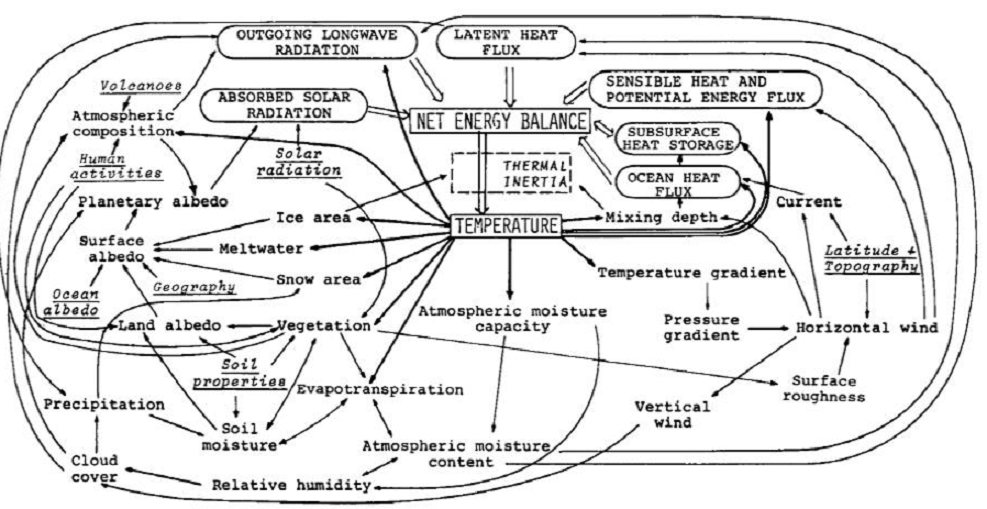

The drivers behind this problem are the propensity of the human mind to seek patterns, to construct narratives that hide complexities, to over-emphasise the causative role played by human agents and to under-emphasise the role played by external and possibly random factors. Ultimately, it is a problem regarding the comprehension of uncertainty — we comprehend in a manner that has served us well in evolutionary terms but has left us vulnerable to unprecedented, high consequence events.

It is often said that a true sceptic is one who is prepared to accept the prevailing theory once the evidence is ‘overwhelming’. The climate change sceptic’s reluctance to do so is taken as an indication that he or she is not a true sceptic. However, we see here that true scepticism lies in the willingness to challenge the idea that the evidence is overwhelming – it only seems overwhelming to those who fail to recognise the ‘theorizing disease’ and lack the resolve to resist it. Secondly, there cannot be a climate change sceptic alive who is not painfully aware of the humiliation handed out to those who resist the theorizing.

In practice, the theorizing and the narratives that trouble the empirical sceptic take many forms. It can be seen in:

♦ over-dependence upon mathematical models for which the tuning owes more to art than science.

♦ readiness to treat the output of such models as data resulting from experiment, rather than the hypotheses they are.

♦ lack of regard for ontological uncertainty (i.e. the unknown unknowns which, due to their very nature, the models do not address).

♦ emergence of story-telling as a primary weapon in the armoury of extreme weather event attribution.

♦ willingness to commit trillions of pounds to courses of action that are predicated upon Representative Concentration Pathways and economic models that are the ‘theorizing disease’ writ large.

♦ contributions of the myriad of activists who seek to portray the issues in a narrative form laden with social justice and other ethical considerations.

♦ imaginative but simplistic portrayals of climate change sceptics and their motives; portrayals that are drawing categorical conclusions that cannot possibly be justified given the ‘evidence’ offered. And;

♦ any narrative that turns out to be unfounded when one follows the data.

Climate change may have its basis in science and data, but this basis has long since been overtaken by a plethora of theorizing and causal narrative that sometimes appears to have taken on a life of its own. Is this what settled science is supposed to look like?

Looking for the full risk profile

Almost as fundamental as the sceptic’s resistance to theorizing and narrative is his or her appreciation that the management of anthropogenic warming (particularly the transition to Net Zero) is an undertaking beset with risk and uncertainty. This concern reflects a fundamental principle of risk management: proposed actions to tackle a risk are often in themselves problematic and so a full risk analysis is not complete until it can be confirmed that the net risk will decrease following the actions proposed.7

Firstly, the narrative of existential risk is rejected on the grounds of empirical scepticism (the evidence for an existential threat is not overwhelming, it is underwhelming).

Secondly, even if the narrative is accepted, it has not been reliably demonstrated that the proposal for Net Zero transition is free from existential or extreme risks.

Indeed, given the dominant role played by the ‘theorizing disease’ and how it lies behind our inability to envisage the unprecedented high consequence event, there is every reason to believe that the proposals for Net Zero transition should be equally subject to the precautionary principle. The fact that they are not is indicative of a double standard being applied. The argument seems to run as follows: There is no uncertainty regarding the physical risk posed by climate change, but if there were it would only add to the imperative for action. There is also no uncertainty regarding the transition risk, but if there were it could be ignored because one can only apply the precautionary principle once!

This is precisely the sort of inconsistency one encounters when uncertainties are rationalised away in order to support the favoured narrative.

The upshot of this double standard is that the activists appear to be proceeding with two very different risk management frameworks depending upon whether physical or transition risk is being considered. As a result, risks associated with renewable energy security, the environmental damage associated with proposals to reduce carbon emissions and the potentially catastrophic effects of the inevitable global economic shock are all played down or explained away.

Looking for the full risk profile is a basic of risk management practice. The fact that it is seen as a ploy used only by those wishing to oppose the management of anthropogenic climate change is both odd and worrying. It is indeed important to the sceptic, but it should be important to everyone.
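The net-risk test described in this section can be sketched in a few lines of code. This is only an illustration under stated assumptions: risk is measured as expected loss (probability times loss), which is one common convention rather than anything the quoted essay specifies, and the `Risk` class, the `net_risk_change` helper and every number below are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Risk:
    """A single risk, scored as expected loss (an assumed convention)."""
    name: str
    probability: float  # chance of the loss occurring, between 0 and 1
    loss: float         # size of the loss in arbitrary units

    def expected_loss(self) -> float:
        return self.probability * self.loss


def total(risks: Iterable[Risk]) -> float:
    """Sum the expected losses of a set of risks."""
    return sum(r.expected_loss() for r in risks)


def net_risk_change(baseline, residual, introduced) -> float:
    """Risk after the action minus risk before it.

    baseline:   risks if nothing is done
    residual:   the same risks after the proposed action
    introduced: new risks created by the action itself
    A negative result means the action lowers net risk.
    """
    return total(residual) + total(introduced) - total(baseline)


# Hypothetical numbers, chosen only to show the bookkeeping.
baseline = [Risk("hazard, no action", 0.10, 100.0)]
residual = [Risk("hazard, after action", 0.04, 100.0)]
introduced = [Risk("side effect of the action", 0.05, 80.0)]

change = net_risk_change(baseline, residual, introduced)
print(f"net change in expected loss: {change:+.2f}")
```

The point of the sketch is the third argument: leaving `introduced` empty makes almost any action look beneficial, which is the incomplete risk profile the section warns against.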

Interrogating causal arguments

For many years we have been told that anthropogenic climate change will make bad things happen. These dire predictions were supposed to galvanize the world into action but that didn’t happen, no doubt partly due to the extent to which such predictions repeatedly failed to come true (as, for example, with the predictions of the disappearance of Arctic sea ice) . . . This is one good reason for the empirical sceptic to distrust the narrative,8 but an even better one lies in the very concept of causation.

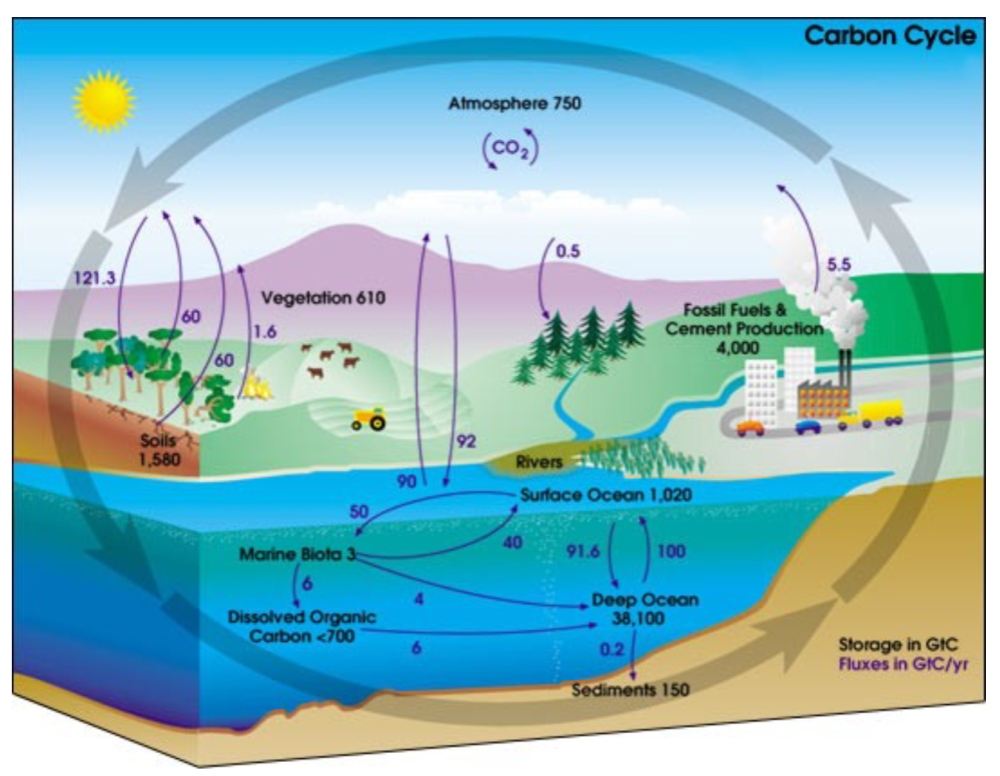

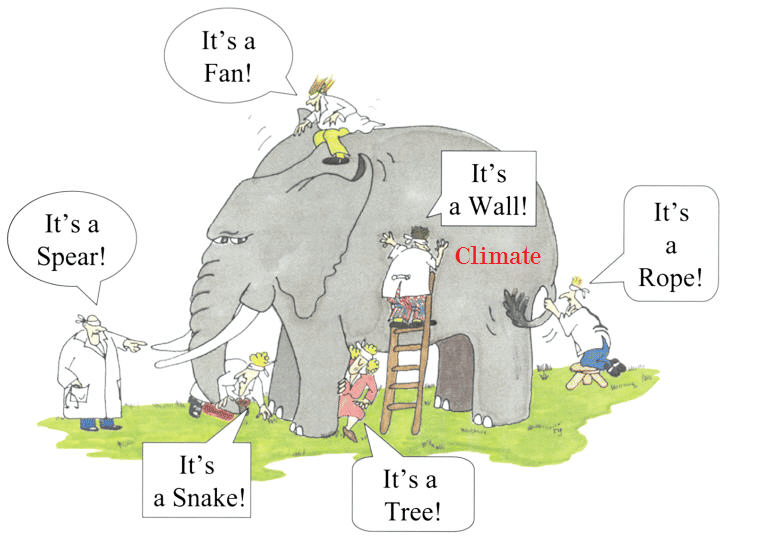

A major purpose of narrative is to reduce complexity so that the ‘truth’ can shine through. This is particularly the case with causal narratives. We all want executive summaries and sound bites such as ‘Y happened because of X’. But very few of us are interested in examining exactly what we mean by such statements – very few except, of course, for the empirical sceptics. In a messy world in which many factors may be at play, the more pertinent questions are:

♦ To what extent was X necessary for Y to happen?

♦ To what extent was X sufficient for Y to happen?

The vast majority of the extreme weather event attribution narrative is focused upon the first question and very little attention is paid to the second; at least not in the many press bulletins issued. Basically, we are told that the event was virtually impossible without climate change, but very little is said regarding whether climate change on its own was enough.

This problem of oversimplification is even more worrying once one starts to examine consequential damages whilst failing to take into account man-made failings such as those that exacerbate the impacts of floods and forest fires.9 The oversimplification of causal narrative is not restricted to weather-related events, of course. Climate change, we are told, is wreaking havoc with the flora and fauna and many species are dying out as a result. However, when such claims are examined more closely,10 it is invariably the case that climate change has been lumped in with a number of other factors that are destroying habitat.

When climate change sceptics point this out they are, of course, accused of cherry-picking. The truth, however, is that their insistence that the extended causal narrative of necessity and sufficiency should be respected is nothing more than the consequence of following the data and looking for the full risk profile.
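The two questions about necessity and sufficiency can be made concrete with a small calculation. The sketch below uses one standard formalization from the causal inference literature, the probabilities of necessity and sufficiency, which is an assumption brought in here and not something the quoted essay relies on. Under the usual simplifying assumptions (monotonicity and exogeneity) they reduce to PN = 1 - p0/p1 and PS = 1 - (1 - p1)/(1 - p0), where p1 is the probability of the event with the factor present and p0 the probability without it. The function names and the example probabilities are hypothetical.

```python
def probability_of_necessity(p_with: float, p_without: float) -> float:
    """PN = 1 - p0/p1: how far the event depended on the factor being present.

    Assumes monotonicity and exogeneity; the result is floored at 0.
    """
    if not 0.0 < p_with <= 1.0:
        raise ValueError("p_with must be in (0, 1]")
    return max(0.0, 1.0 - p_without / p_with)


def probability_of_sufficiency(p_with: float, p_without: float) -> float:
    """PS = 1 - (1 - p1)/(1 - p0): how far the factor alone produces the event.

    Assumes monotonicity and exogeneity; the result is floored at 0.
    """
    if not 0.0 <= p_without < 1.0:
        raise ValueError("p_without must be in [0, 1)")
    return max(0.0, 1.0 - (1.0 - p_with) / (1.0 - p_without))


# Hypothetical yearly probabilities of an extreme event,
# with and without the factor under discussion.
p_with, p_without = 0.01, 0.001

pn = probability_of_necessity(p_with, p_without)
ps = probability_of_sufficiency(p_with, p_without)
print(f"necessity:   {pn:.3f}")
print(f"sufficiency: {ps:.3f}")
```

With these illustrative inputs the necessity score is high while the sufficiency score is below one percent. This is the pattern the passage describes: an event can be reported as very unlikely without the factor even though the factor on its own rarely produces it.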

Contrariness

The climate change debate is all about making decisions under uncertainty, so it is little surprise that gaining consensus is seen as centrally important. Uncertainty is reduced when the evidence is overwhelming and it is tempting to believe that the high level of consensus amongst climate scientists surely points towards there being overwhelming evidence. If one accepts this logic then the sceptic’s refusal to accept the consensus is just another manifestation of his or her denial.

Except, of course, an empirical sceptic would not accept this logic. Consensus does not result from a simple examination of concordant evidence, it is instead the fruit of the tendentious theorizing and simplifying narrative that the empirical sceptic intuitively distrusts. As explained above, there are a number of drivers that cause such theories and narratives to entail unwarranted presupposition, and it is naïve to believe that scientists are immune to such drivers.

However, the fact remains that consensus on beliefs is neither a sufficient nor a necessary condition for presuming that these beliefs constitute shared knowledge. It is only when a consensus on beliefs is uncoerced, uniquely heterogeneous and large, that a shared knowledge provides the best explanation of a given consensus.11 The notion that a scientific consensus can be trusted because scientists are permanently seeking to challenge accepted views is simplistic at best.

It is actually far from obvious that in climate science the conditions have been met for consensus to be a reliable indicator of shared knowledge.

Contrariness simply comes with the territory of being an empirical sceptic. The evidence of consensus is there to be seen, but the amount of theorizing and narrative required for its genesis, together with the social dimension to consensus generation, are enough for the empirical sceptic to treat the whole matter of consensus with a great deal of caution.

Cultural awareness

There has been a great deal said already regarding the culture wars surrounding issues such as the threat posed by anthropogenic climate change. Most of the concerns are directed at the sceptic, who for reasons never properly explained is deemed to be the instigator of the conflict. However, it is the sceptic who chooses to point out that the value-laden arguments offered by climate activists are best understood as part of a wider cultural movement in which rationality is subordinate to in-group versus out-group dynamics.

Psychological, ethical and spiritual needs lie at the heart of the development of culture and so the adoption of the climate change phenomenon in service of these needs has to be seen as essentially a cultural power play. The dangers of uncritically accepting the fruits of theorizing and narrative are only the beginning of the empirical sceptic’s concerns. Beyond that is the concern that the direction the debate is taking is not even a matter of empiricism – data analysis has little to offer when so much depends upon whether the phenomenon is subsequently to be described as warming or heating. It is for this reason that much of the sceptic’s attention is directed towards the manner in which the science features in our culture rather than the science itself. Such are our psychological, ethical and spiritual needs, that we must not underestimate the extent to which ostensibly scientific output can be moulded in their service.

Conclusions

Taxonomies of thinking should not be treated too seriously. Whilst I hope that I have offered here a welcome antidote to the diatribe that often masquerades as a scholarly appraisal of climate change scepticism, it remains the case that the form that scepticism takes will be unique to the individual. I could not hope to cover all aspects of climate change scepticism in the limited space available to me, but it remains my belief that there are unifying principles that can be identified.

Central to these is the concept of the empirical sceptic and the need to understand that there are sound reasons to treat theorizing and simplifying narratives with extreme caution. The empirical sceptic resists the temptation to theorize, preferring instead to keep an open mind on the interpretation of the evidence. This is far from being self-serving denialism; it is instead a self-denying servitude to the data.

That said, I cannot believe that there would be any activist who, upon reading this account, would see a reason to modify their opinions regarding the bad faith and irrationality that lies behind scepticism. This, unfortunately, is only to be expected given that such opinions are themselves the result of theorizing and simplifying narrative.

Footnote:

While the above focuses on climate alarmism, there are many other social and political initiatives that are theory-driven, suffering from inadequate attention to analysis by empirical sceptics. One has only to note corporate and governmental programs based on Critical Race or Gender theories. In addition, COVID policies in advanced nations ignored the required full risk profiling, as well as overturning decades of epidemiological knowledge in favor of models and experimental gene therapies proposed by Big Pharma.

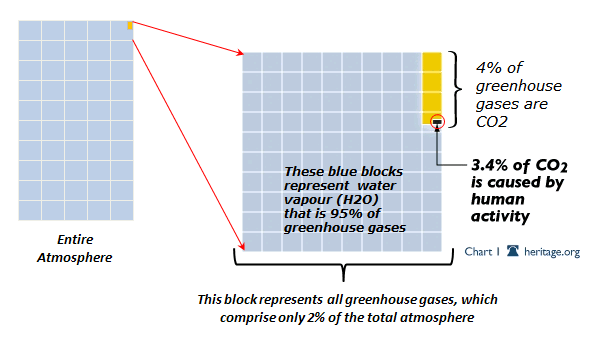

Others think it means: It is real that using fossil fuels causes global warming. This too lacks persuasive evidence.

Conclusion

Ryan McMaken explains at Mises
