Michelle Dispels CO2 Hysteria

Thanks to a post at Friends of Science, I was alerted to an important declaration by Michelle. On March 17, 2017, Michelle Stirling presented “The Myth of the 97% Consensus” to the FreedomTalk.ca Annual Conference in Calgary, Alberta.

Because it’s not Michelle Obama speaking out, no one knows about it and few even care. Which tells you all you need to know about global warming/climate change. It’s a social phenomenon, now completely detached from reason and science. It is not what you know; it’s how many friends know you that gives you impact regarding the climate. Celebrity and popularity are convincing; detailed facts and knowledge, not so much.

At the end of this post is a synopsis and link showing how thoroughly Stirling debunks the “97% consensus”. Much of that will be familiar to readers, so the excerpts here will emphasize the way Michelle puts the whole climatism movement into socio-economic context.

Why Claim 97%? – Ultimate Social Proof

One feature that stands out in most of the claimed consensus studies, no matter how small the relevant sample, is the repeated figure of 97%. Many of the 97% consensus studies are co-authored or supported by social psychologists.

The groundbreaking work of Cialdini (2007) demonstrated that humans are significantly motivated to comply according to ‘social proof’ – in other words, “if everyone agrees, that is proof enough so get on the bandwagon.” Just as social media ‘trending’ leads to more people following the story, social proofs work on the inherently gregarious nature of humans and our herd mentality. The 97% figure delivers two powerful psychological messages in one – i) ‘everyone’ agrees, and ii) you will be left out.

To compound the psychological impact upon the dissenting 3% of the population, climate bullying terms like ‘denier,’ and more recently various high-profile ‘witch hunts,’ even at the Presidential level, have been employed by activists. These actions activate physical and emotional pain centers in the victims, as found by Williams (2001) and Kross et al (2011) in their work on ostracism, making most people reluctant to speak up with any questions regarding the science, policy, cost or impact on industry. In practical terms, many dissidents have lost their jobs for daring to challenge ‘the consensus.’ Williams (2007) found that being ostracized was the ‘kiss of social death.’

A more nuanced study with clear definitions done by PBL Netherlands Environmental Assessment Agency reveals that out of 1,868 respondents, only 43% agreed with the IPCC definition: “It is extremely likely {95%+ certainty} that more than half of [global warming] from 1951 to 2010 was caused by [human activity].”

Clearly, van der Linden et al (2015), Ding et al (2011), and Dunlap & McCright (2011) are correct in stating that consensus is an important tool and a gateway belief for acceptance of public policy on climate change. However, the question is, should we be making policy based on statistically manipulated consensus studies that lack clearly defined empirical parameters, or should public policy be based on actual scientific evidence?

The Cost of Delusion

Despite several surveys claiming that ‘consensus’ is a valuable driver of public acceptance of climate change, and expressions of dismay that a large percentage of the public continues to reject the alleged ‘consensus’ and to question human-caused climate change claims in general, the foregoing demonstrates that ‘belief’ and ‘consensus’ are not grounds for action on climate change. If anything, such thinking is more likely to lead to extraordinary mass delusions, such as the Mississippi Scheme, the South-Sea Bubble, and Tulipomania, all three of which nearly bankrupted the national economies of France, England and the Netherlands, respectively (Mackay 2008).

There has been a concerted effort to push the climate catastrophe perspective by well-funded foundations, philanthropies and institutional investors which are bound by the UN Principles for Responsible Investment to invest in renewables and clean-tech, despite clean-tech having been found to be a ‘noble way to lose money’ after several patient years of investment, according to a past CIO of CalPERS in a 2013 Wall Street Journal interview. (my bold)

Indeed, a review of the performance of renewable energy companies is concerning – particularly the level of expectation and investment versus the scope of real or possible failure. The electric vehicle company “A Better Place” was valued at some $2 billion in the fall of 2012; by the spring of 2013 it had gone bankrupt, valued at only $12 million, despite having had a raft of experienced Wall Street investors. More recently, Spain’s Abengoa began spectacular bankruptcy proceedings, also putting some 27,000 employees world-wide at risk of unemployment. (my bold)

Unusual new market instruments like the ‘yieldco’ have led to catastrophic financial outcomes, as in the case of SunEdison’s $16.1 billion bankruptcy filing. Devonshire Research (Part II-May 2016) is claiming that the much-vaunted Tesla is reliant on subsidies: “Tesla is not a car, battery, or tech company; it is an experimental financial services company and should be regulated as such” and that “Tesla has engaged in aggressive accounting that calls to mind the experiences of Enron and WorldCom; its future is highly uncertain.” (my bold)

Recent research by Cambridge engineering professor Michael J. Kelly (2016) shows that wind and solar do not provide sufficient Energy Return on Energy Invested (EROI) to maintain even basic society, and that: “all the actions taken together until now to reduce our emissions of carbon dioxide will not achieve a serious reduction, and in some cases, they will actually make matters worse.”

Thus, there is an evident divide between evidence and ideologies when the concepts of ‘renewable’ and ‘sustainable’ are applied in the field, as well.

The Ethics of CO2 Hysteria

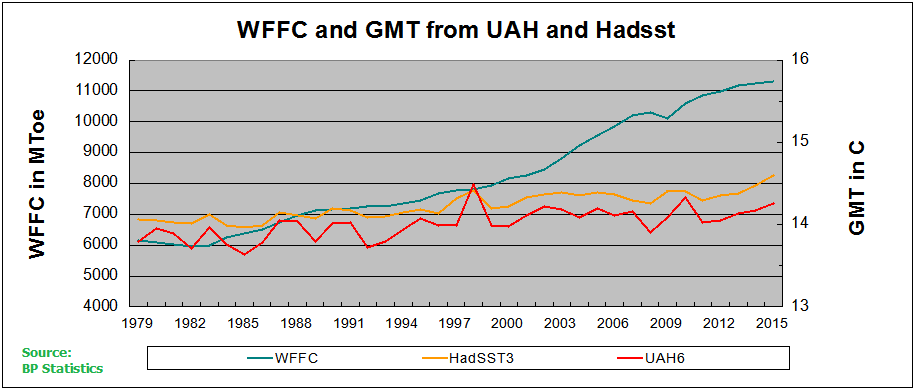

Climate change is often framed as a moral and ethical concern, thus one must question the ethics of those participating in peer-reviewed research who are psychology professionals but who employ such tactics, especially when the scientific evidence of global temperature rise does not support the Catastrophic Anthropogenic Global Warming theory. This discrepancy between the surveyed ‘beliefs’ and the physical evidence demonstrates that opinion-based ‘consensus’ surveys are scientifically worthless and are an improper and potentially dangerous basis for making climate change policy.

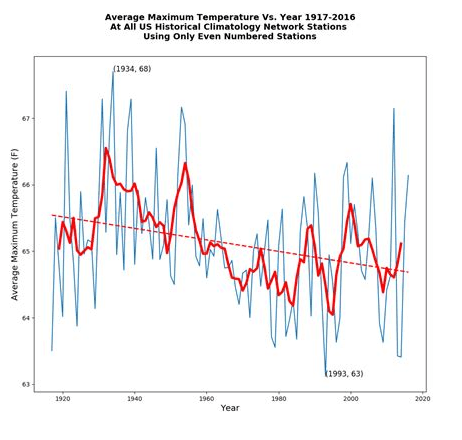

To date, much of the world’s diverse climate policy has been predicated upon public acceptance that there is an urgent crisis of human-caused global warming, but this claim is not supported by the temperature records. As noted by Tol in a response to the Grantham Research Institute: “The twenty-two studies cited above all agree that the impact of climate change is small relative to economic growth. This was found in studies by Professor William Nordhaus and Professor Samuel Fankhauser. It was confirmed by the Intergovernmental Panel on Climate Change from its Second Assessment Report, in a chapter led by the late Professor David Pearce, to its Fifth Assessment Report, in a chapter led by me. Even the highest estimate, the 20% upper bound by Lord Professor Nicholas Stern of Brentford, has that a century of climate change is not worse than losing a decade of economic growth.” [bold emphasis added]

Thus, even economic evidence does not support the ‘belief’ in human-caused global warming; actual temperature data certainly does not support the claims of impending catastrophic climate change.

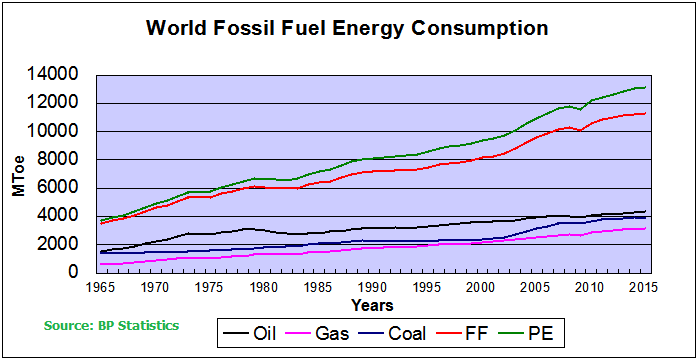

The evidence shows that the world runs on three cubic miles of oil equivalent energy every year, of which one cubic mile is oil. All renewable devices such as wind turbines and solar panels are manufactured using vast amounts of oil, natural gas and coal. As Vaclav Smil notes, ‘to get wind you need oil.’

Conclusion

Science is not a democratic undertaking. It is unfortunate that respected scientific journals continue to publish such papers without critical vetting as to whether the ‘consensus’ claims equate to the empirical evidence. Public policy on climate change should be evidence-based and carefully thought through in the context of longer time-scales, historical evidence and paleoclimatology.

There is no consideration that the study of 4 billion years of climate change, written in the strata of the earth, might make those scientists working with fossil fuel industries question the claims of Anthropogenic Global Warming proponents whose evidence relies on spotty temperature records of some 100 years, climate models and unproven theories.

While much good came of the original impetus of the “Law of the Atmosphere” in terms of reducing noxious pollutants, much economic and social harm is being done by the current hysteria focussed solely on carbon dioxide. France has learned that lesson the hard way, having incentivized diesel cars and trucks in order to reduce carbon dioxide, only to find its gem – the City of Lights – Paris – blackened with the worst air quality in the world thanks to a significant rise in soot and nitrogen oxide.

Consensus = nonsensus. We must look at the evidence over ideology.

Climate Lemmings

The excerpts above come from Michelle Stirling’s paper, Consensus Nonsensus on 97%: Science is not a Democracy.

Stirling’s presentation dissects the 97% consensus; the PowerPoint slides are here: The Myth of the 97% consensus