Currently some Zero Carbon zealots are trying to discredit and disappear a peer-reviewed study of atmospheric CO2 concentrations because its findings contradict IPCC dogma. The paper is World Atmospheric CO2, Its 14C Specific Activity, Non-fossil Component, Anthropogenic Fossil Component, and Emissions (1750–2018) by Skrable et al. (2022). The link is to the paper and also shows the comments recently addressed to the authors and the editor of the journal, as well as responses by both.

This came to my attention by way of a comment by one of the attackers on my 2022 post regarding this study. Text is below in italics with my bolds.

D. Andrews 10/7/2023

This post is over a year old, but in the interest of correcting the record, please note the following:

1. Skrable et al. have conceded that the data they “guesstimated” bore little resemblance to actual atmospheric radiocarbon data.

2. In a reanalysis using good data, they still find that the present atmosphere contains more 14C than if the entire atmospheric carbon increase since 1750 was 14C-free fossil fuel carbon. But that is no surprise. Atmospheric carbon and carbon from ocean/land reservoirs is continually mixing, with the result that net 14C moves to the 14C depleted atmosphere. Because of this mixing, one cannot infer the source of the atmospheric carbon increase from its present radiocarbon content.

3. One can conclude from the atmospheric increase being but half of human emissions, that ocean/land reservoirs are net sinks of carbon, not sources. The increase is clearly on us, not natural processes.

4. Because this paper was made open access by the Health Physics editor, while numerous rebuttals and the partial retraction were kept behind a paywall, it got far more attention than it deserved. Health Physics has now removed the paywall, making the rebuttals available from their website (for a limited time). See in particular the letters from Schwartz et al. and Andrews (myself).

Skrable et al. Respond:

None of the four letters to the editor in the June 2022 issue of Health Physics include any specific criticism of the assumptions, methodologies, and simple equations that we use in our paper to estimate the anthropogenic fossil and non-fossil components present each year in the atmosphere. We have estimated from the “No bombs” curve, modeled in the absence of the perturbation due to nuclear weapons testing, an approximation fitting function of annual expected specific activities.

Annual mean concentrations of CO2 in our paper are used along with our revised expected specific activities to calculate values of the anthropogenic fossil and non-fossil components of CO2. These values are presented in revisions of Table 2a, Table 2, and figures in our paper. They are included here in a revised supporting document for our paper, which provides a detailed discussion of the assumptions, methodology, equations, and example calculations of the two components of CO2 in 2018.

Our revised results support our original conclusions and produce an even smaller anthropogenic fraction of CO2 in the atmosphere. The file for the revised supporting document, including Table 2, is available at the link: (Supplemental Digital Content link, https://links.lww.com/HP/A230 provided by HPJ).

With respect to the elements of our paper (Skrable et al. 2022), our responses to this lengthy letter to the Health Physics Journal, which mostly contains extraneous comments and critiques that are wrong, are as follows:

- Assumptions: No specific critique of our assumptions is given in the letter. Other related criticisms include the value of S(0), the specific activity in 1750, and the assumption that bomb-produced 14C being released from reservoirs was not significant. Our use of the likely elevated S(0) value is explained and justified in the paper. Regarding the use of bomb-produced 14C recycling from reservoirs to the atmosphere, we did express our belief that this influence would be small because most of it remains in the oceans, and the entire bomb 14C represents a small fraction of all 14C present in the world.

- Methodology: No specific critique of our methodology is given in the letter. The major thrust of our paper was to describe a simple methodology for determining the anthropogenic portion of CO2 in the atmosphere, based on the dilution of naturally occurring 14CO2 by the anthropogenic fossil-derived CO2, the well-known Suess effect as acknowledged by Andrews and Tans.

- Equations: Our D14C equation expressed in per mil was obtained from the Δ14C equation reported by Miller et al., referenced in our paper. Our D14C equation is the same as NOAA’s Δ14C equation, and it does not agree with that in the letter. Our equation was not used to calculate D14C values. Rather, we extracted annual mean D14C values directly from a file provided by NOAA and used them to calculate annual mean values of the specific activity. The annual mean D14C values in our paper are consistent with those displayed in a figure by NOAA (https://gml.noaa.gov/ccgg/isotopes/c14tellsus.html).

- Results: As a consequence of our disagreement in (3) above, many of the comments, criticisms, and suggestions of why we did certain things are wrong in paragraph 3 and others.

- Technical Merits: The letter does not have any specific comments or criticisms of the simple equations used to estimate all components of CO2 by either of two independent pathways, which rely on the estimation of the annual changes since 1750 in either the 14C activity per unit volume or the 14C activity per gram of carbon in the atmosphere.

- Practical Significance: Andrews and Tans do not agree with our conclusion (10) on page 303 of our paper, which includes the practical significance of our paper that is not recognized by Andrews and Tans.

We stand by our methodology, results, and conclusions.

HPJ Editor Brant Ulsh Responds

The commentors argued that the Skrable paper is outside the scope of Health Physics. I disagree. The journal’s scope is clearly articulated in our Instructions for Authors (https://edmgr.ovid.com/hpj/accounts/ifauth.htm): . . . The Skrable et al. paper is solidly within our scope and adds to a body of similar research previously published in Health Physics.

The commentors asserted that the authors should have submitted their paper to a more relevant (in their opinion) journal (e.g., Journal of Geophysical Research or Geophysical Research Letters). It is not clear to me how the commentors could know what journals the authors submitted their manuscript to prior to submitting it to Health Physics. In their response to this criticism in this issue, Skrable and his co-authors revealed that they had indeed previously submitted a similar version of this manuscript to the Journal of Geophysical Research, but that journal was unable to secure two qualified peer-reviewers. I am assuming—though the authors did not state so—that part of the difficulty in securing peer-reviewers stemmed from the interdisciplinary nature of their work, which straddles radiation and atmospheric sciences. This leads to the last criticism I will address.

The commentors stated that the peer-reviewers selected by the Journal are unqualified to review Skrable et al. (2022) due to a lack of expertise in atmospheric sciences. Again, as Health Physics employs double-blind peer-review, and the identities of reviewers are kept confidential, it is not at all clear how the commentors could have known who reviewed this paper and their qualifications to do so. Regardless, this claim is without foundation. In fact, both peer-reviewers were selected specifically for their expertise in atmospheric science/meteorology/climate science.

In closing, I stand behind my decision to publish Skrable et al. (2022) in Health Physics. I invite our readers to examine the original paper, the criticisms in the Letters in this issue, and the authors’ responses to these criticisms and come to their own informed conclusions of this work.

Full Defence in Previous Post: By the Numbers: CO2 Mostly Natural

This post compiles several independent proofs which refute those reasserting the “consensus” view attributing all additional atmospheric CO2 to humans burning fossil fuels.

The IPCC doctrine which has long been promoted goes as follows. We have a number over here for monthly fossil fuel CO2 emissions, and a number over there for monthly atmospheric CO2. We don’t have good numbers for the rest of it (oceans, soils, biosphere), though rough estimates are orders of magnitude higher, dwarfing human CO2. So we ignore nature and assume it is always a sink, explaining the difference between the two numbers we do have. Easy peasy, science settled.

The non-IPCC paradigm is that atmospheric CO2 levels are a function of two very different fluxes. FF CO2 changes rapidly and increases steadily, while Natural CO2 changes slowly over time, and fluctuates up and down from temperature changes. The implications are that human CO2 is a simple addition, while natural CO2 comes from the integral of previous fluctuations.

1. History of Atmospheric CO2 Mostly Natural

This proof is based on the paper World Atmospheric CO2, Its 14C Specific Activity, Non-fossil Component, Anthropogenic Fossil Component, and Emissions (1750–2018) by Kenneth Skrable, George Chabot, and Clayton French at University of Massachusetts Lowell, cited above as Skrable et al. (2022).

The analysis employs ratios of carbon isotopes to calculate the relative proportions of atmospheric CO2 from natural sources and from fossil fuel emissions.

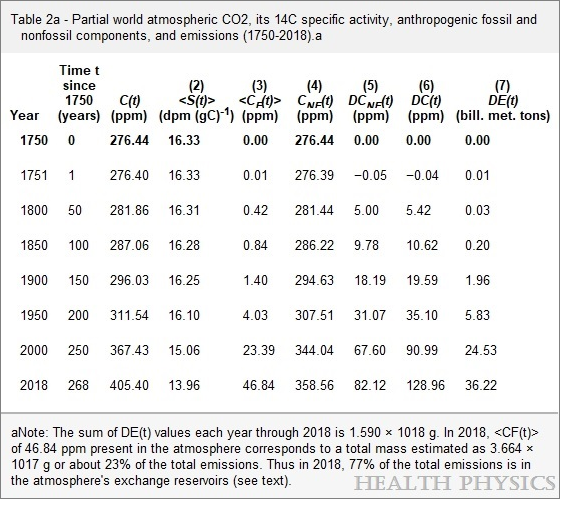

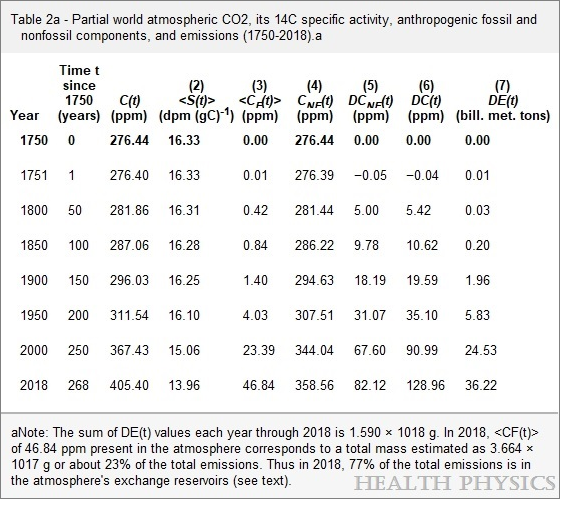

The specific activity of 14C in the atmosphere gets reduced by a dilution effect when fossil CO2, which is devoid of 14C, enters the atmosphere. We have used the results of this effect to quantify the two components: the anthropogenic fossil component and the non-fossil component. All results covering the period from 1750 through 2018 are listed in a table and plotted in figures.

These results negate claims that the increase in total atmospheric CO2 concentration C(t) since 1800 has been dominated by the increase of the anthropogenic fossil component. We determined that in 2018, atmospheric anthropogenic fossil CO2 represented 23% of the total emissions since 1750 with the remaining 77% in the exchange reservoirs. Our results show that the percentage of the total CO2 due to the use of fossil fuels from 1750 to 2018 increased from 0% in 1750 to 12% in 2018, much too low to be the cause of global warming.

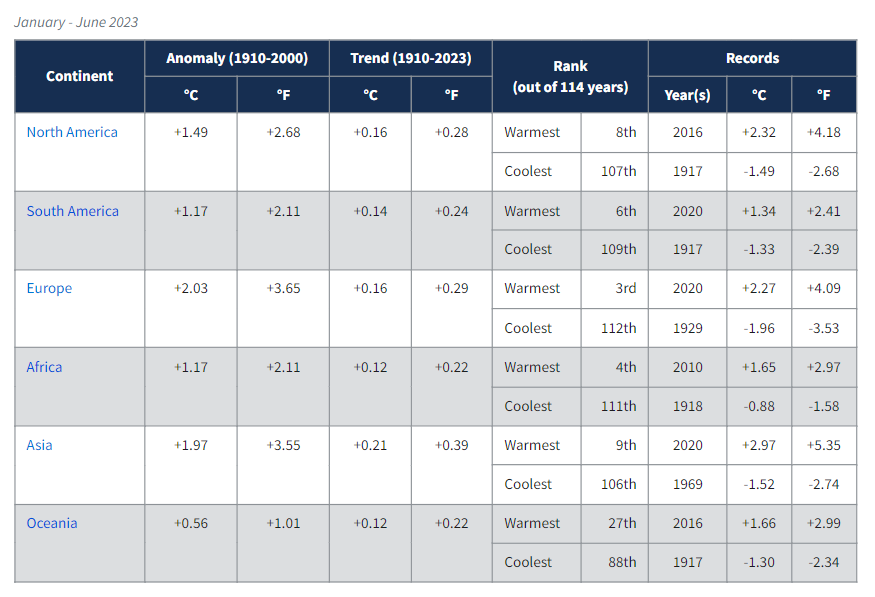

The graph above is produced from Skrable et al. dataset Table 2. World atmospheric CO2, its C‐14 specific activity, anthropogenic‐fossil component, non fossil component, and emissions (1750 ‐ 2018). The purple line shows reported annual concentrations of atmospheric CO2 from the Energy Information Administration (EIA). The starting value in 1750 is 276 ppm and the final value in this study is 406 ppm in 2018, a gain of 130 ppm.

The red line is based on EIA estimates of human fossil fuel CO2 emissions starting from zero in 1750 and the sum slowly accumulating over the first 200 years. The estimate of annual CO2 emitted from FF increases from 0.75 ppm in 1950 up to 4.69 ppm in 2018. The sum of all these annual emissions rises from 29.3 ppm in 1950 (from the previous 200 years) up to 204.9 ppm (from 268 years). These are estimates of historical FF CO2 emitted into the atmosphere, not the amount of FF CO2 found in the air.

Atmospheric CO2 is constantly in two-way fluxes between multiple natural sinks/sources, principally the ocean, soil and biosphere. The annual dilution of the carbon 14 proportion is used to calculate the fractions of atmospheric FF CO2 and Natural CO2 remaining in a given year. The blue line shows the FF CO2 fraction rising from 4.03 ppm in 1950 to 46.84 ppm in 2018. The cyan line shows the Natural CO2 fraction rising from 307.51 ppm in 1950 to 358.56 ppm in 2018.
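For readers who want to see the dilution logic in miniature, here is a rough sketch. It assumes a simple two-component mix in which fossil CO2 carries no 14C and the non-fossil component keeps a reference specific activity. The function name and the `s_nonfossil` parameter are my own illustrative choices, and the paper's actual equations (including its treatment of S(0)) may differ in detail.

```python
def fossil_component(c_total_ppm: float, s_measured: float, s_nonfossil: float) -> float:
    """Fossil CO2 (ppm) under a two-component dilution assumption.

    Assumes fossil CO2 has zero 14C, so the measured specific activity is
    s_nonfossil diluted by the fossil share: S = s_nonfossil * (1 - CF / C).
    """
    if s_nonfossil <= 0:
        raise ValueError("reference specific activity must be positive")
    return c_total_ppm * (1.0 - s_measured / s_nonfossil)


# 2018 components quoted in the post (ppm)
c_f_2018 = 46.84     # FF CO2 in the air
c_nf_2018 = 358.56   # Natural (non-fossil) CO2 in the air
c_total_2018 = c_f_2018 + c_nf_2018

# Dilution ratio S(t) / s_nonfossil implied by those two figures
implied_ratio = c_nf_2018 / c_total_2018

# Feeding the implied ratio back in recovers the fossil component
recovered = fossil_component(c_total_2018, implied_ratio, 1.0)
print(f"total = {c_total_2018:.2f} ppm, implied ratio = {implied_ratio:.4f}, "
      f"fossil = {recovered:.2f} ppm")
```

The point of the sketch is only that, under this assumption, a specific activity about 11.5% below the undiluted reference corresponds to the 46.84 ppm fossil component reported for 2018.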

The details of these calculations from observations are presented in the two links above, and the logic of the analysis is summarized in my previous post On CO2 Sources and Isotopes. The table below illustrates the factors applied in the analysis.

C(t) is total atmospheric CO2, S(t) is the Suess 14C effect, CF(t) is FF atmospheric CO2, CNF(t) is non-FF atmospheric CO2, and DE(t) is FF CO2 emissions.

Summary

Despite an estimated 205 ppm of FF CO2 emitted since 1750, only 46.84 ppm (23%) of FF CO2 remains, while the other 77% is distributed into natural sinks/sources. As of 2018 atmospheric CO2 was 405 ppm, of which 12% (47 ppm) originated from FF, and the other 88% (358 ppm) came from natural sources: 276 ppm prior to 1750, and 82 ppm since. Natural CO2 sources/sinks continue to drive rising atmospheric CO2, presently at a rate of 2 to 1 over FF CO2.
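The percentages in this summary follow directly from the ppm figures quoted earlier in the post. A small check, using only those numbers (the variable names are mine):

```python
# Figures quoted in the post (ppm)
emitted_ff_ppm = 204.9        # cumulative FF CO2 emitted, 1750-2018
ff_in_air_ppm = 46.84         # FF CO2 remaining in the air in 2018
natural_in_air_ppm = 358.56   # Natural CO2 in the air in 2018
baseline_1750_ppm = 276.0     # atmospheric CO2 in 1750

total_ppm = ff_in_air_ppm + natural_in_air_ppm

# Share of all FF emissions still in the air
airborne_share = ff_in_air_ppm / emitted_ff_ppm
# FF share of total atmospheric CO2
ff_share_of_air = ff_in_air_ppm / total_ppm
# Natural CO2 added since 1750, and its ratio to the FF component
natural_gain_ppm = natural_in_air_ppm - baseline_1750_ppm
natural_to_ff = natural_gain_ppm / ff_in_air_ppm

print(f"total in air:          {total_ppm:.2f} ppm")
print(f"FF still airborne:     {airborne_share:.1%} of emissions")
print(f"FF share of air:       {ff_share_of_air:.1%}")
print(f"natural gain:          {natural_gain_ppm:.2f} ppm")
print(f"natural-to-FF ratio:   {natural_to_ff:.2f}")
```

The shares come out at about 23% and 12%, and the natural-to-FF ratio is close to 1.8, which the summary rounds to 2 to 1.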

2. Analysis of CO2 Flows Confirms Natural Dominance

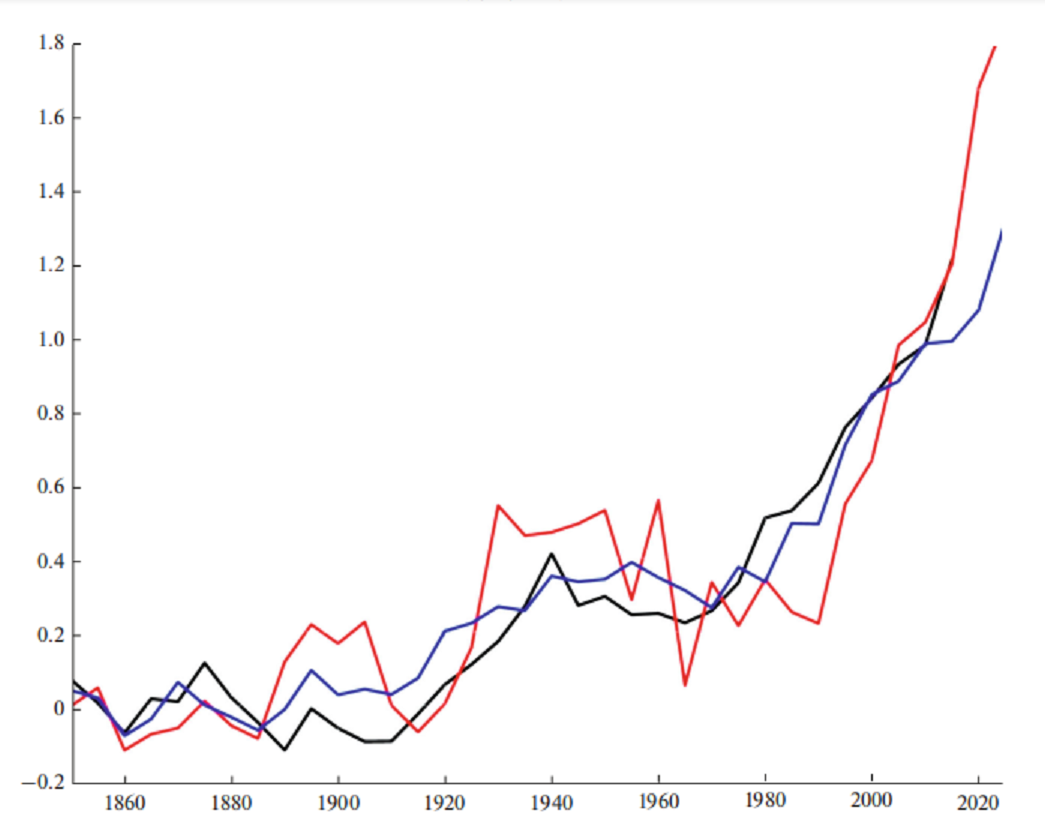

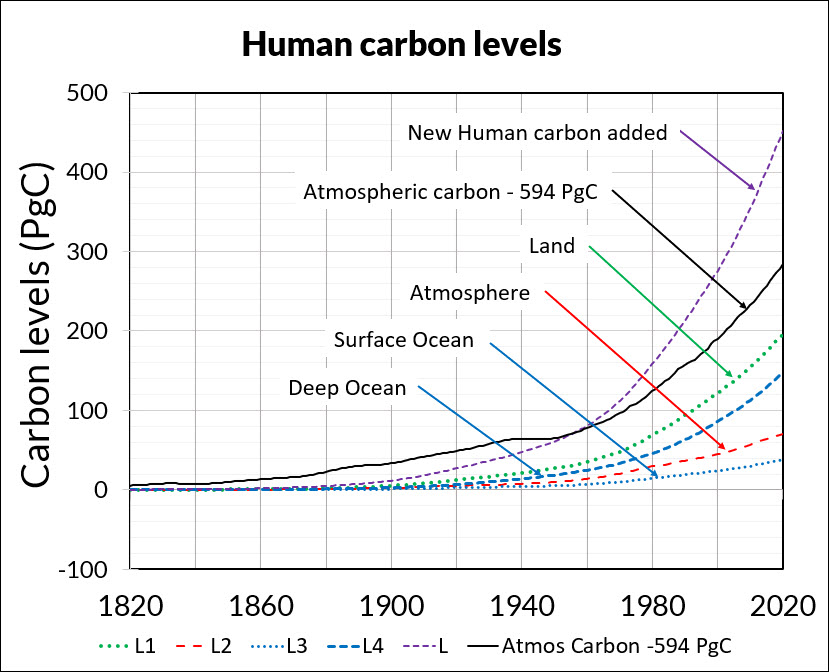

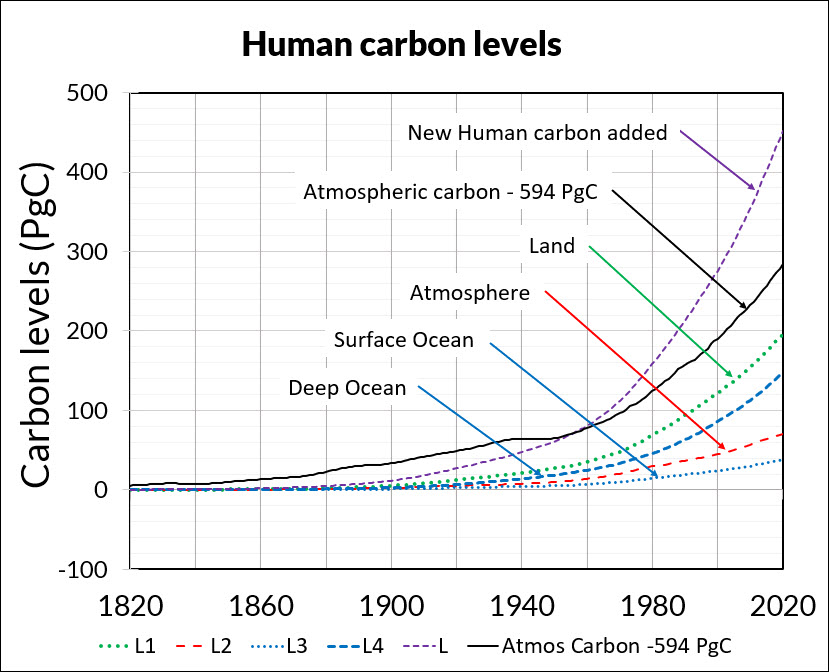

Figure 3. How human carbon levels change with time.

Independent research by Dr. Ed Berry focused on studying flows and levels of CO2 sources and sinks. The above summary chart from his published work presents a very similar result.

The graph above summarizes Dr. Berry’s findings. The lines represent CO2 added into the atmosphere since the 1750 level of 280 ppm. Based on IPCC data regarding CO2 natural sources and sinks, the black dots show the CO2 data. The small blue dots show the sum of all human CO2 emissions since they became measurable, irrespective of transfers of that CO2 from the atmosphere to land or to ocean.

Notice the CO2 data is greater than the sum of all human CO2 until 1960. That means nature caused the CO2 level to increase prior to 1960, with no reason to stop adding CO2 since. In fact, the analysis shows that in the year 2020, the human contribution to atmospheric CO2 level is 33 ppm, which means that from a 2020 total of 413 ppm, 280 is pre-industrial and 100 is added from land and ocean during the industrial era.
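The 2020 budget in that paragraph is simple subtraction, and the same three numbers also give the 25% and 75% split that appears in the title of Dr. Berry's paper. A quick check, with variable names of my own choosing:

```python
# 2020 figures quoted in the post (ppm)
total_2020_ppm = 413
preindustrial_ppm = 280
human_ppm = 33

# Increase over the industrial era, and the part not attributed to humans
increase_ppm = total_2020_ppm - preindustrial_ppm
natural_added_ppm = increase_ppm - human_ppm

human_share = human_ppm / increase_ppm
natural_share = natural_added_ppm / increase_ppm

print(f"increase since pre-industrial: {increase_ppm} ppm")
print(f"added from land and ocean:     {natural_added_ppm} ppm")
print(f"human share of increase:       {human_share:.0%}")
print(f"natural share of increase:     {natural_share:.0%}")
```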

My synopsis of his work is IPCC Data: Rising CO2 is 75% Natural

A new carbon cycle model shows human emissions cause 25% and nature 75% of the CO2 increase is the title (and link) for Dr. Edwin Berry’s paper accepted in the journal Atmosphere August 12, 2021.

3. Nature Erases Pulses of Human CO2 Emissions

Those committed to blaming humans for rising atmospheric CO2 sometimes admit that emitted CO2 (from any source) only stays in the air about 5 years (20% removed each year) being absorbed into natural sinks. But they then save their belief by theorizing that human emissions are “pulses” of additional CO2 which persist even when particular molecules are removed, resulting in higher CO2 concentrations. The analogy would be a traffic jam on the freeway which persists long after the blockage is removed.
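To put numbers on the "20% removed each year" figure, here is a minimal sketch assuming a constant fractional removal applied once per year. That is my simplification for illustration, not a claim about how any particular carbon cycle model treats removal.

```python
def fraction_remaining(years: int, annual_removal: float = 0.20) -> float:
    """Fraction of an initial amount left after `years` of constant fractional removal."""
    return (1.0 - annual_removal) ** years


# With a constant removal fraction r per year, the mean stay is 1 / r years
annual_removal = 0.20
mean_residence_years = 1.0 / annual_removal

for n in (1, 5, 10, 20):
    print(f"after {n:2d} years: {fraction_remaining(n):.1%} remains")
print(f"mean residence: {mean_residence_years:.0f} years")
```

Under this assumption about a third of the original amount is left after 5 years and roughly a tenth after 10.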

A recent study by Bud Bromley puts a fork in this theory. His paper is A conservative calculation of specific impulse for CO2. The title links to his text which goes through the math in detail. Excerpts are in italics here with my bolds.

In the 2 years following the June 15, 1991 eruption of the Pinatubo volcano, the natural environment removed more CO2 than the entire increase in CO2 concentration due to all sources, human and natural, during the entire measured daily record of the Global Monitoring Laboratory of NOAA/Scripps Oceanographic Institute (MLO) May 17, 1974 to June 15, 1991. Then, in the 2 years after that, that CO2 was replaced plus an additional increment of CO2.

The data and graphs produced by MLO also show a reduction in slope of total CO2 concentration following the June 1991 eruption of Pinatubo, and also show the more rapid recovery of total CO2 concentration that began about 2 years after the 1991 eruption. This graph is the annual rate of change (i.e., velocity or slope) of total atmosphere CO2 concentration. This graph is not human CO2.

More recent is his study Scaling the size of the CO2 error in Friedlingstein et al. Excerpts in italics with my bolds.

Since net human emissions would be a cumulative net of two fluxes, if there were a method to measure it, and since net global average CO2 concentration (i.e., NOAA Mauna Loa) is the net of two fluxes, then we should compare these data as integral areas. That is still an apples and oranges comparison because we only have the estimate of human emissions, not net human emissions. But at least the comparison would be in the right order of magnitude.

That comparison would look something like the above graphic. We would be comparing the entire area of the orange quadrangle to the entire blue area, understanding that the tiny blue area shown is much larger than it actually is because the amount shown is human emissions only, not net human emissions. Human CO2 absorptions have not been subtracted. Nevertheless, it should be obvious that (1) B is not causing A, and (2) the orange area is enormously larger than the blue area.

Human emissions cannot be driving the growth rate (slope) observed in net global average CO2 concentration.

4. Setting realistic proportions for the carbon cycle.

Hermann Harde applies a comparable perspective to consider the carbon cycle dynamics. His paper is Scrutinizing the carbon cycle and CO2 residence time in the atmosphere. Excerpts with my bolds.

Different to the IPCC we start with a rate equation for the emission and absorption processes, where the uptake is not assumed to be saturated but scales proportional with the actual CO2 concentration in the atmosphere (see also Essenhigh, 2009; Salby, 2016). This is justified by the observation of an exponential decay of 14C. A fractional saturation, as assumed by the IPCC, can directly be expressed by a larger residence time of CO2 in the atmosphere and makes a distinction between a turnover time and adjustment time needless.

Based on this approach and as solution of the rate equation we derive a concentration at steady state, which is only determined by the product of the total emission rate and the residence time. Under present conditions the natural emissions contribute 373 ppm and anthropogenic emissions 17 ppm to the total concentration of 390 ppm (2012). For the average residence time we only find 4 years.
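The steady-state relation described in this excerpt (concentration equals total emission rate times residence time) can be tried out numerically. The sketch below assumes the simplest first-order rate equation, dC/dt = e − C/τ, with constant e and τ. The function names and the Euler time step are my own choices for illustration and are not taken from Harde's paper.

```python
def steady_state_ppm(emission_ppm_per_yr: float, residence_yr: float) -> float:
    """Steady-state concentration of dC/dt = e - C / tau, i.e. C = e * tau."""
    return emission_ppm_per_yr * residence_yr


def integrate_ppm(emission_ppm_per_yr: float, residence_yr: float,
                  years: float = 40.0, dt: float = 0.1, c0: float = 0.0) -> float:
    """Forward-Euler integration of the same rate equation from c0."""
    c = c0
    for _ in range(int(round(years / dt))):
        c += dt * (emission_ppm_per_yr - c / residence_yr)
    return c


# Figures quoted in the excerpt
residence_yr = 4.0
natural_ppm = 373.0
anthro_ppm = 17.0

# Emission rates implied by C = e * tau
natural_rate = natural_ppm / residence_yr
anthro_rate = anthro_ppm / residence_yr
anthro_share_of_emissions = anthro_rate / (natural_rate + anthro_rate)

total_rate = natural_rate + anthro_rate
print(f"implied rates: natural {natural_rate:.2f}, anthropogenic {anthro_rate:.2f} ppm/yr")
print(f"anthropogenic share of emissions: {anthro_share_of_emissions:.1%}")
print(f"steady state: {steady_state_ppm(total_rate, residence_yr):.1f} ppm")
print(f"after 40 yr of integration: {integrate_ppm(total_rate, residence_yr):.1f} ppm")
```

With a 4 year residence time, the quoted 373 ppm and 17 ppm imply emission rates of 93.25 and 4.25 ppm per year, and the integration settles at the 390 ppm total within a few decades.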

The stronger increase of the concentration over the Industrial Era up to present times can be explained by introducing a temperature dependent natural emission rate as well as a temperature affected residence time. With this approach not only the exponential increase with the onset of the Industrial Era but also the concentrations at glacial and cooler interglacial times can well be reproduced in full agreement with all observations.

So, different to the IPCC’s interpretation the steep increase of the concentration since 1850 finds its natural explanation in the self accelerating processes on the one hand by stronger degassing of the oceans as well as a faster plant growth and decomposition, on the other hand by an increasing residence time at reduced solubility of CO2 in oceans. Together this results in a dominating temperature controlled natural gain, which contributes about 85% to the 110 ppm CO2 increase over the Industrial Era, whereas the actual anthropogenic emissions of 4.3% only donate 15%. These results indicate that almost all of the observed change of CO2 during the Industrial Era followed, not from anthropogenic emission, but from changes of natural emission. The results are consistent with the observed lag of CO2 changes behind temperature changes (Humlum et al., 2013; Salby, 2013), a signature of cause and effect. Our analysis of the carbon cycle, which exclusively uses data for the CO2 concentrations and fluxes as published in AR5, shows that also a completely different interpretation of these data is possible, this in complete conformity with all observations and natural causalities.

5. More CO2 Is Not a Problem But a Blessing

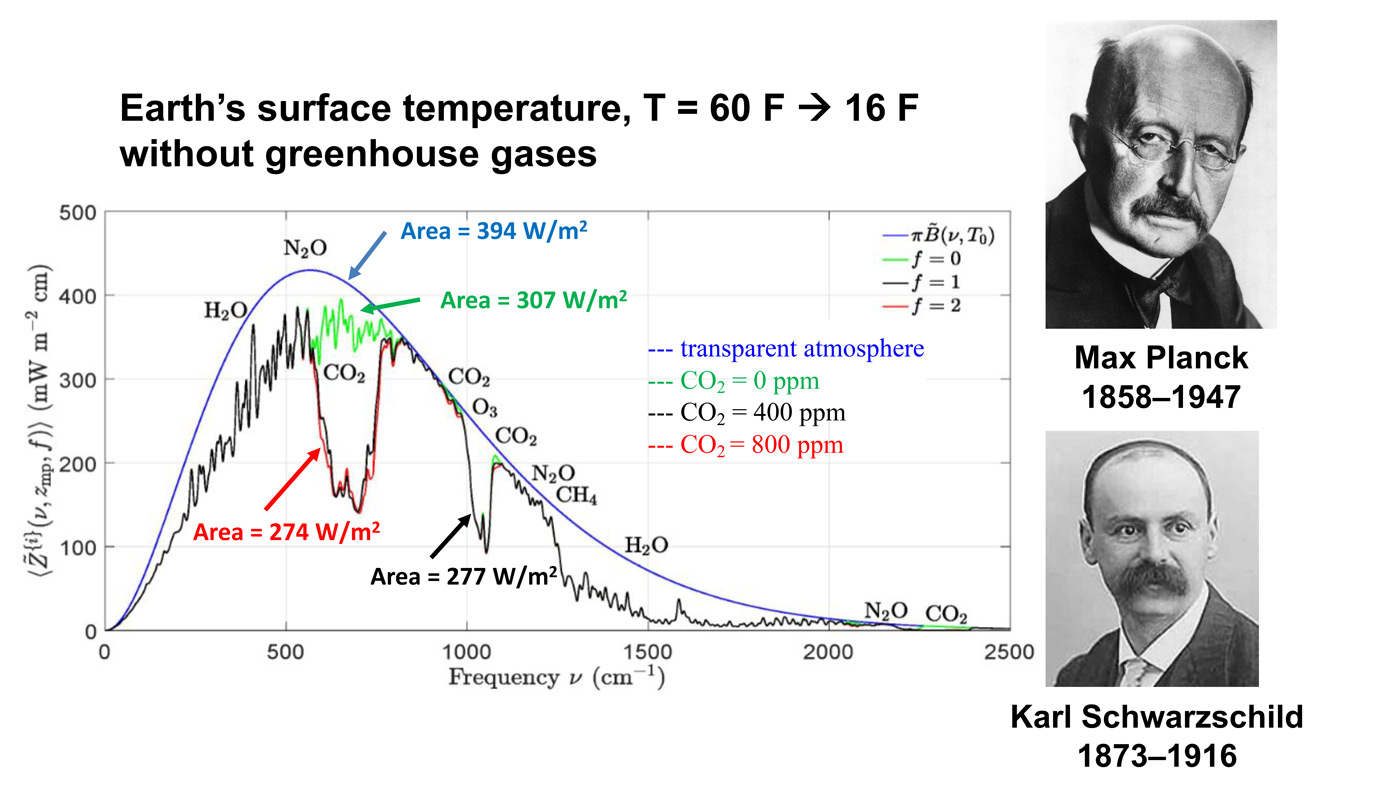

William Happer provides a framework for thinking about climate, based on his expertise regarding atmospheric radiation (the “greenhouse” mechanism). But he uses plain language accessible to all. The Independent Institute published the transcript for those like myself who prefer reading for full comprehension. Source: How to Think about Climate Change

His presentation boils down to two main points: More CO2 will result in very little additional global warming. But it will increase productivity of the biosphere. My synopsis is: Climate Change and CO2 Not a Problem. Brief excerpts in italics with my bolds.

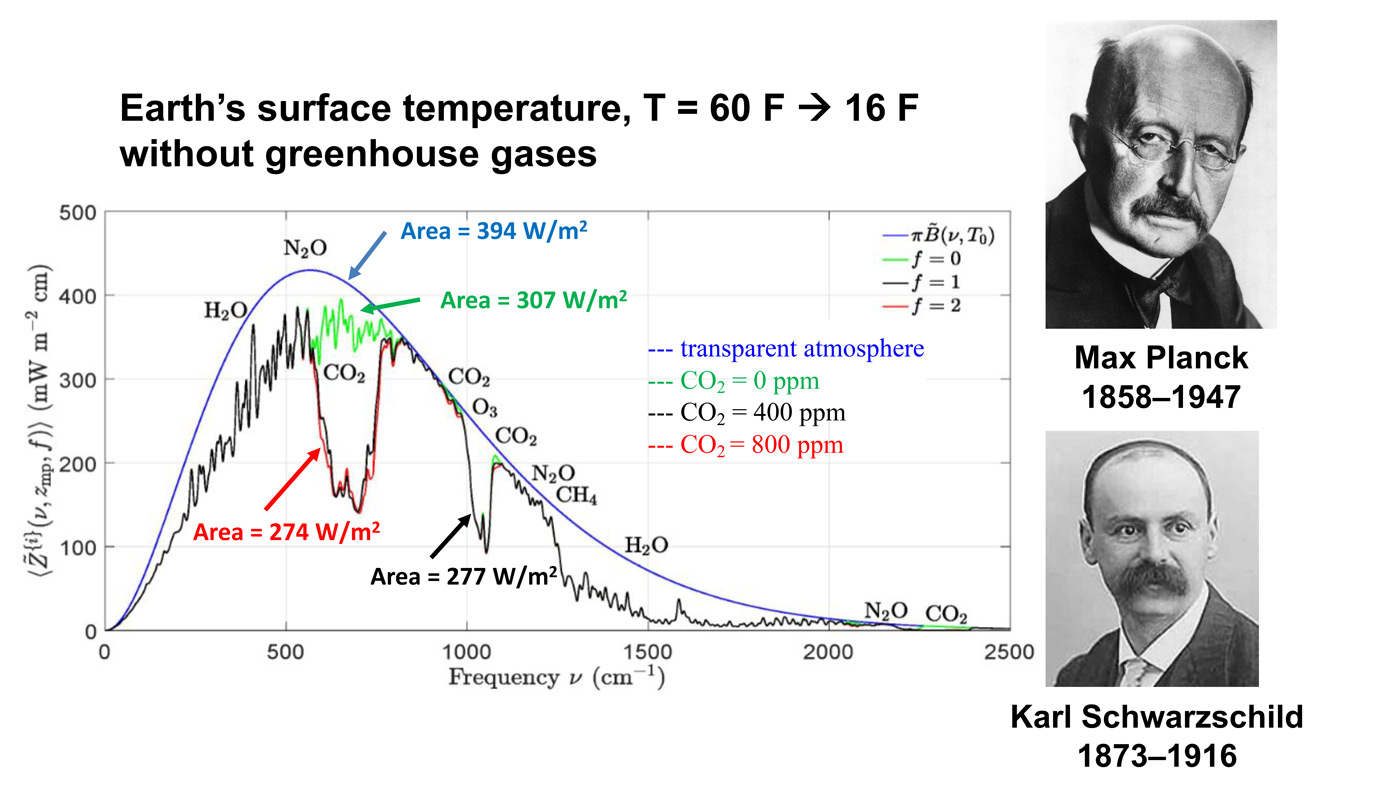

This is an important slide. There is a lot of history here and so there are two historical pictures. The top picture is Max Planck, the great German physicist who discovered quantum mechanics. Amazingly, quantum mechanics got its start from greenhouse gas-physics and thermal radiation, just what we are talking about today. Most climate fanatics do not understand the basic physics. But Planck understood it very well and he was the first to show why the spectrum of radiation from warm bodies has the shape shown on this picture, to the left of Planck. Below is a smooth blue curve. The horizontal scale, left to right is the “spatial frequency” (wave peaks per cm) of thermal radiation. The vertical scale is the thermal power that is going out to space. If there were no greenhouse gases, the radiation going to space would be the area under the blue Planck curve. This would be the thermal radiation that balances the heating of Earth by sunlight.

In fact, you never observe the Planck curve if you look down from a satellite. We have lots of satellite measurements now. What you see is something that looks a lot like the black curve, with lots of jags and wiggles in it. That curve was first calculated by Karl Schwarzschild, who first figured out how the real Earth, including the greenhouse gases in its atmosphere, radiates to space. That is described by the jagged black line. The important point here is the red line. This is what Earth would radiate to space if you were to double the CO2 concentration from today’s value. Right in the middle of these curves, you can see a gap in spectrum. The gap is caused by CO2 absorbing radiation that would otherwise cool the Earth. If you double the amount of CO2, you don’t double the size of that gap. You just go from the black curve to the red curve, and you can barely see the difference. The gap hardly changes.

The message I want you to understand, which practically no one really understands, is that doubling CO2 makes almost no difference.

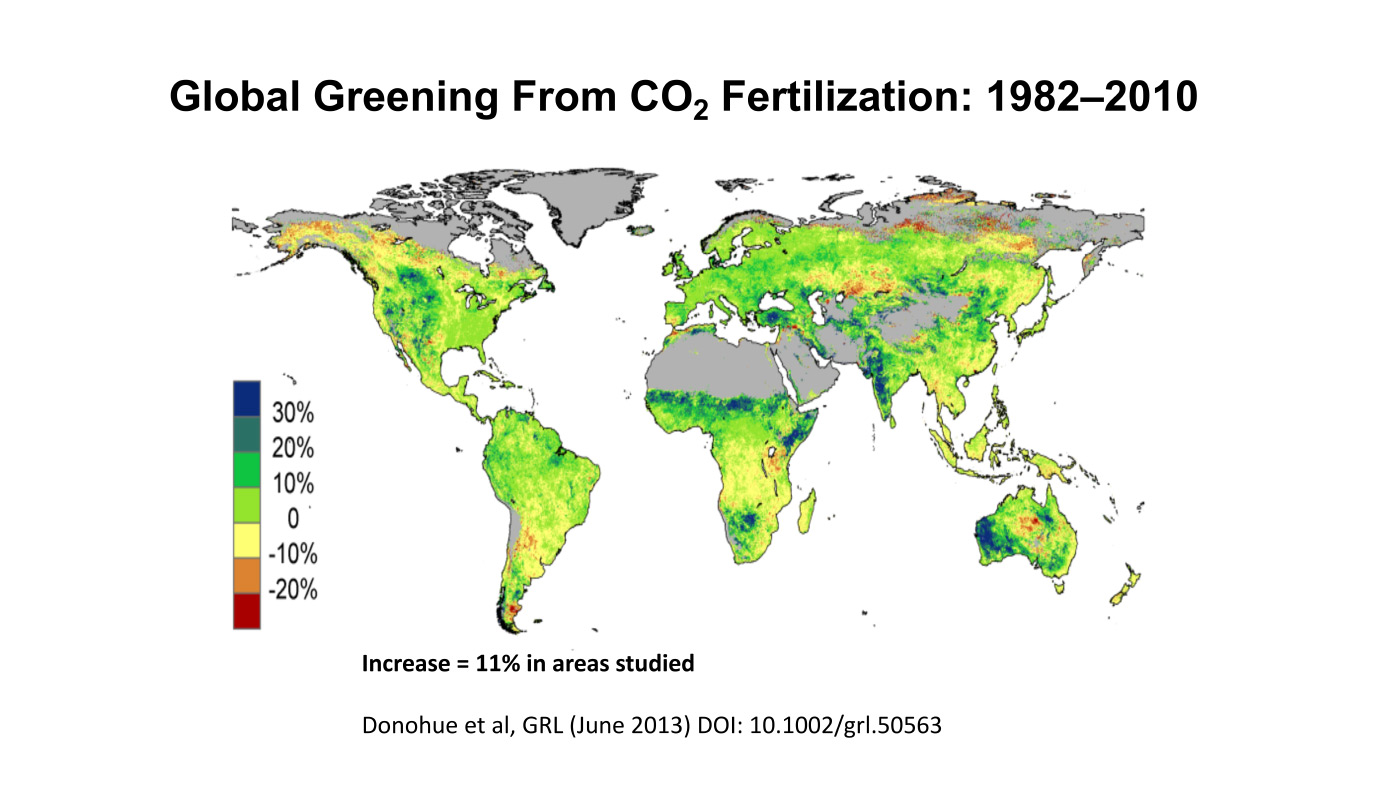

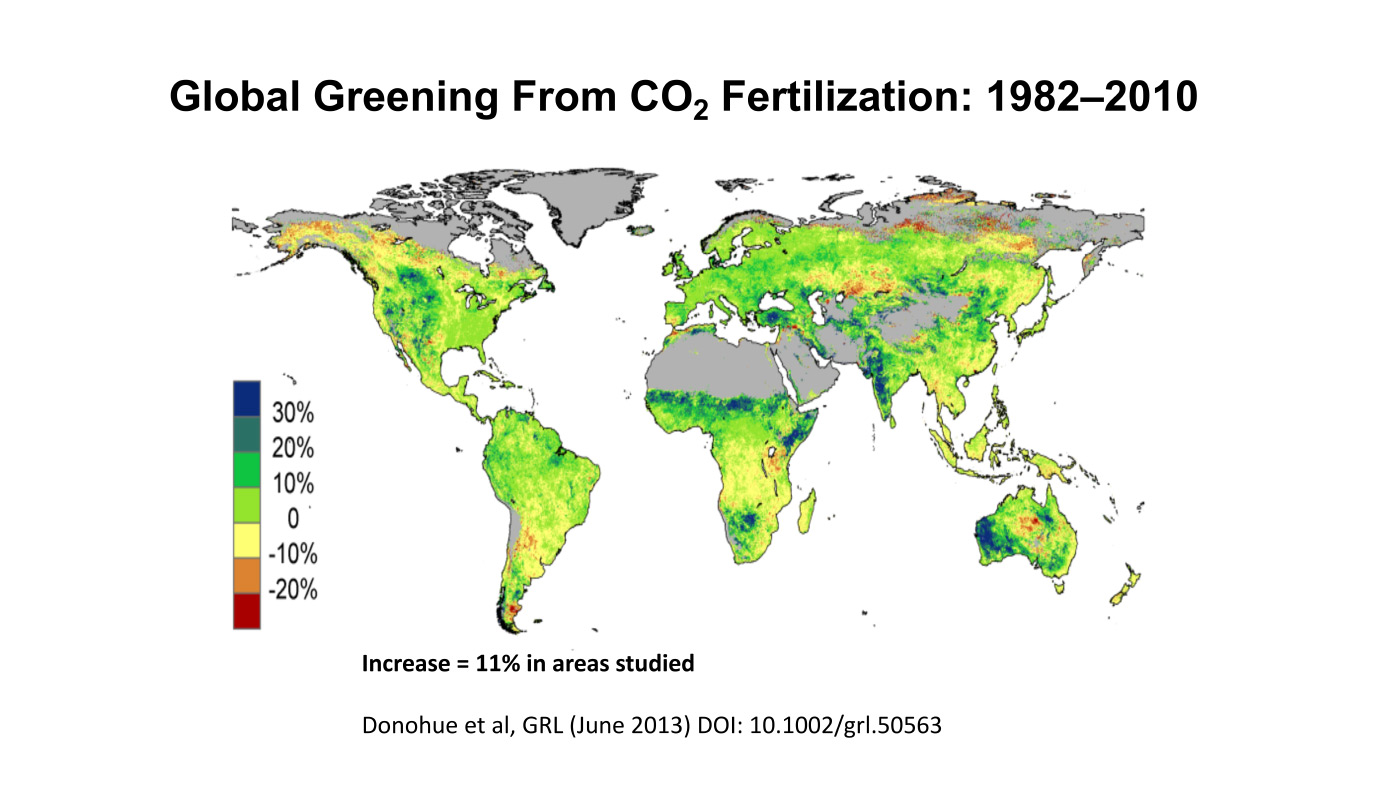

The alleged harm from CO2 is from warming, and the warming observed is much, much less than predictions. In fact, warming as small as we are observing is almost certainly beneficial. It gives slightly longer growing seasons. You can ripen crops a little bit further north than you could before. So, there is completely good news in terms of the temperature directly. But there is even better news. By standards of geological history, plants have been living in a CO2 famine during our current geological period.

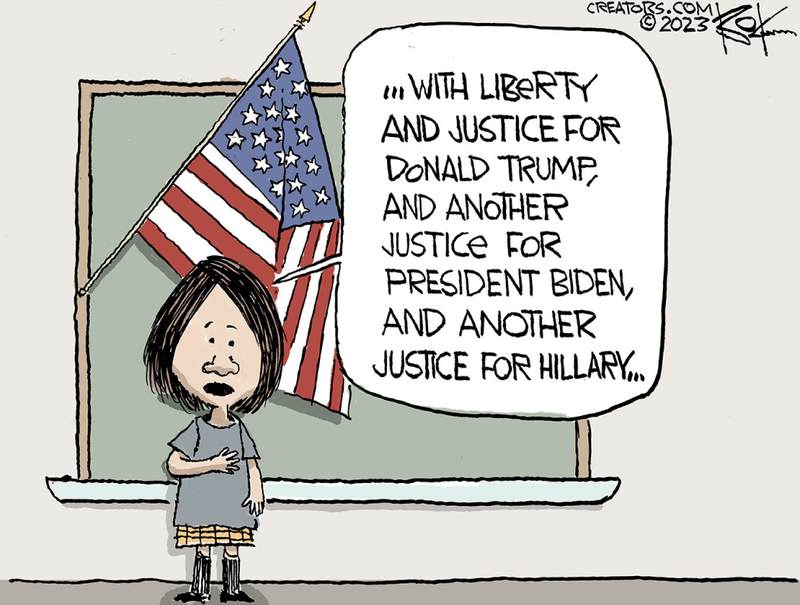

So, the takeaway message is that policies that slow CO2 emissions are based on flawed computer models which exaggerate warming by factors of two or three, probably more. That is message number one. So, why do we give up our freedoms, why do we give up our automobiles, why do we give up a beefsteak because of this model that does not work?

Takeaway message number two is that if you really look into it, more CO2 actually benefits the world. So, why are we demonizing this beneficial molecule that is making plants grow better, that is giving us slightly less harsh winters, a slightly longer growing season? Why is that a pollutant? It is not a pollutant at all, and we should have the courage to do nothing about CO2 emissions. Nothing needs to be done.

See Also Peter Stallinga 2023 Study CO2 Fluxes Not What IPCC Telling You

Footnote: The Core of the CO2 Issue Update July 15

An adversarial comment below goes to the heart of the issue:

“The increase of the CO2 level since 1850 are more than accounted for by manmade emissions. Nature remains a net CO2 sink, not a net emitter.”

The data show otherwise. Warming temperatures favor natural sources/sinks emitting more CO2 into the atmosphere, while previously captured CO2 shifts over time into long term storage as bicarbonates. In fact, rising temperatures are predictive of rising CO2, as shown mathematically.

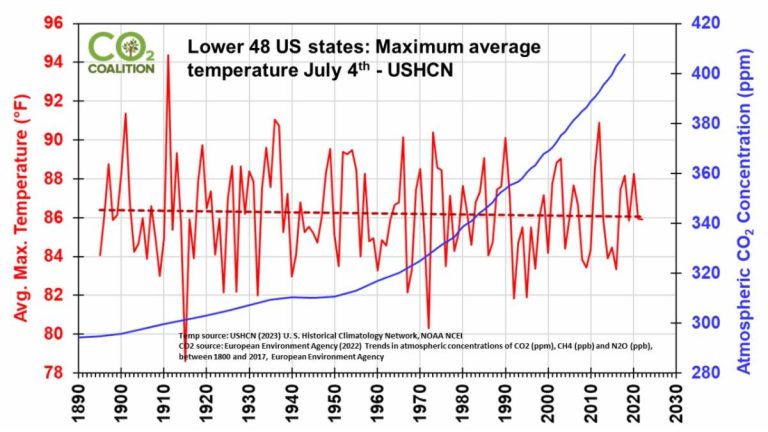

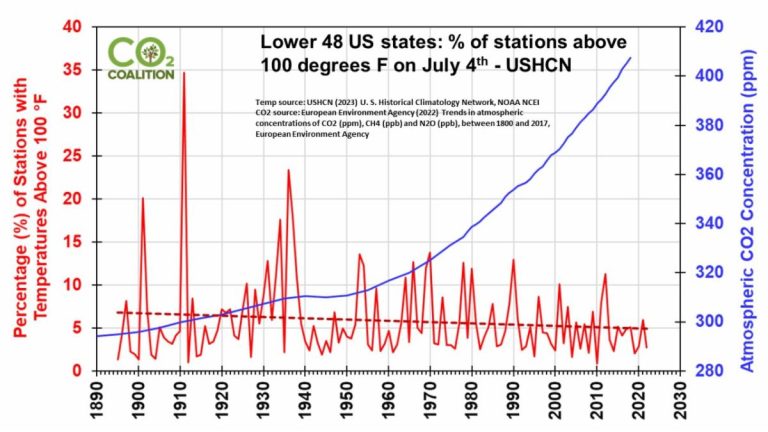

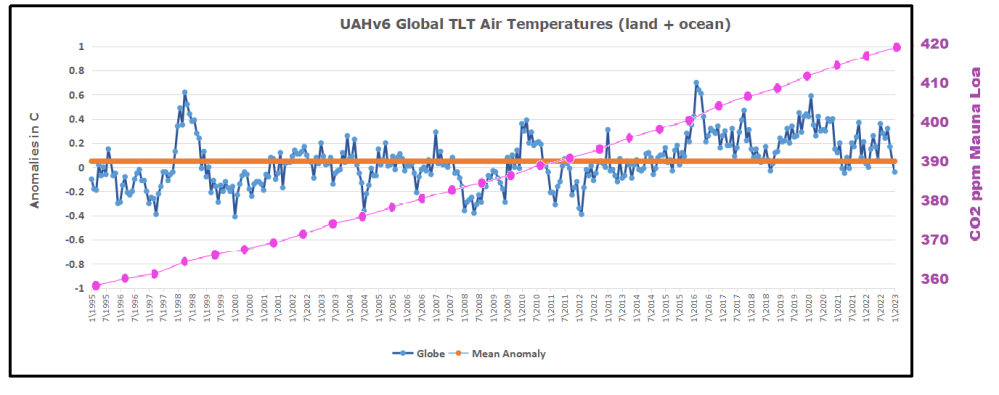

02/2025 Update–Temperature Changes, CO2 Follows

It is the ongoing natural contribution to atmospheric CO2 that is being denied.